CodeBot Setup

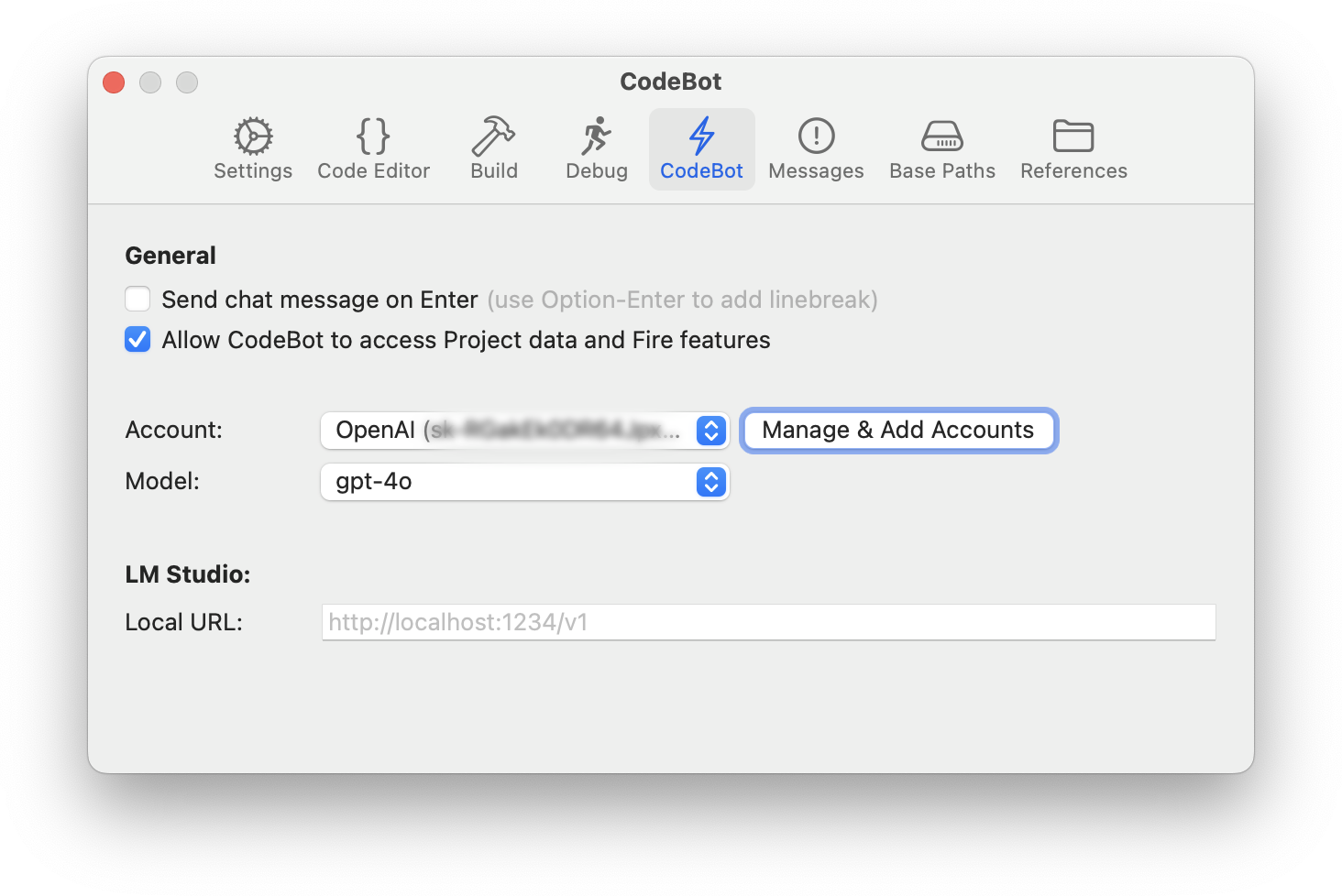

Before you can use CodeBot, the smart coding assistant in Fire and Water, you need to configure it, which takes just a few easy steps. To do so, open the Settings/Options window, available via ⌘, or "Fire|Settings" in Fire, or via Ctrl+, or "Tools|Options" in Water, and go to the "CodeBot" tab.

CodeBot needs one (or more) AI models to work with. You have two options here: you can either connect it to an AI provider of your choice from the list below (the recommended option), or, if you're experienced with AI and/or are running on a very powerful machine, you can run LM Studio locally and have CodeBot use one of the models it supports.

Unless you really know what you are doing, we recommend configuring a third-party AI provider.

Adding AI Accounts

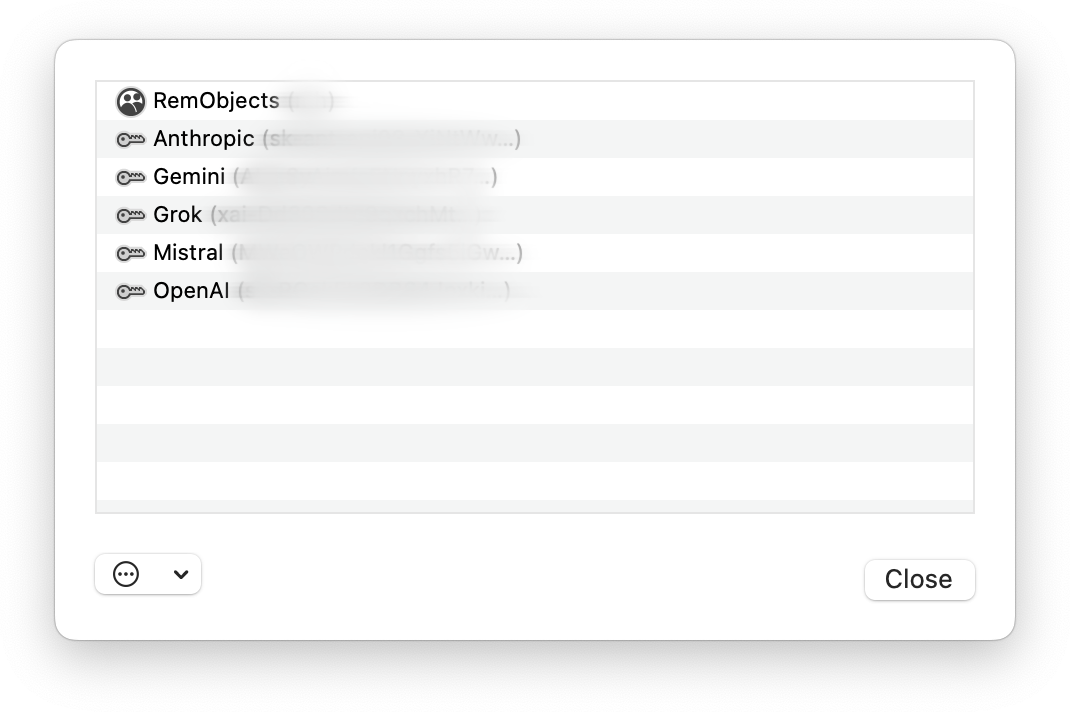

To do this, click the "Manage Accounts" button to bring up the new Account Manager (which is also always available directly from the "Tools" menu).

The Account Manager may already show the RemObjects account that Fire/Water uses to keep your licenses up to date. You can add more accounts by clicking the "..." button at the bottom left, including accounts for the following AI providers:

- Anthropic (Claude)

- Google (Gemini)

- xAI (Grok)

- Mistral

- OpenAI (ChatGPT)

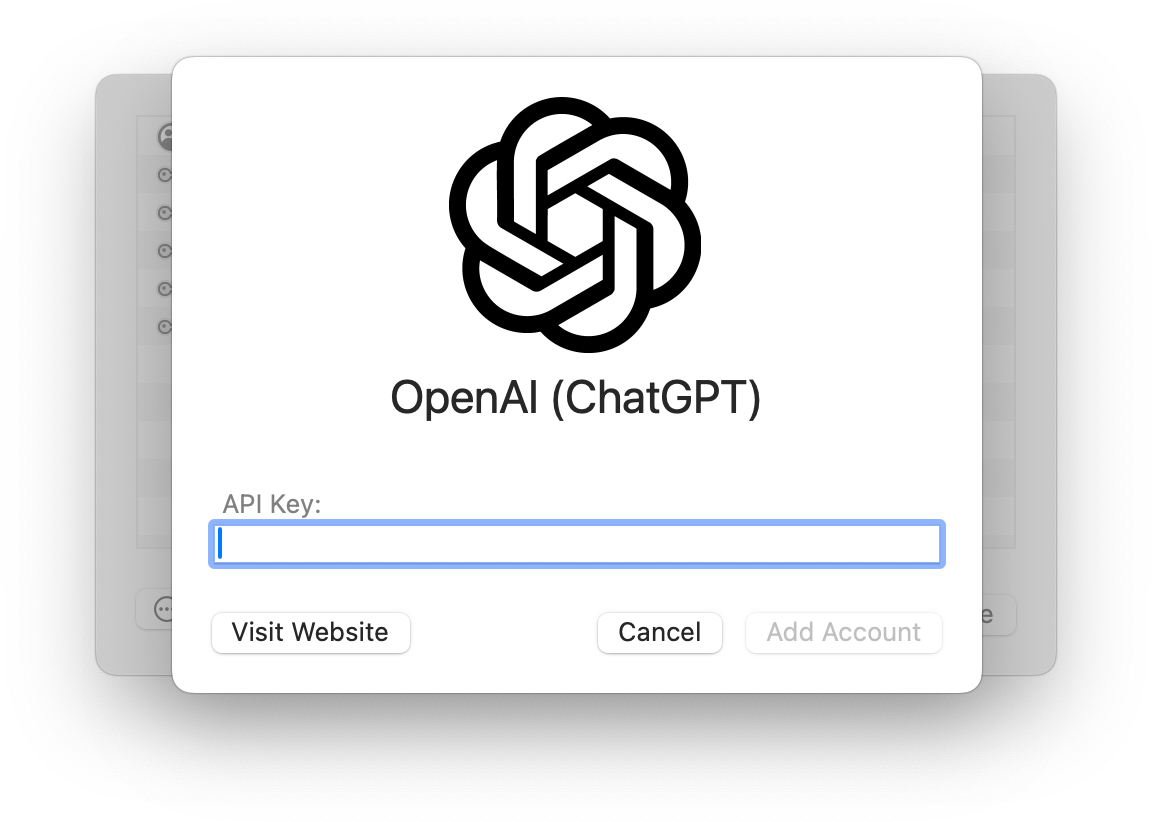

Simply pick the account type you would like to add, and in the subsequent window, enter your API key. If you are unsure where to find that key (or still need to sign up for an account with the provider in question), you can click the "Visit Website" button to open their site and follow the instructions there.

Once the key is entered, click "Add Account". The Account Manager will verify that the API key works and then add the new account.
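If a key is rejected and you want to rule out a problem with the key itself, you can test it outside the IDE. The Python sketch below makes no claim about how the Account Manager performs its own check; it simply calls the publicly documented model-listing endpoints of OpenAI (`GET https://api.openai.com/v1/models` with a bearer token) and Anthropic (`GET https://api.anthropic.com/v1/models` with `x-api-key` and `anthropic-version` headers). The function names and the `API_KEY` environment variable are hypothetical, chosen for illustration only.

```python
import json
import os
import sys
import urllib.error
import urllib.request

# Model-listing endpoints; an HTTP 200 response implies the key was accepted.
ENDPOINTS = {
    "openai": "https://api.openai.com/v1/models",
    "anthropic": "https://api.anthropic.com/v1/models",
}


def build_key_check_request(provider: str, api_key: str) -> urllib.request.Request:
    """Build a GET request that lists models, authenticated with the given key."""
    if provider == "openai":
        headers = {"Authorization": f"Bearer {api_key}"}
    elif provider == "anthropic":
        headers = {"x-api-key": api_key, "anthropic-version": "2023-06-01"}
    else:
        raise ValueError(f"unsupported provider: {provider}")
    return urllib.request.Request(ENDPOINTS[provider], headers=headers, method="GET")


def key_works(provider: str, api_key: str) -> bool:
    """Return True if the provider accepts the key, False on HTTP 401/403."""
    request = build_key_check_request(provider, api_key)
    try:
        with urllib.request.urlopen(request, timeout=15) as response:
            json.load(response)  # the body should be a JSON list of models
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):
            return False
        raise  # other errors (rate limits, outages) say nothing about the key


if __name__ == "__main__":
    # Hypothetical usage: API_KEY=... python check_key.py openai
    # Reading the key from the environment keeps it out of your shell history.
    provider = sys.argv[1]
    print("key accepted" if key_works(provider, os.environ["API_KEY"]) else "key rejected")
```

Other providers (Google, xAI, Mistral) have their own endpoints and authentication schemes; check their API documentation before adapting the sketch.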

If this is the first and only AI account you are adding, it will automatically become your active account.

Selecting AI Accounts and Models

Back in the Settings/Options window, you can now choose between all the AI accounts you have connected (if you connected more than one) from the "Account:" popup. Your first one should already be selected.

As you pick an account, the "Model:" popup will populate with the list of LLMs available from that provider (and suitable for CodeBot). You can check with the AI provider to see which model they suggest. Note that capabilities and usage costs can vary drastically between models.

Our current recommendations (as of June 2025) are as follows:

- Anthropic (Claude)
  - claude-sonnet-4 (general coding, faster & cheaper)
  - claude-opus-4 (more advanced & capable of deeper reasoning, more expensive)

- Google (Gemini)
  - gemini-2.5-flash (fast, large context window)
  - gemini-2.5-pro (advanced reasoning and "deep thinking", more expensive)

- xAI (Grok)
  - grok-3-mini
  - grok-3-fast (fast, cheaper)
  - grok-3

- Mistral
  - mistral-large-latest

- OpenAI
  - gpt-4o (great for general use, with reasoning, fast and cheap)
  - gpt-4.1-mini (cheaper and has a much larger context window, but is less capable)

Note: AI model offerings and names change constantly, often faster than we can update this page. If you're seeing different models than listed here, please check the provider's site or API dashboard for up-to-date information.

Working with Local Models

If you want CodeBot to use local models running on your system (or local network), you can use its support for LM Studio. LM Studio is a desktop application that makes it (fairly) easy to download and run a large variety of LLMs locally on your own development machine or server, including models such as DeepSeek, Llama, and more.

Running a local model is a good option if you are concerned about code privacy and do not want your questions and code shared with a third-party AI provider.

That said, while LM Studio does take away some of the complexity, this option is still only recommended if you are familiar with the tool and experienced with running models locally. Please refer to the documentation provided by the creators of LM Studio for how to get it set up.

Once up and running, LM Studio offers the option to run a local server, by default available on http://localhost:1234/v1.
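Before pointing CodeBot at LM Studio, it can help to confirm that the server is reachable and see which models it exposes. LM Studio's server provides an OpenAI-compatible API, so a `GET` request to `/models` under the base URL should return the models the server currently offers (depending on LM Studio's settings, this may be all downloaded models or only loaded ones). The Python sketch below assumes that endpoint and the default address above; its function names are illustrative and are not part of CodeBot or LM Studio.

```python
import json
import sys
import urllib.request

DEFAULT_BASE_URL = "http://localhost:1234/v1"  # LM Studio's default server address


def models_url(base_url: str) -> str:
    """Append the OpenAI-compatible /models path to a base URL such as .../v1."""
    return base_url.rstrip("/") + "/models"


def parse_model_ids(body: str) -> list[str]:
    """Extract model IDs from an OpenAI-style {"data": [{"id": ...}, ...]} response."""
    payload = json.loads(body)
    return [entry["id"] for entry in payload.get("data", [])]


def list_local_models(base_url: str = DEFAULT_BASE_URL) -> list[str]:
    """Query the server and return the IDs of the models it reports."""
    with urllib.request.urlopen(models_url(base_url), timeout=5) as response:
        return parse_model_ids(response.read().decode("utf-8"))


if __name__ == "__main__":
    base = sys.argv[1] if len(sys.argv) > 1 else DEFAULT_BASE_URL
    try:
        for model_id in list_local_models(base):
            print(model_id)
    except OSError as err:  # URLError and timeouts are subclasses of OSError
        print(f"LM Studio server not reachable at {base}: {err}")
```

Run it without arguments to check the default address, or pass a different base URL as the first argument (for example `http://192.168.1.20:1234/v1`, a placeholder for a machine on your network). The IDs it prints should correspond to what you can select for CodeBot.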

You can configure CodeBot to use LM Studio by selecting the "LM Studio" option from the "Account:" popup. This option is always available, whether or not you have accounts configured. Once it is selected, you can choose one of the models you have installed from the "Model:" popup, to be used for CodeBot.

If you are running LM Studio on a port other than the default, or on a different server on your local network, you can manually provide its URL in the "LM Studio URL" field.

Note: LM Studio allows you to install various models, not all of which are compatible with CodeBot and/or suitable for the tasks CodeBot requires. If you select a model that is not compatible with CodeBot, your requests will most likely result in error messages.

Also note that deep reasoning models such as DeepSeek are very verbose: they not only think deeply (or pretend to, as the case may be) before answering, but often also share their entire thought process before the real answer.
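To illustrate what such output looks like: DeepSeek-R1-style models typically wrap their reasoning in `<think>...</think>` tags ahead of the actual answer. If you experiment with such a model outside CodeBot, a sketch like the following can separate the two parts. It assumes that tag convention; other reasoning models may use different markers, and `split_reasoning` is a hypothetical helper, not something CodeBot or LM Studio provides.

```python
import re

# Matches one <think>...</think> block, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is empty if no <think> block is present."""
    reasoning = "\n".join(part.strip() for part in THINK_BLOCK.findall(text))
    answer = THINK_BLOCK.sub("", text).strip()
    return reasoning, answer


if __name__ == "__main__":
    sample = "<think>\nThe user wants a loop. Maybe a for loop...\n</think>\n\nUse a for loop."
    reasoning, answer = split_reasoning(sample)
    print("Reasoning:", reasoning)
    print("Answer:", answer)
```

The non-greedy `.*?` keeps multiple reasoning blocks separate instead of swallowing the answer text that lies between them.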