Elements Docs

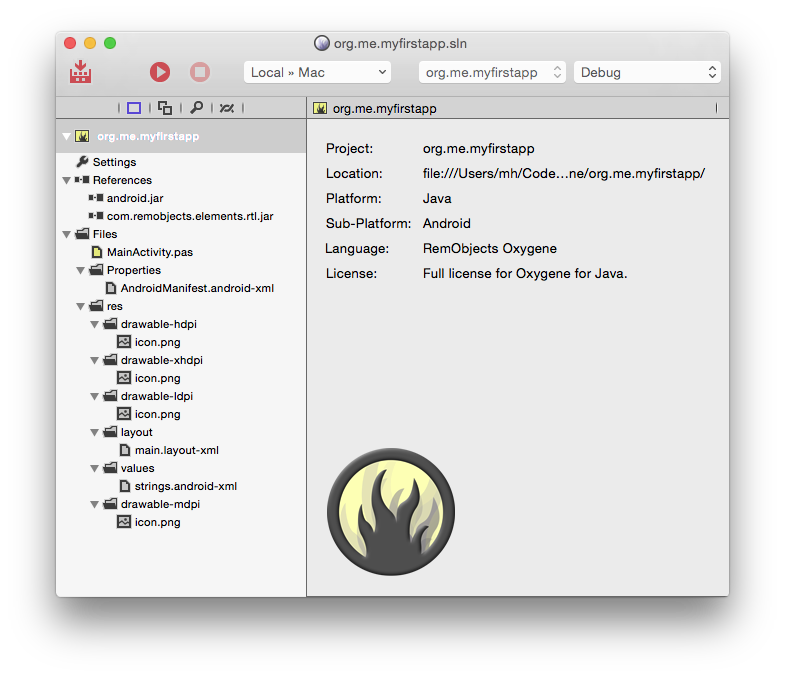

One toolchain, six languages, all major platforms: Elements is a modern development tool stack for creating applications for all of today's platforms, using either our very own Oxygene Language or the C#, Java, Mercury, Go or Swift languages.

All the languages let you leverage the same language skill set, no matter what platform(s) you are developing for, without losing the benefit of working natively and directly with the underlying platforms — be it the .NET Framework, the Java and Android Runtime Libraries, the Cocoa and Cocoa Touch Frameworks for the Apple platforms, or our new Island platform for CPU-native Windows, Linux and Android NDK projects.

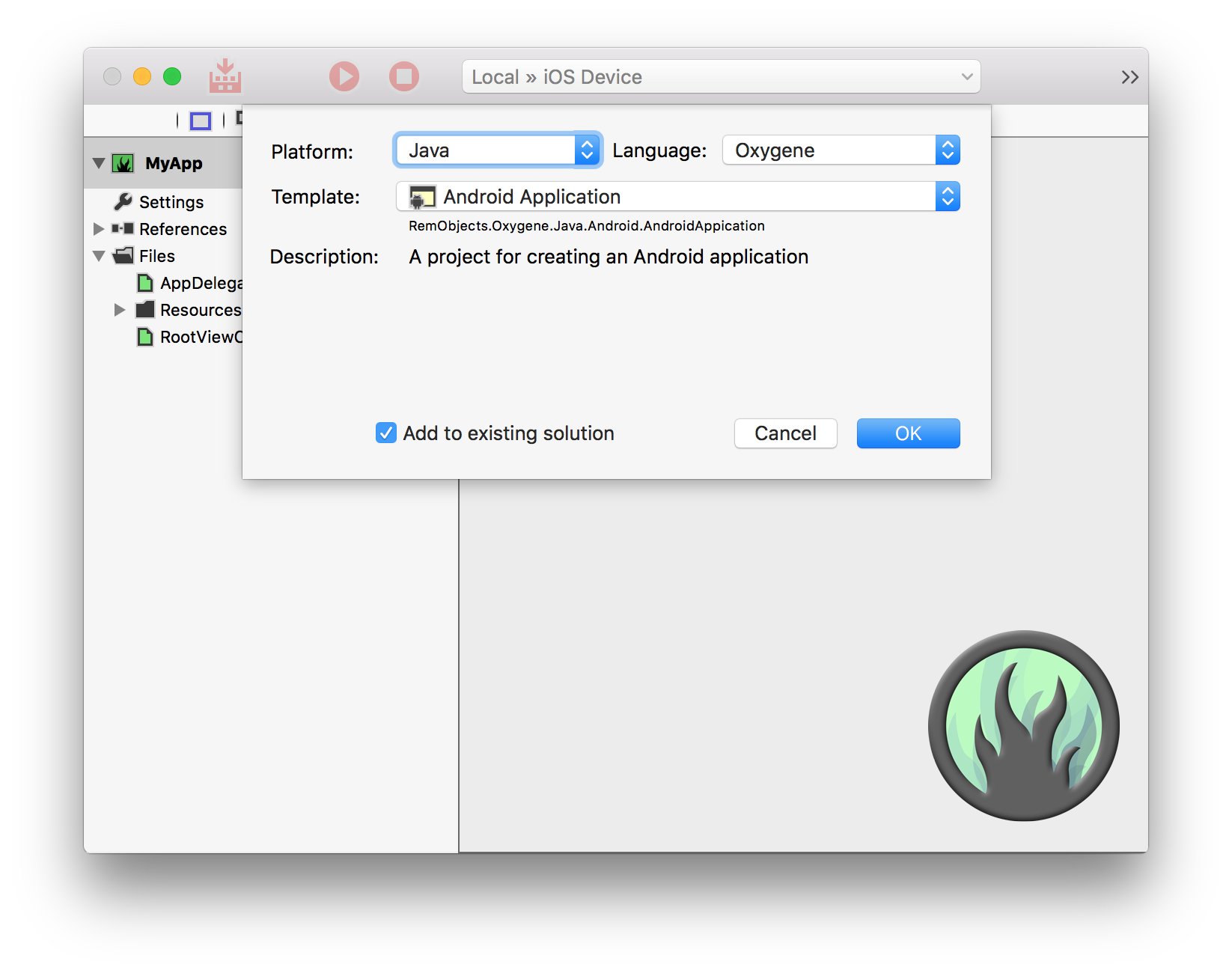

RemObjects Oxygene is our own state-of-the art programming language for the 21st century. Combining ideas from many origins along with original concepts unique to Oxygene, the language provides an unprecedented level of productivity.

Oxygene is the most advanced and most versatile general purpose programming language available today.

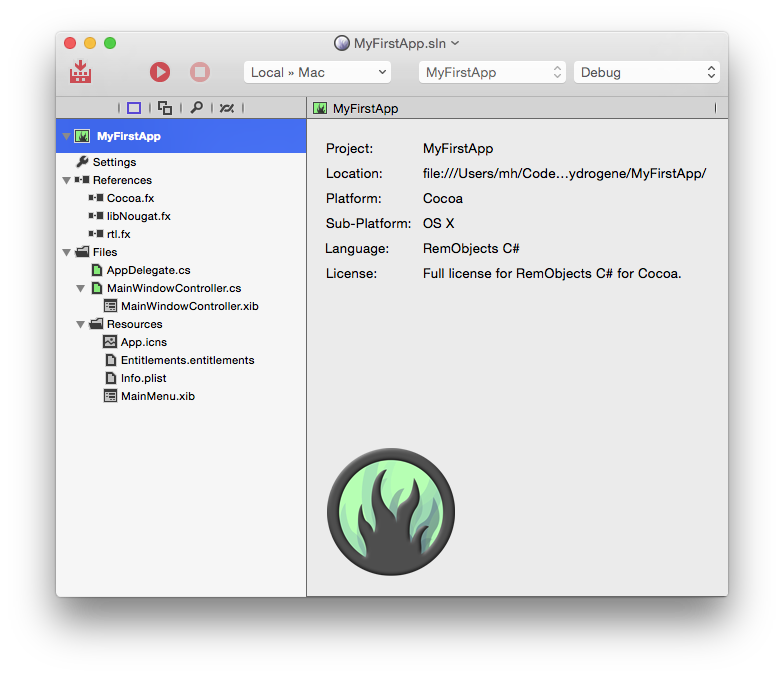

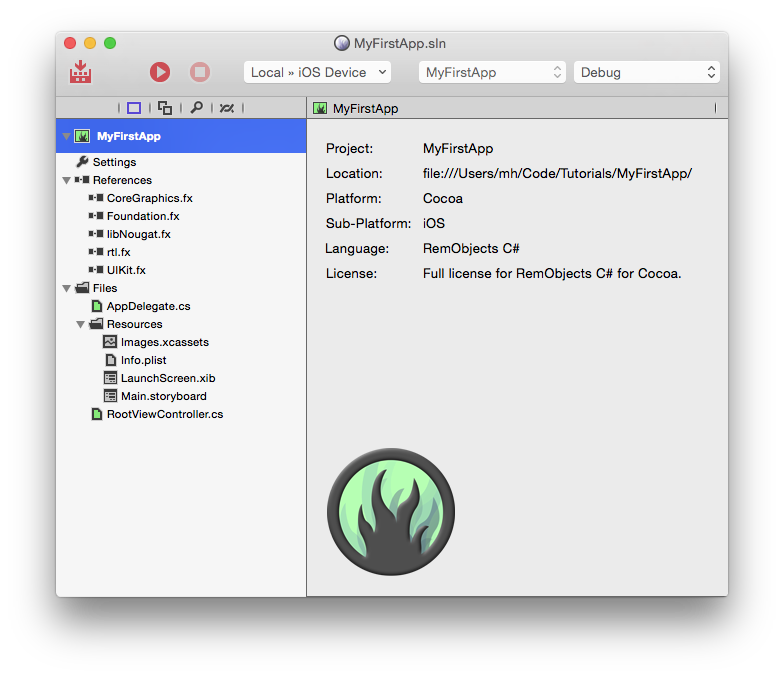

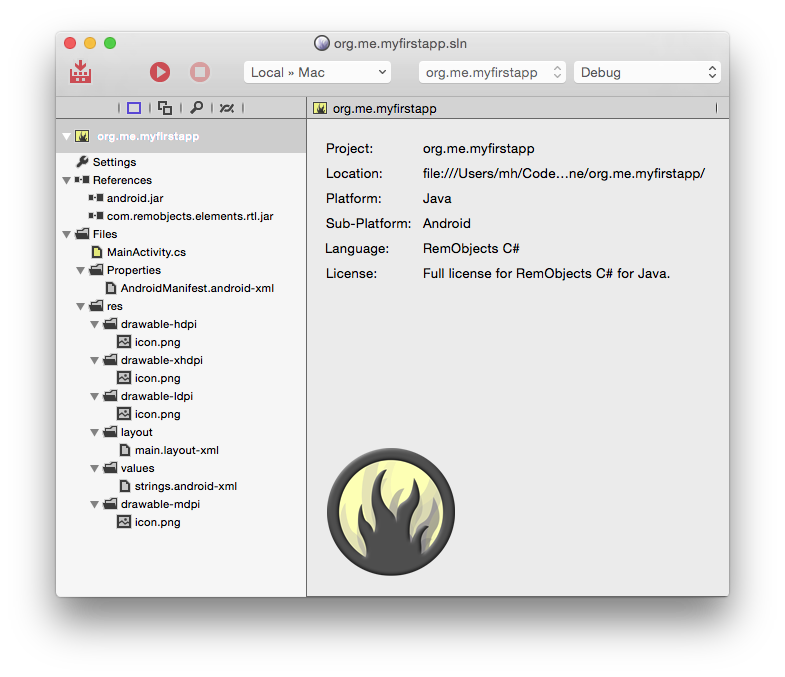

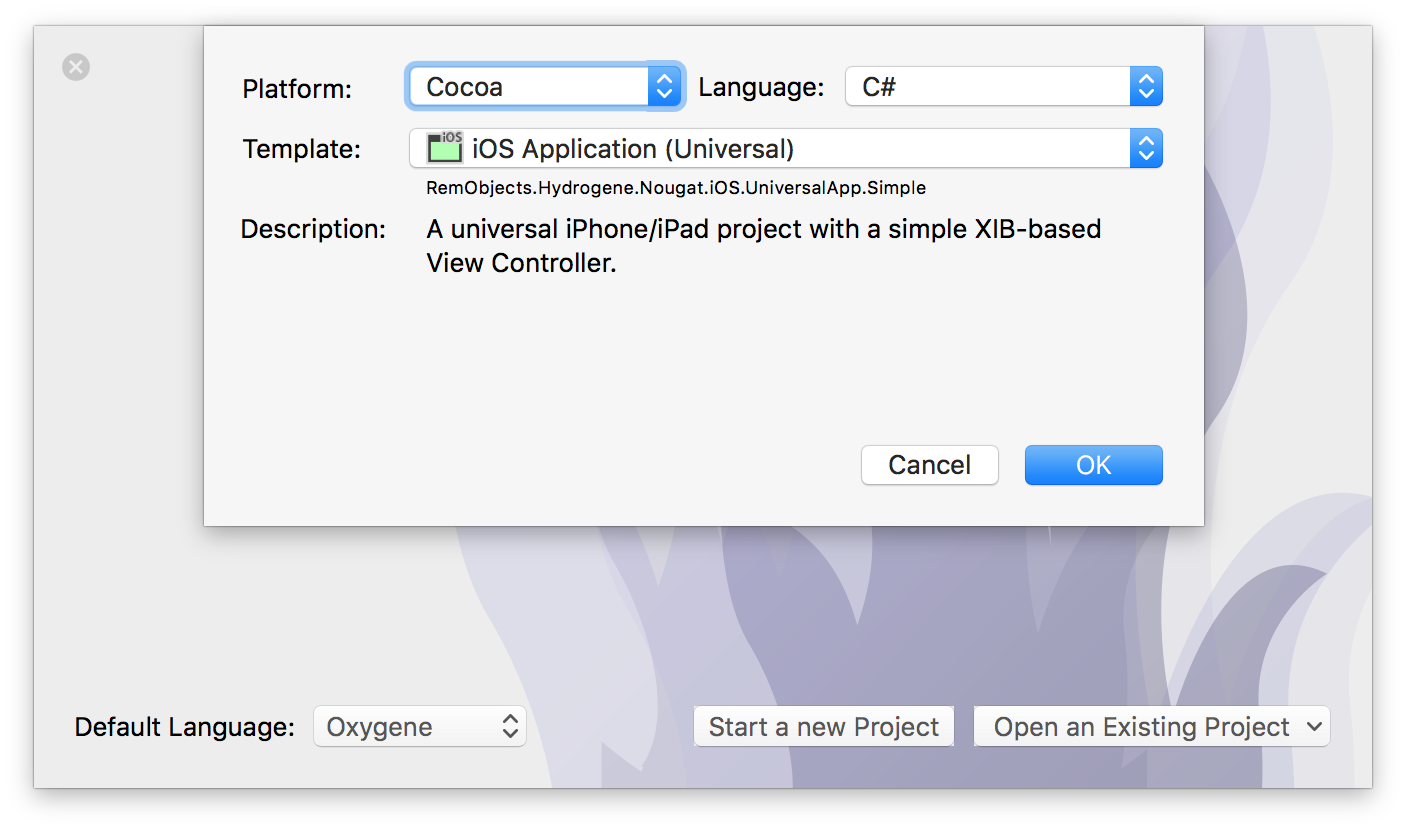

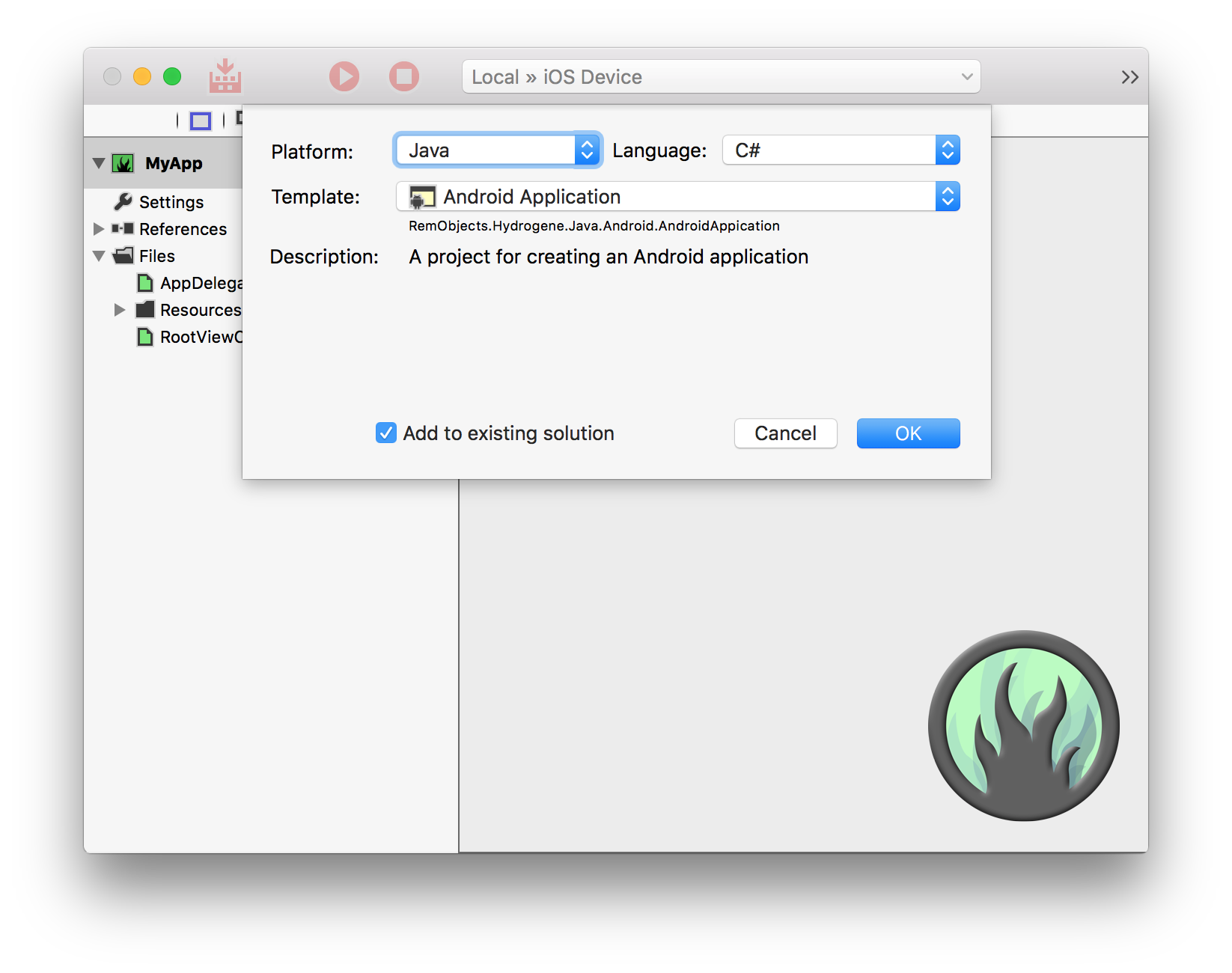

RemObjects C# is 100% C# — now available natively on the Java/Android and Cocoa platforms as well. Primarily designed for developers already familiar with C# on .NET, it allows you to expand your existing skills to iOS, Android and Mac development in a snap.

RemObjects Iodine is our take on the Java programming language — again brought over to now be usable on all platfroms, including .NET, Cocoa and Island.

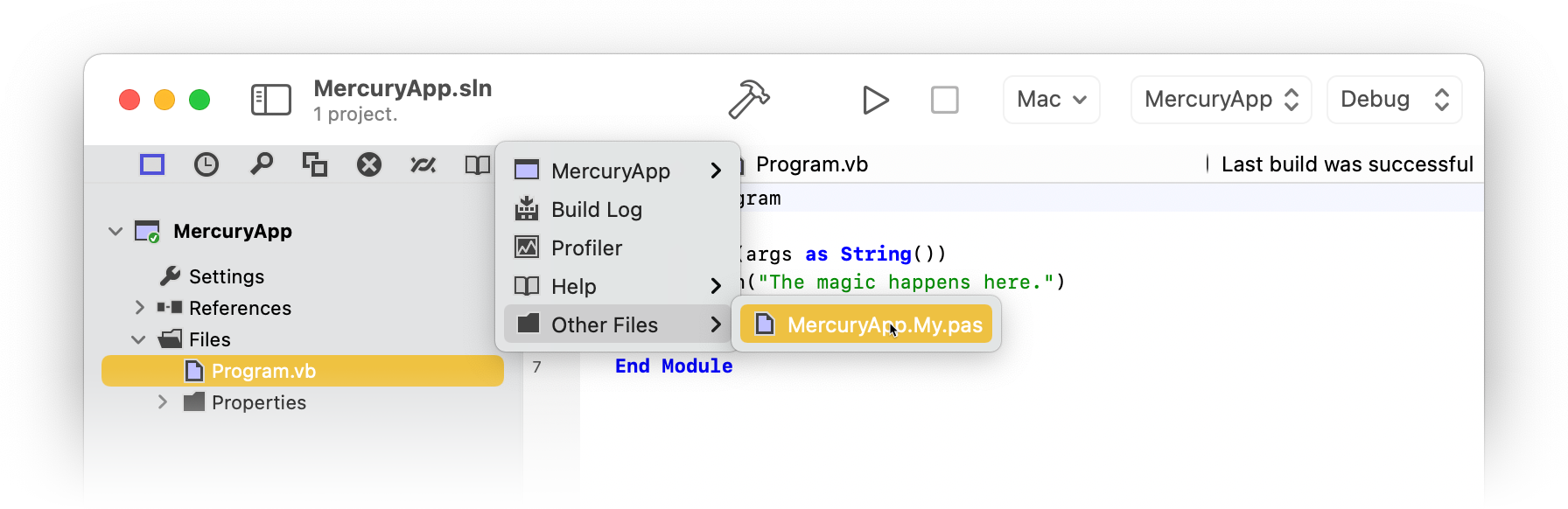

RemObjects Mercury is an implementation of the BASIC programming language that is fully code-compatible with Microsoft Visual Basic.NET™, but takes it to the next level, and to all elements platforms.

RemObjects Go adds support for the Go language (and access to the vast Go Base Library for all languages).

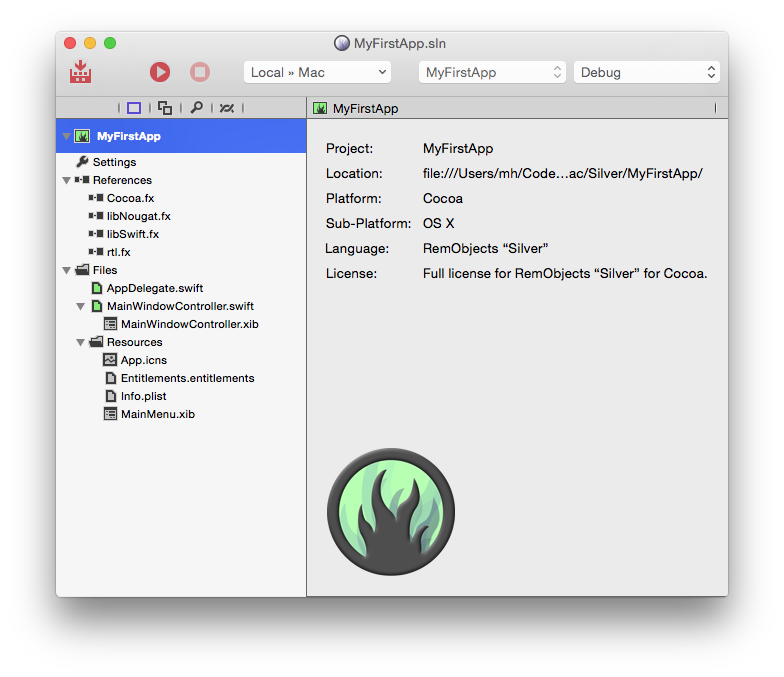

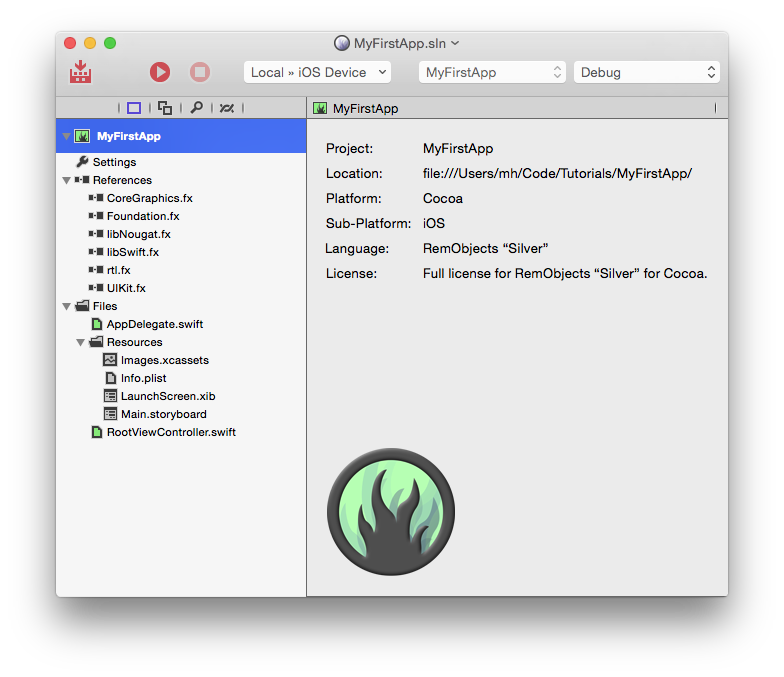

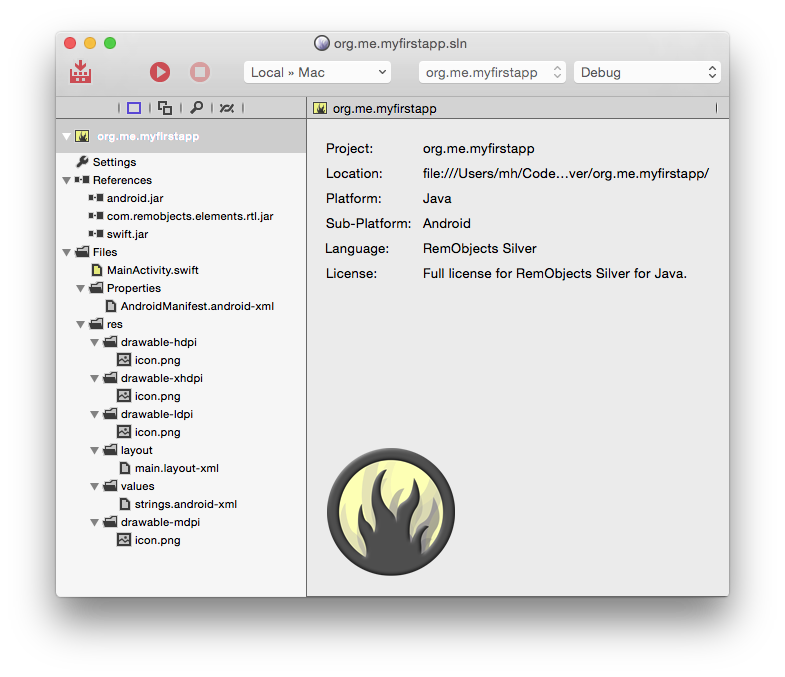

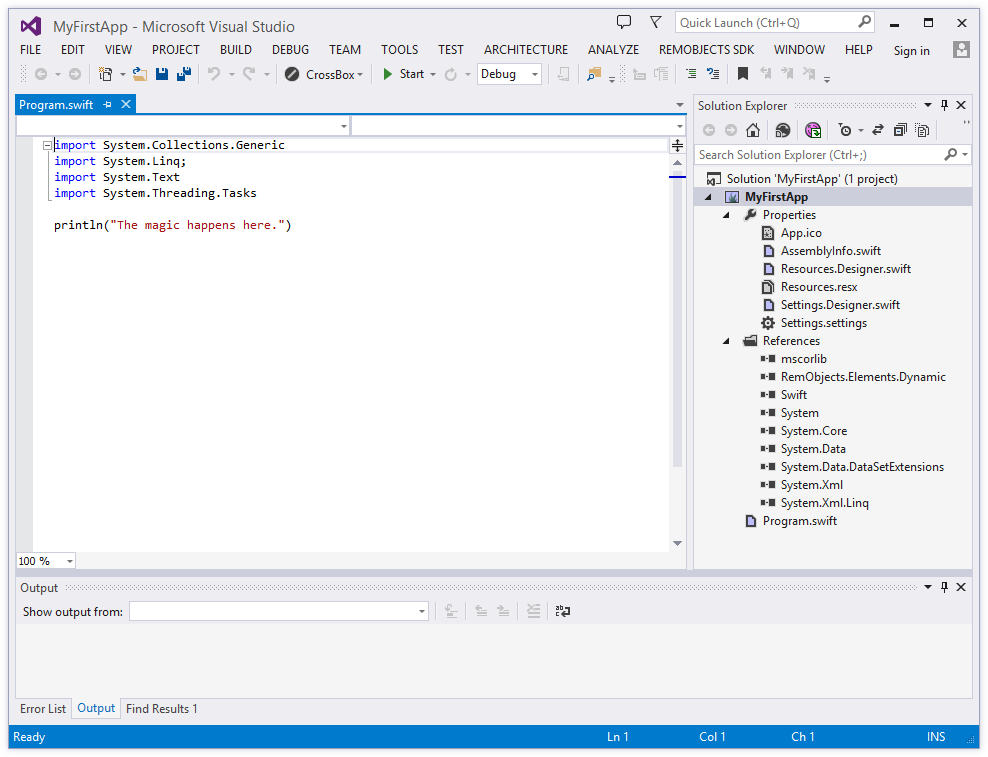

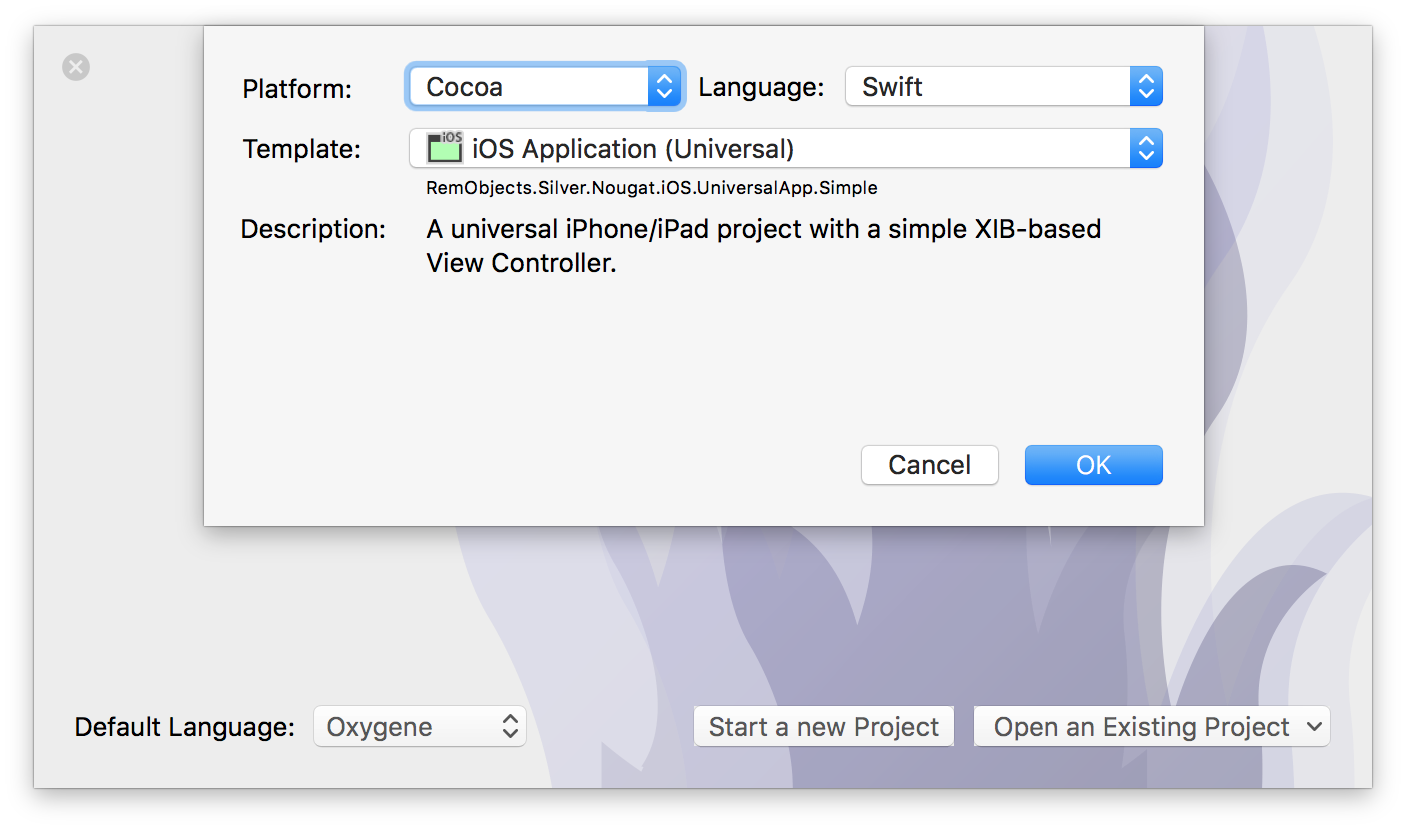

RemObjects Swift is our implementation of Apple's new Swift programming language — brought over to Java/Android and .NET/Mono development (as well as of course Cocoa).

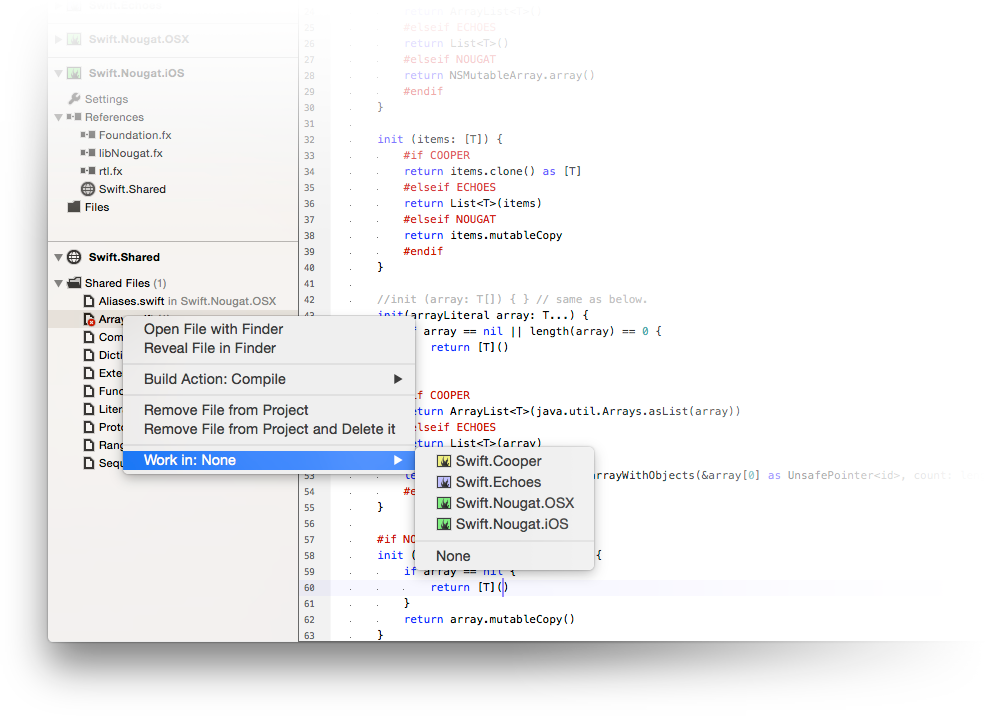

Depending on how you roll, you can pick your language of choice, or you can mix any of the six languages in the same project (on a file-by-file basis) to use each language for its strengths, where applicable.

Documentation Overview

-

The first six sections, Oxygene, C#, Iodine, Mercury, Go & Swift and explore each of the languages individually. Go here to get a first introduction to your language and to learn about specific language features, syntaxes and capabilities.

-

Concepts explores specific ideas and technologies available in the languages in more depth. Many of these apply to all languages, while some are specific to more advanced features only available in our own Oxygene language.

-

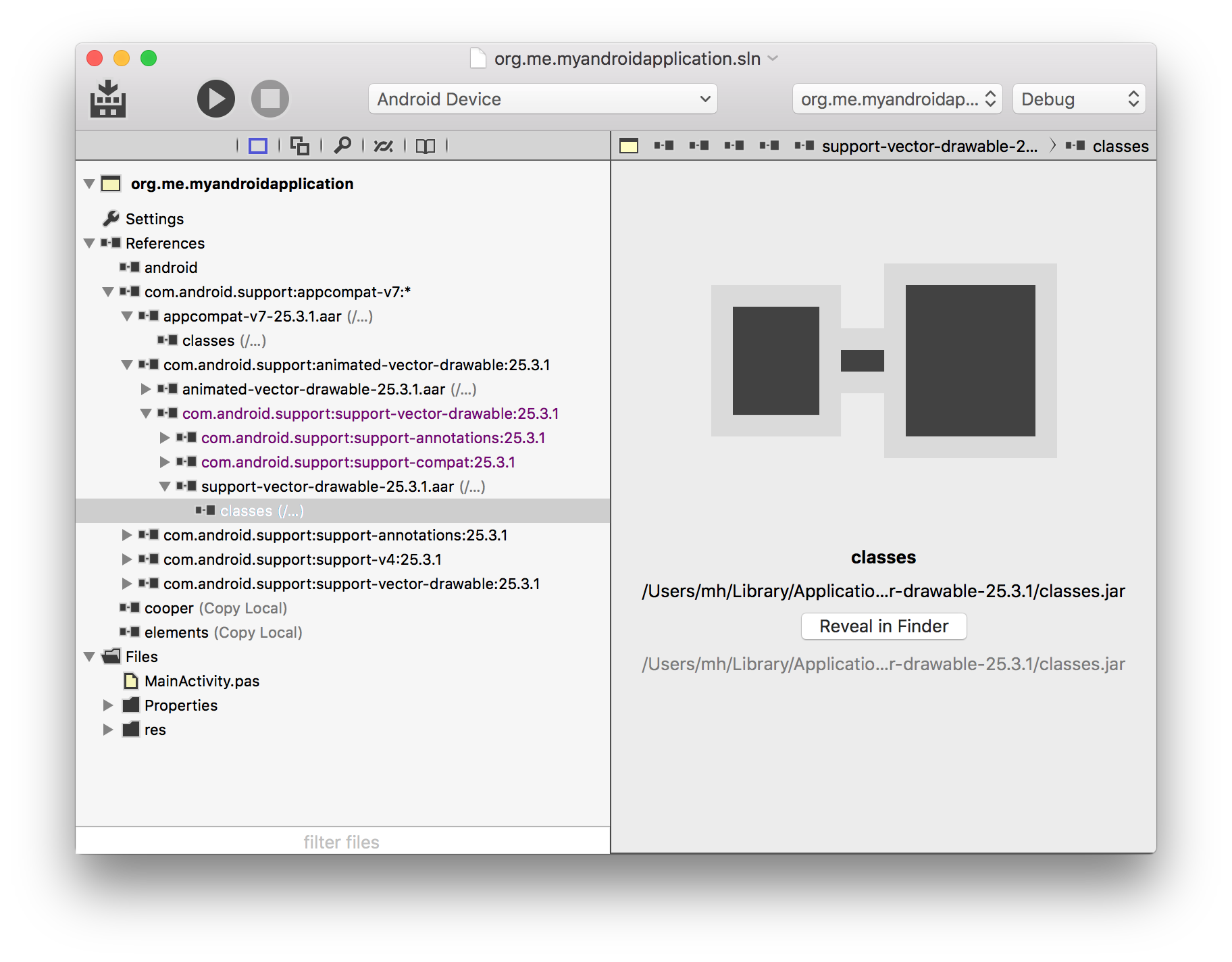

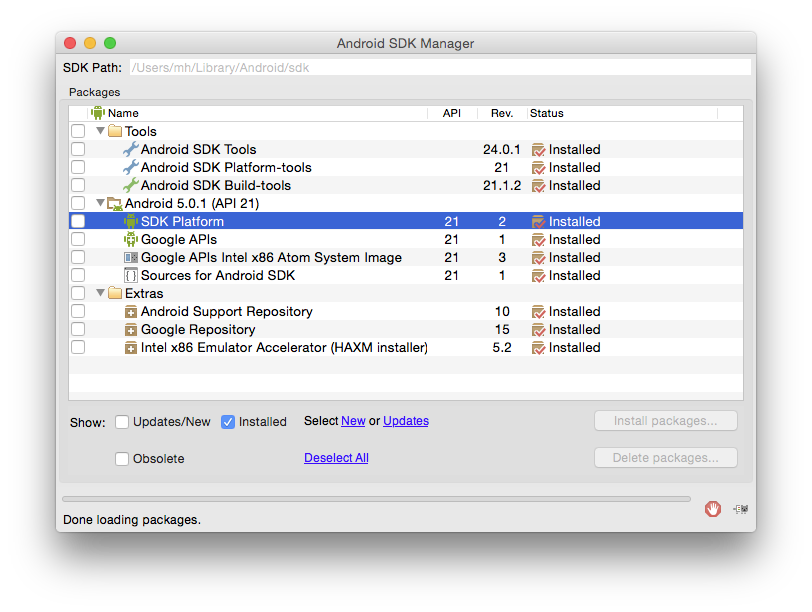

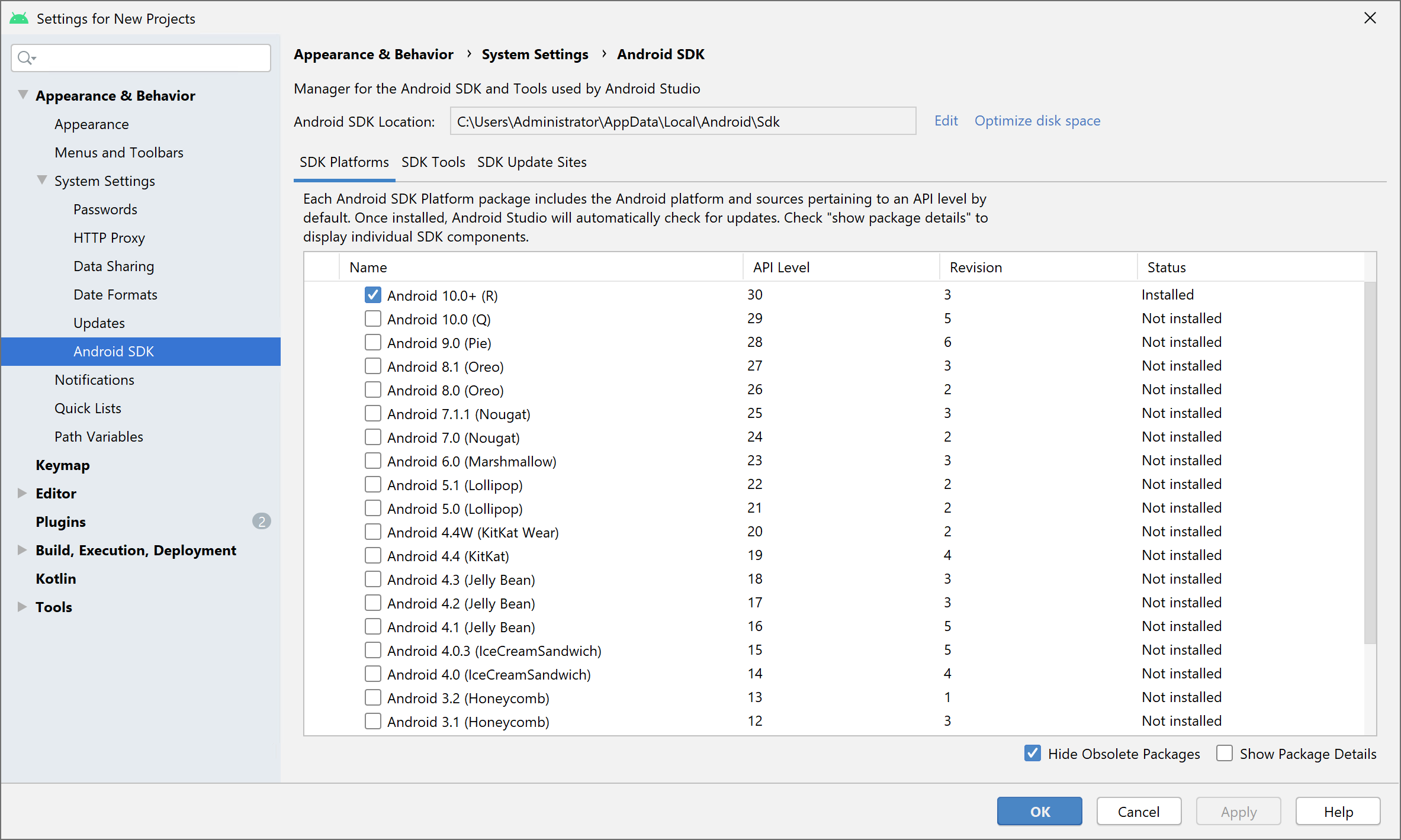

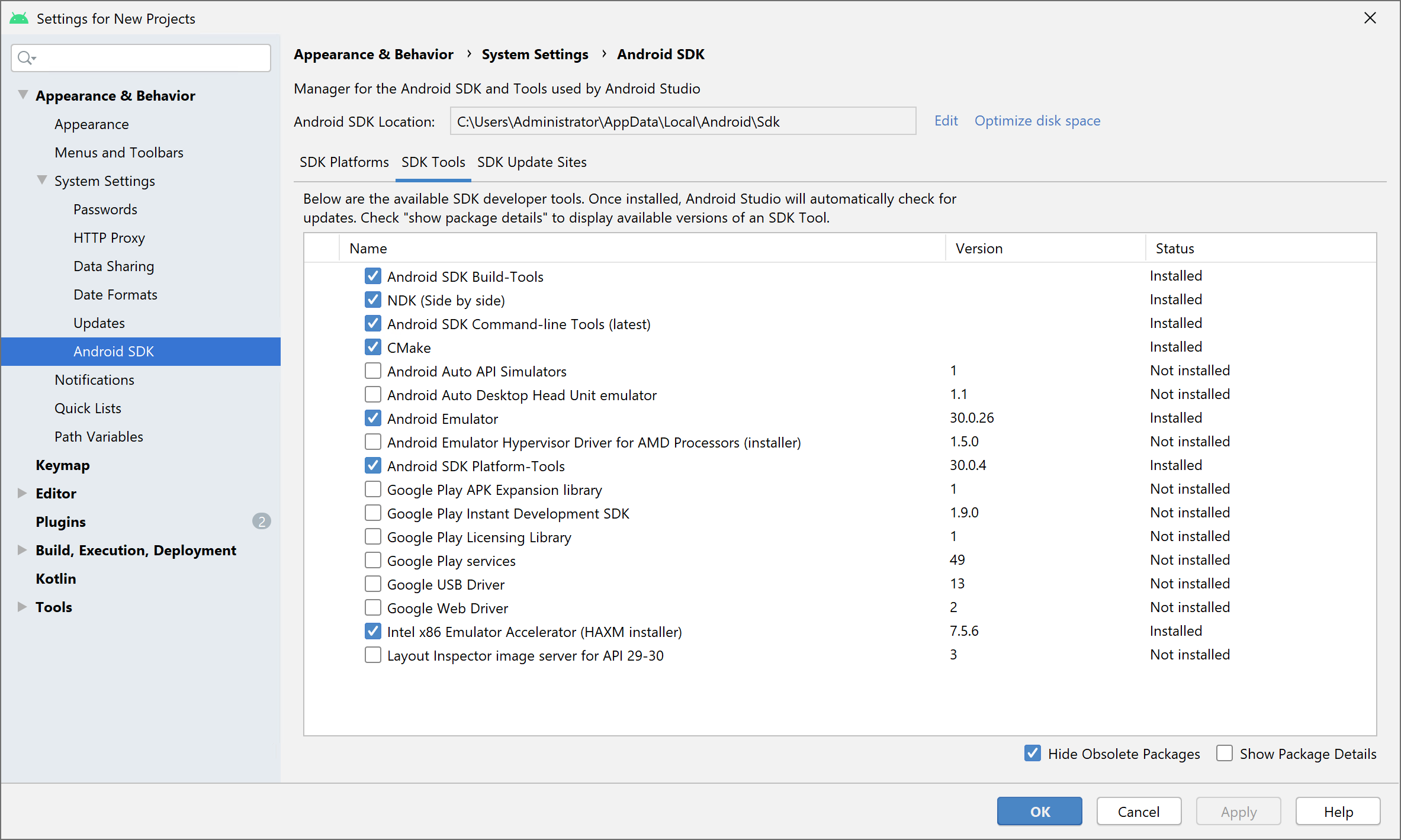

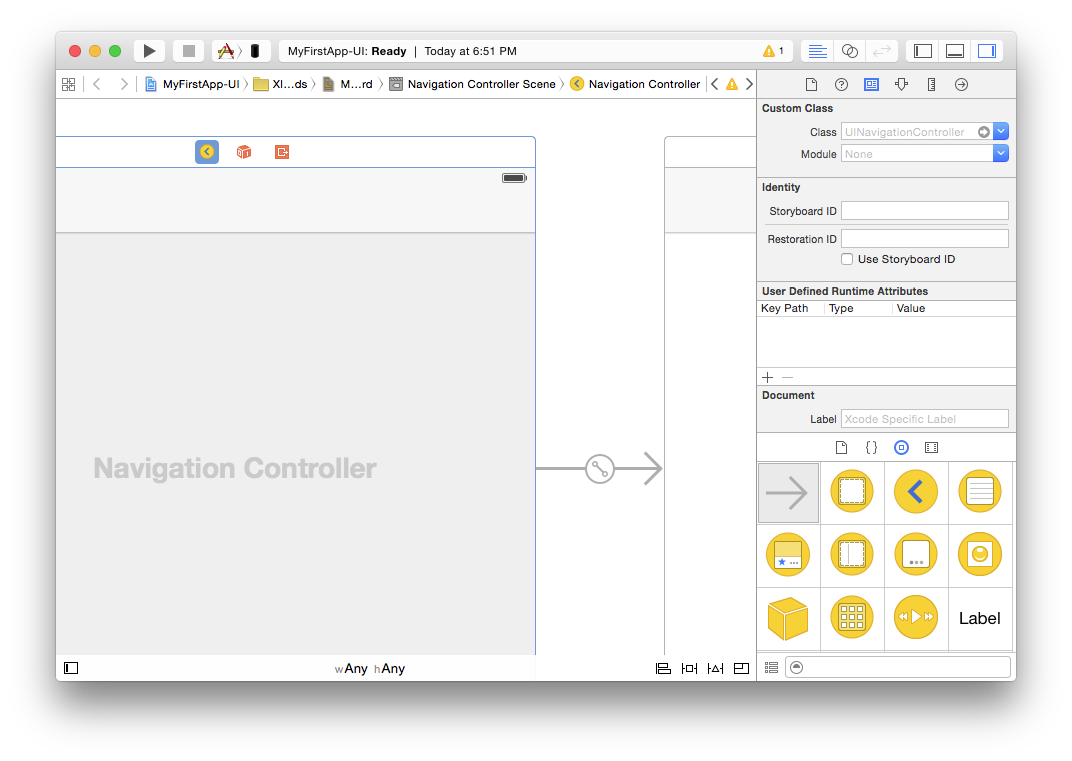

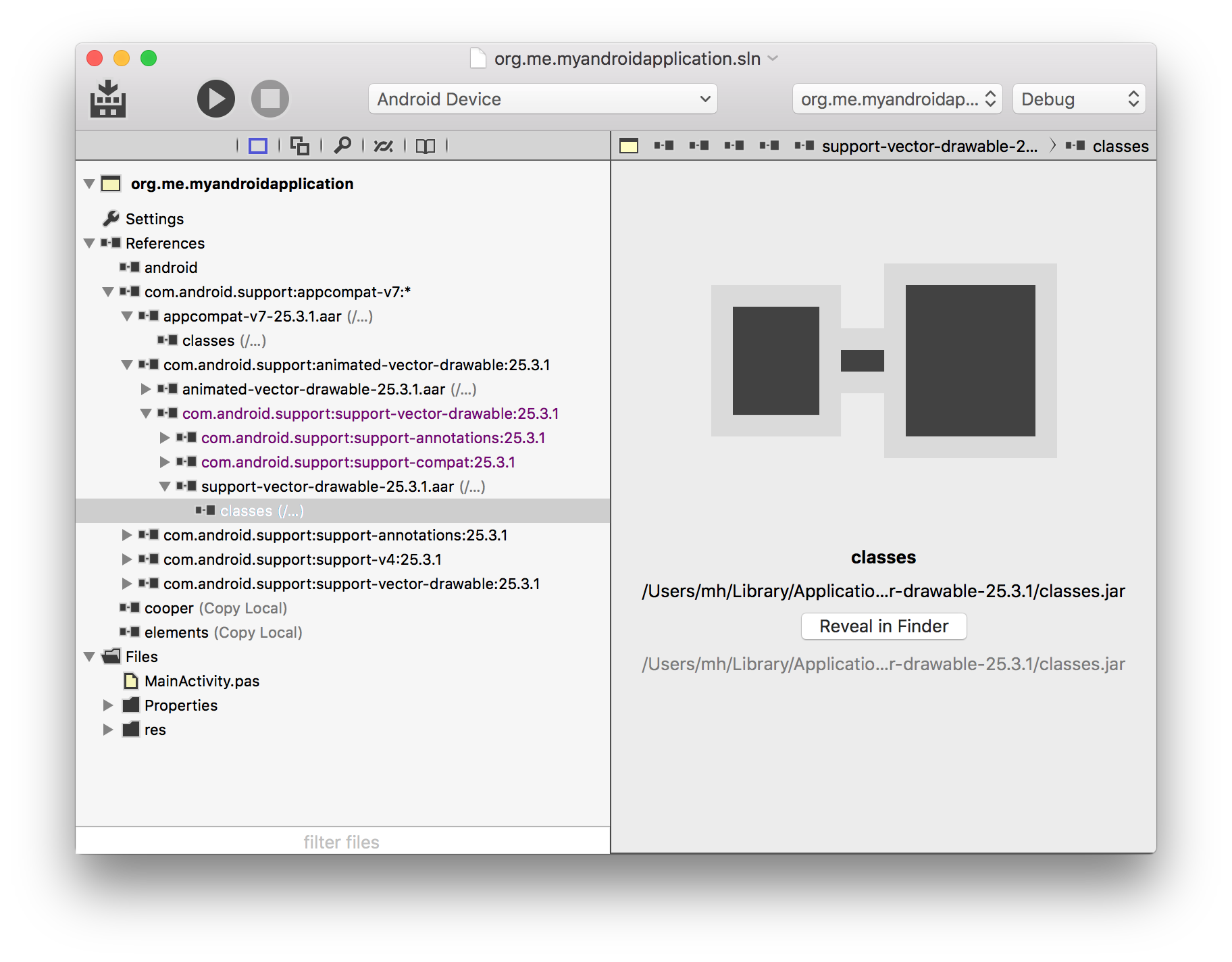

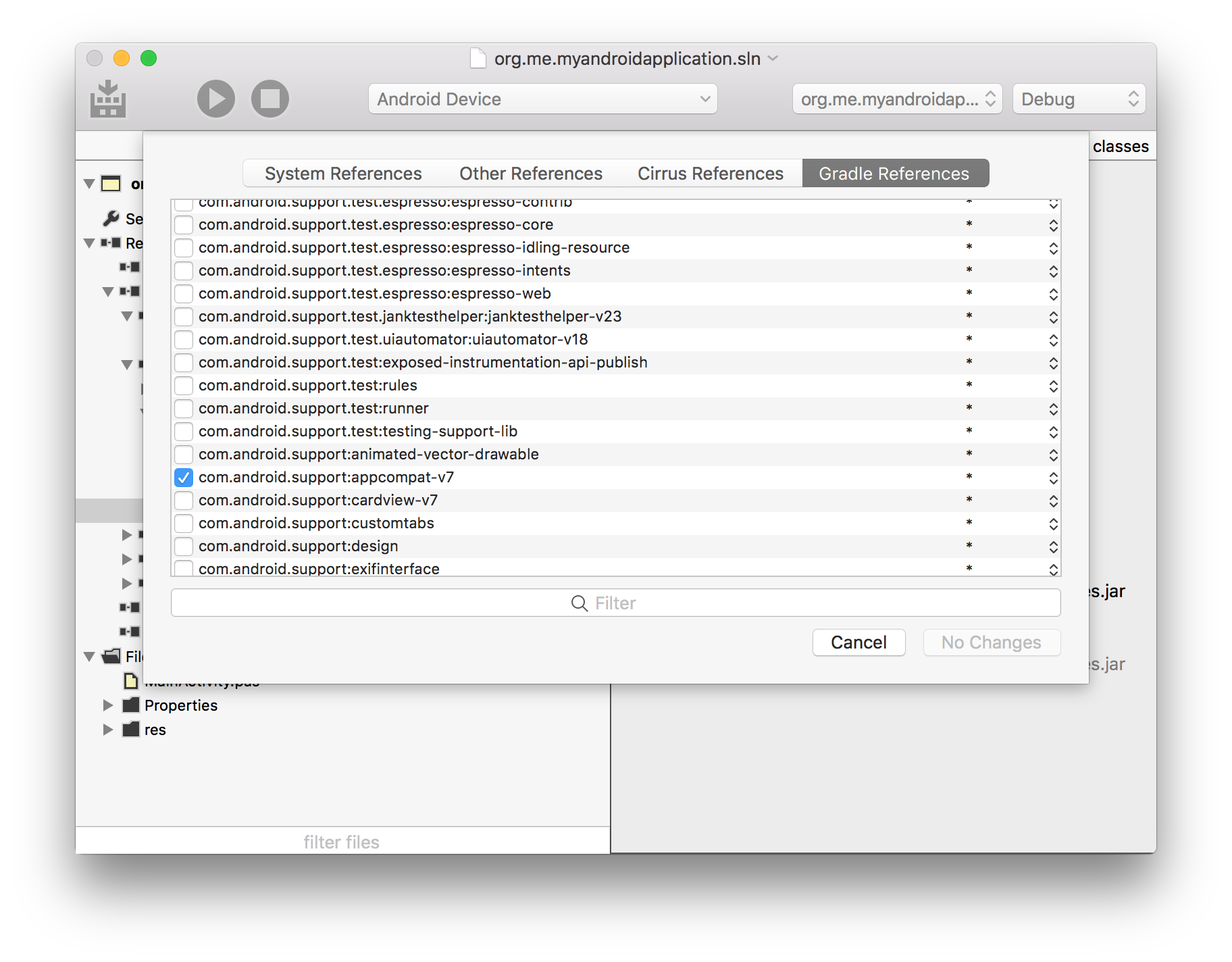

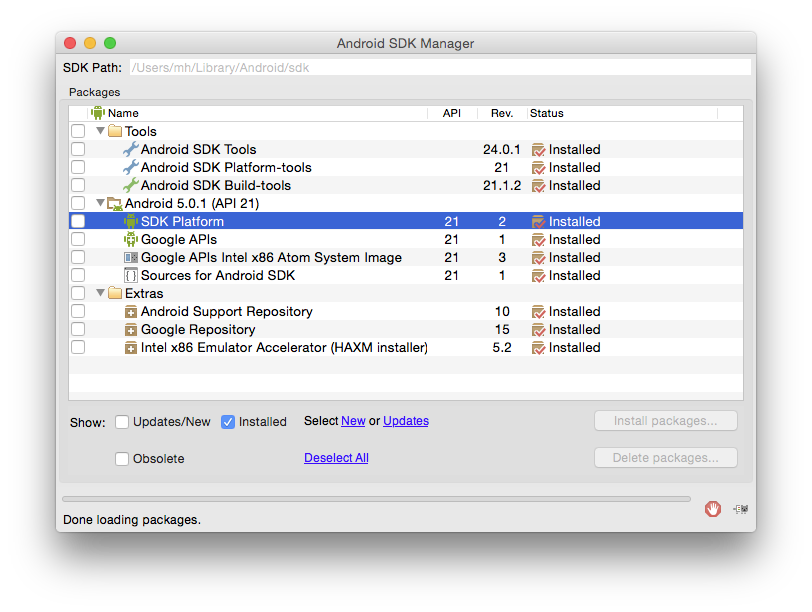

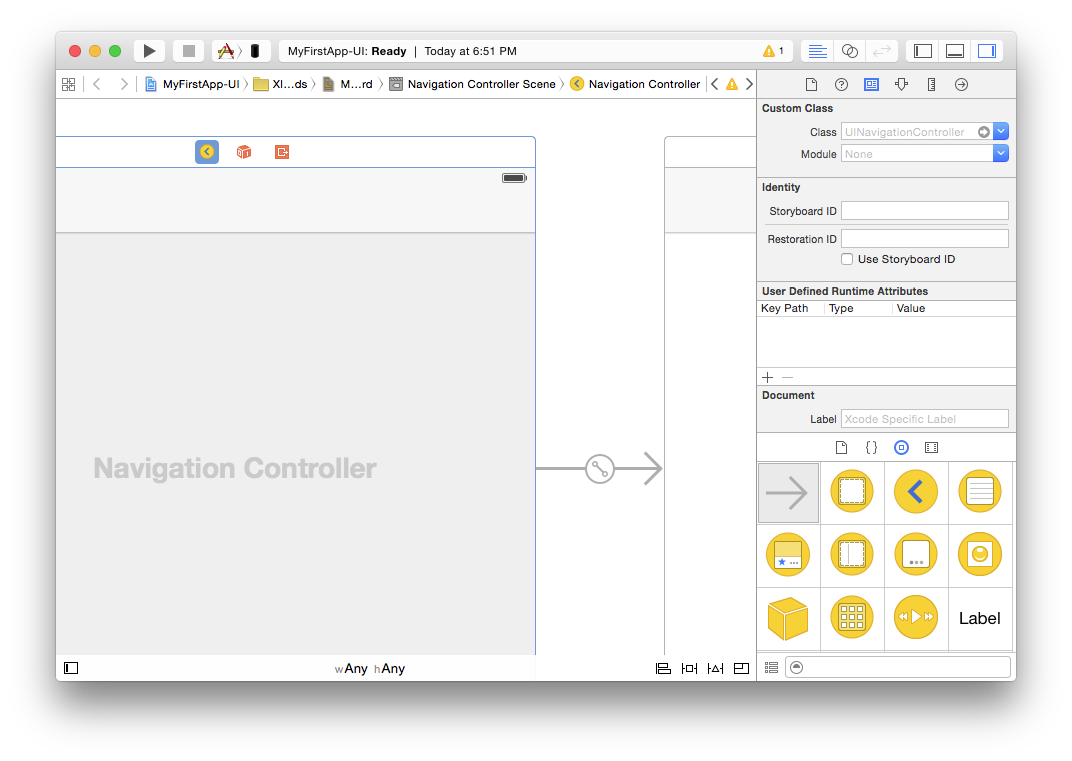

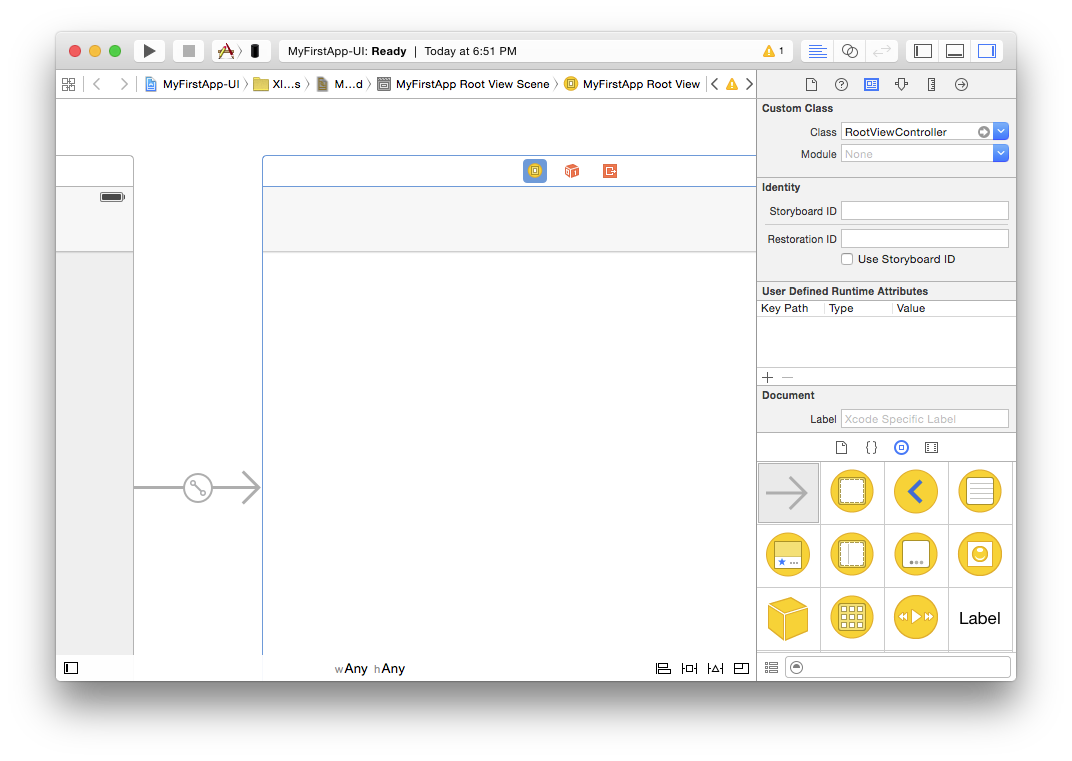

Platforms dives into topics specific to the individual platforms – .NET, Cocoa, Android, Java, WebAssembly and native Windows and Linux.

-

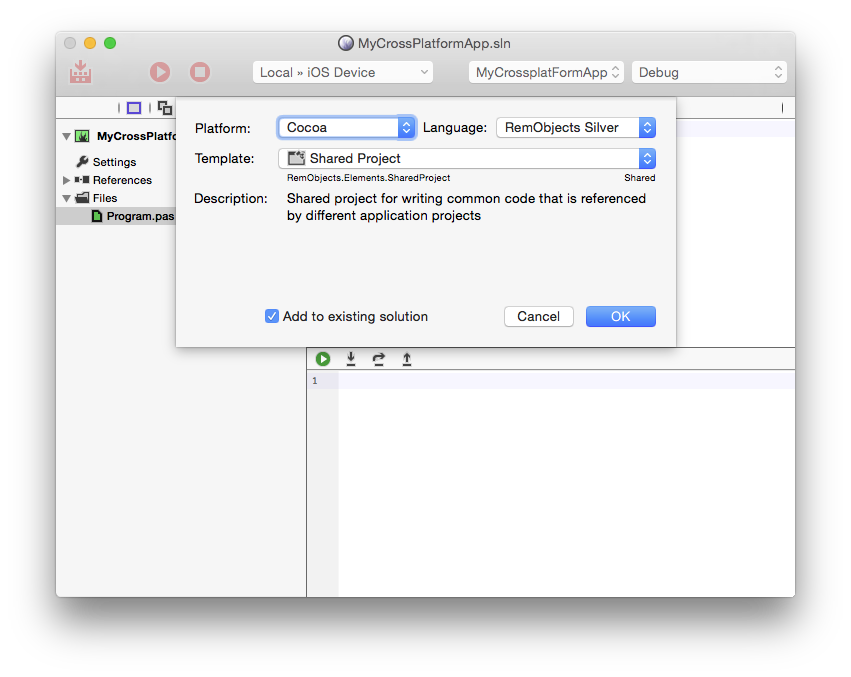

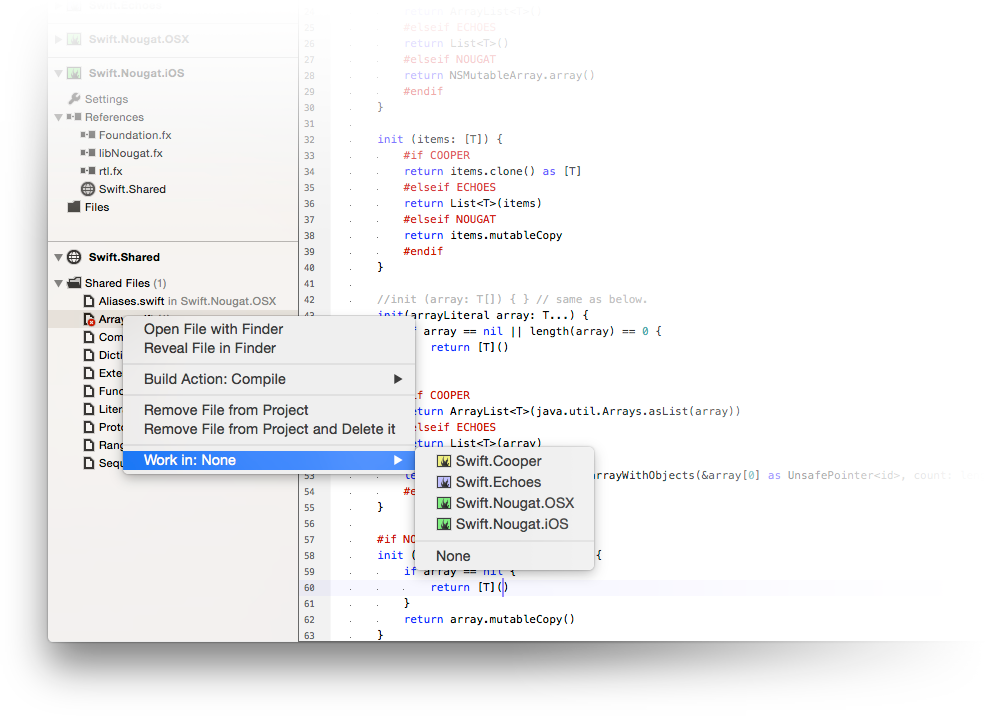

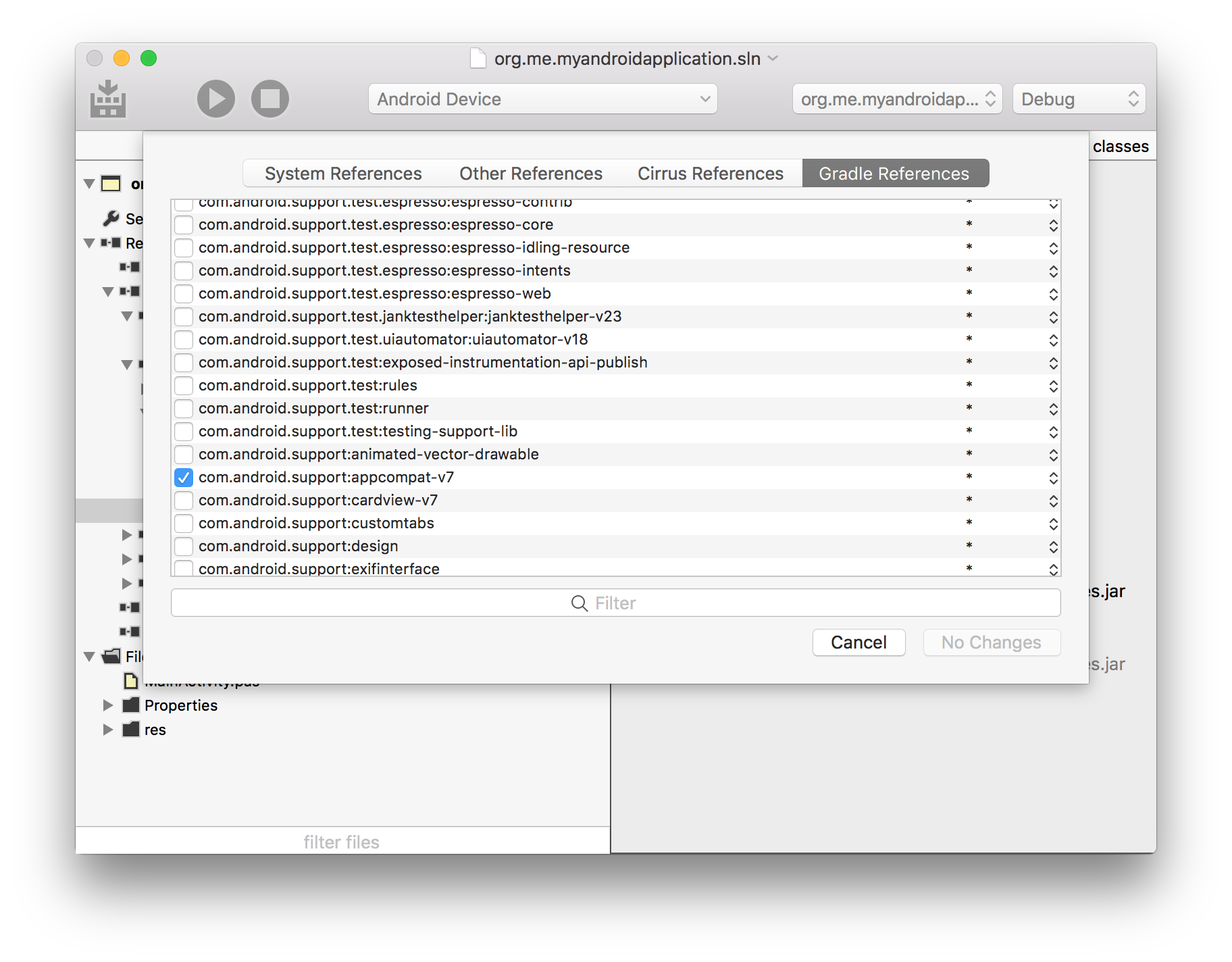

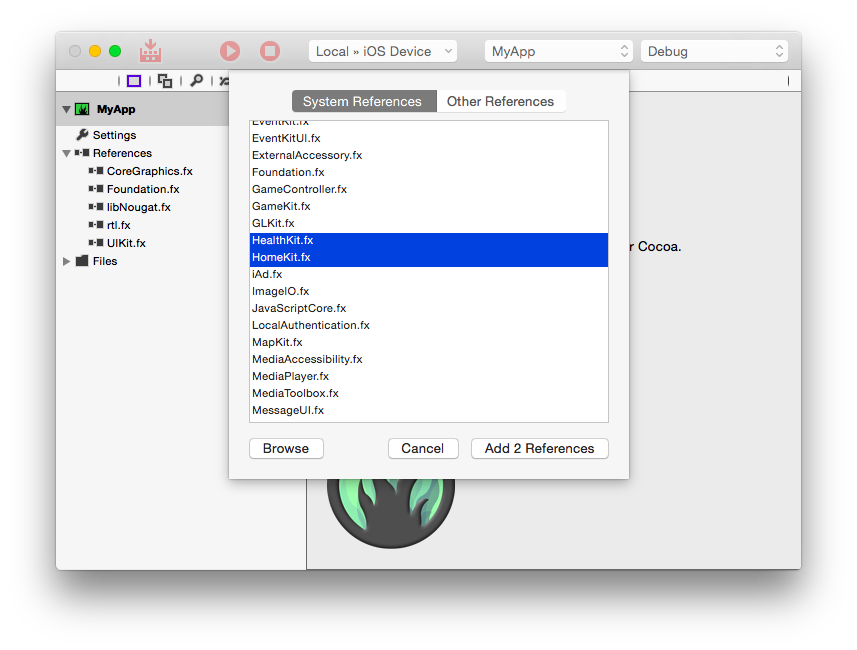

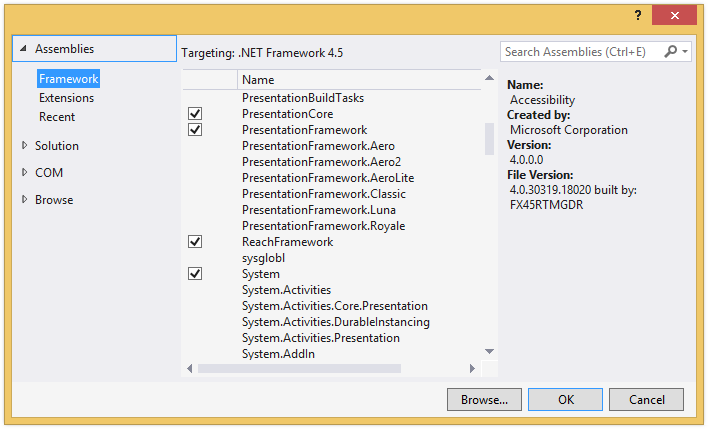

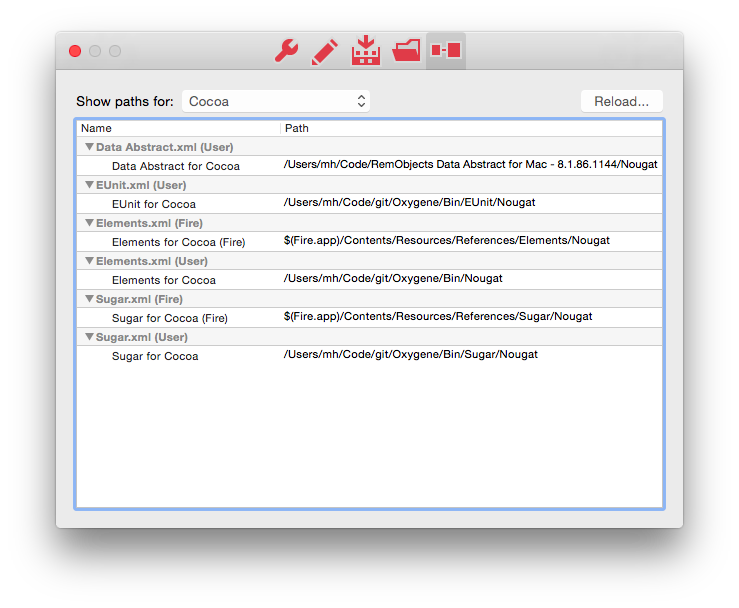

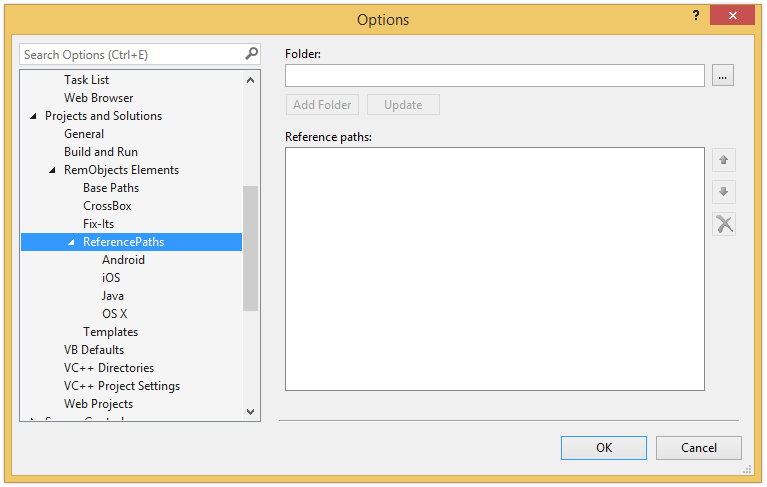

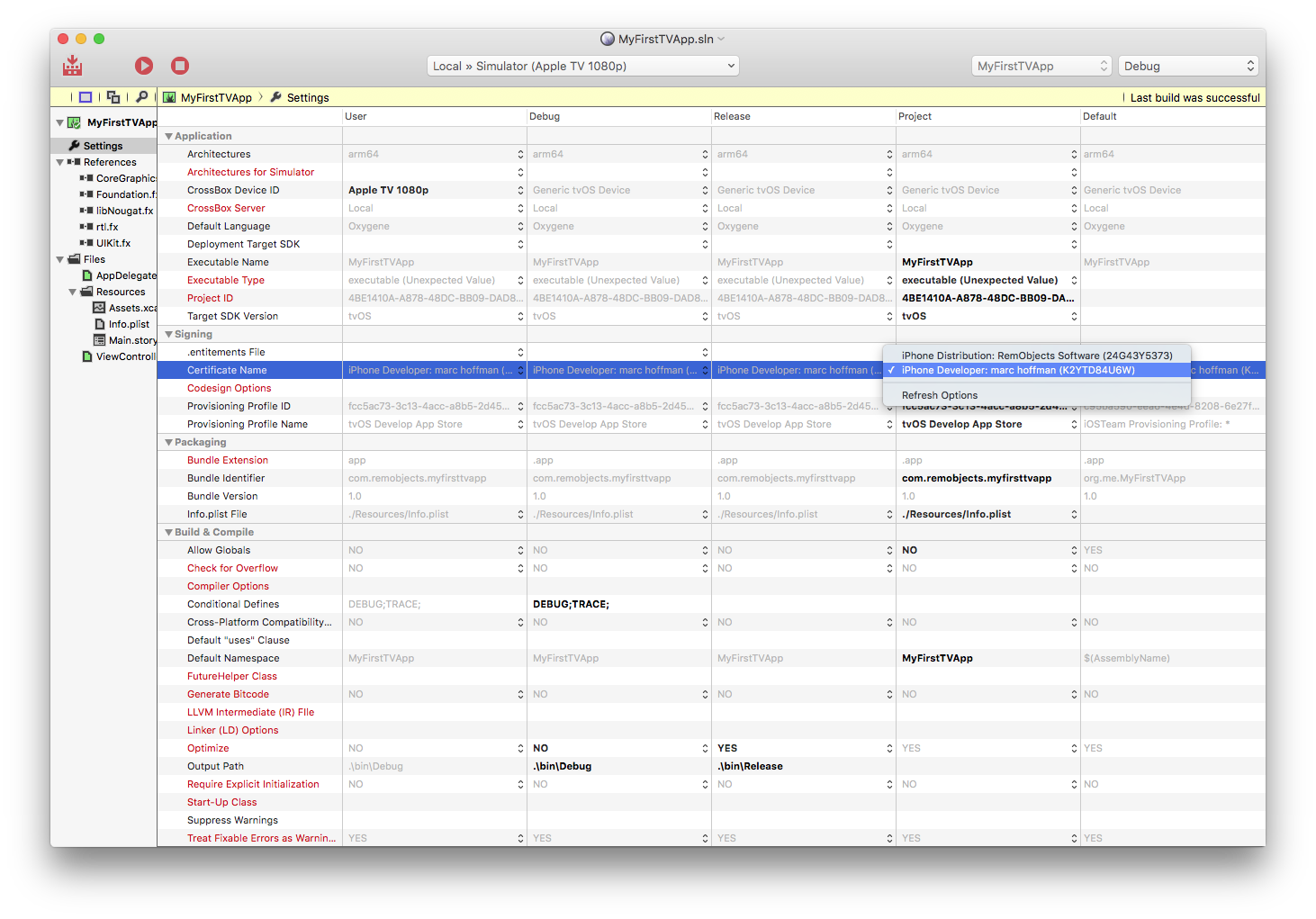

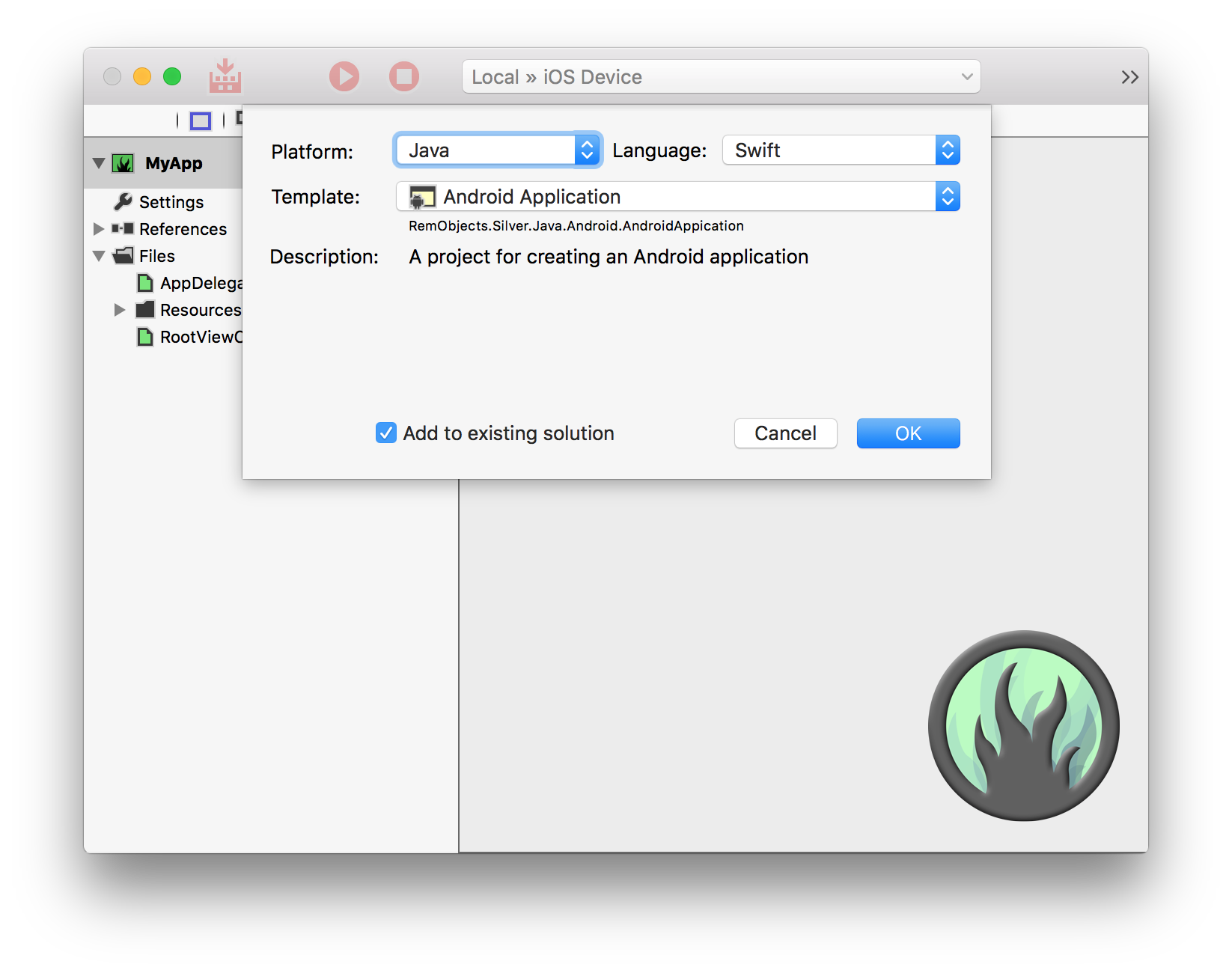

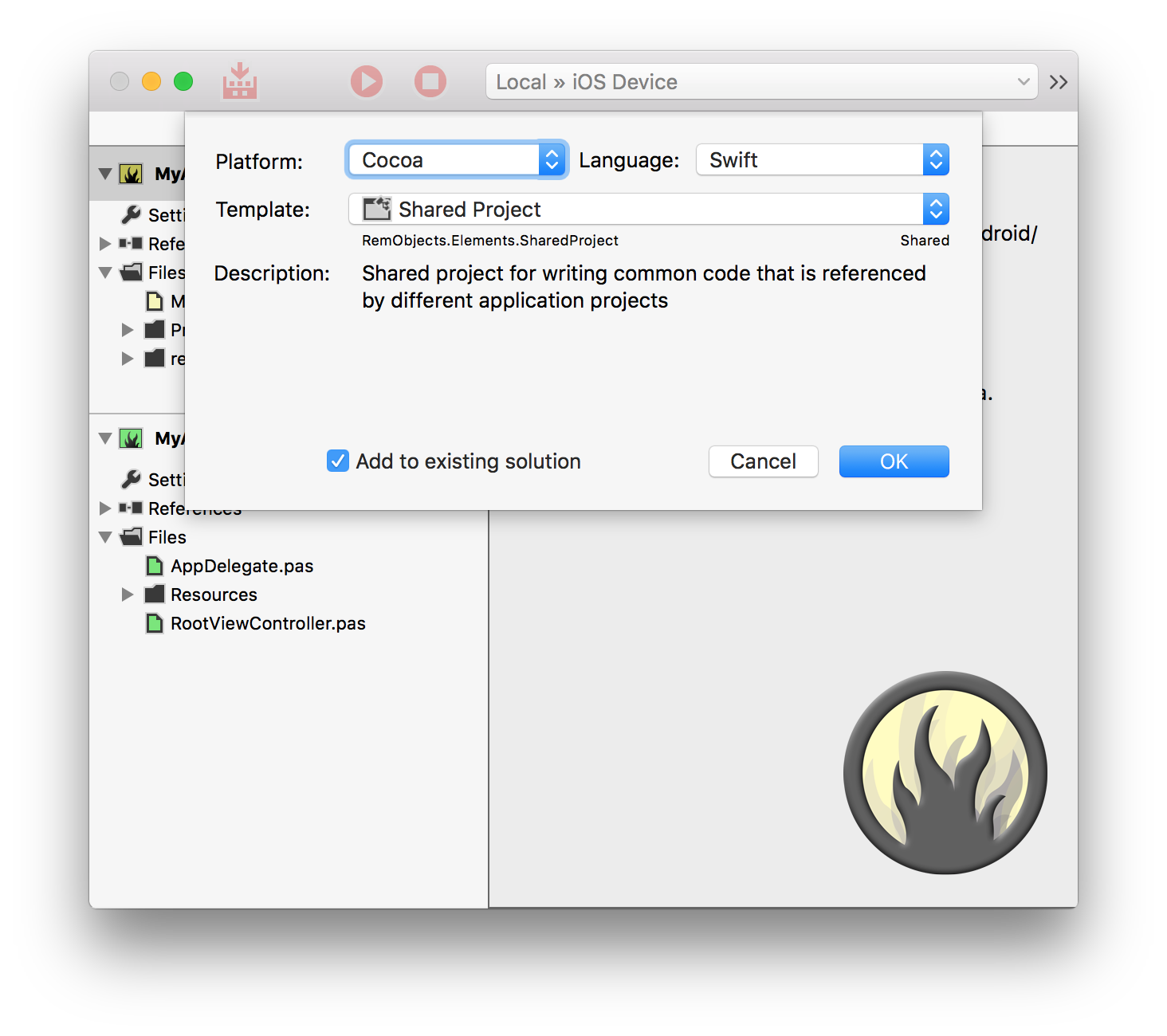

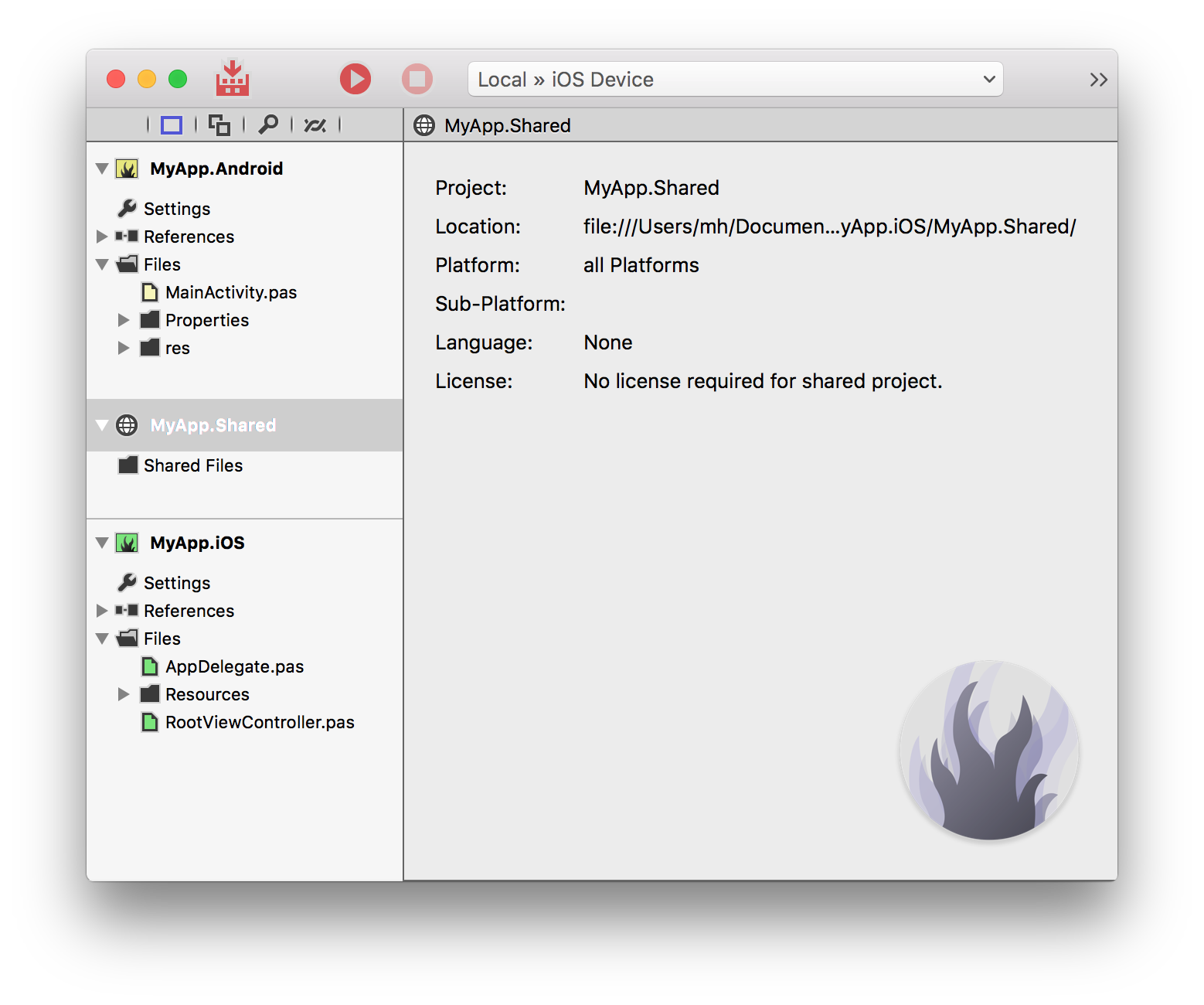

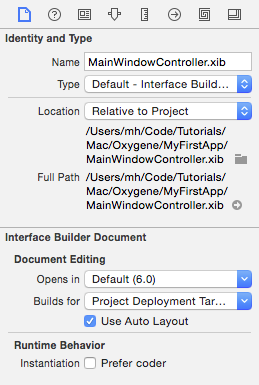

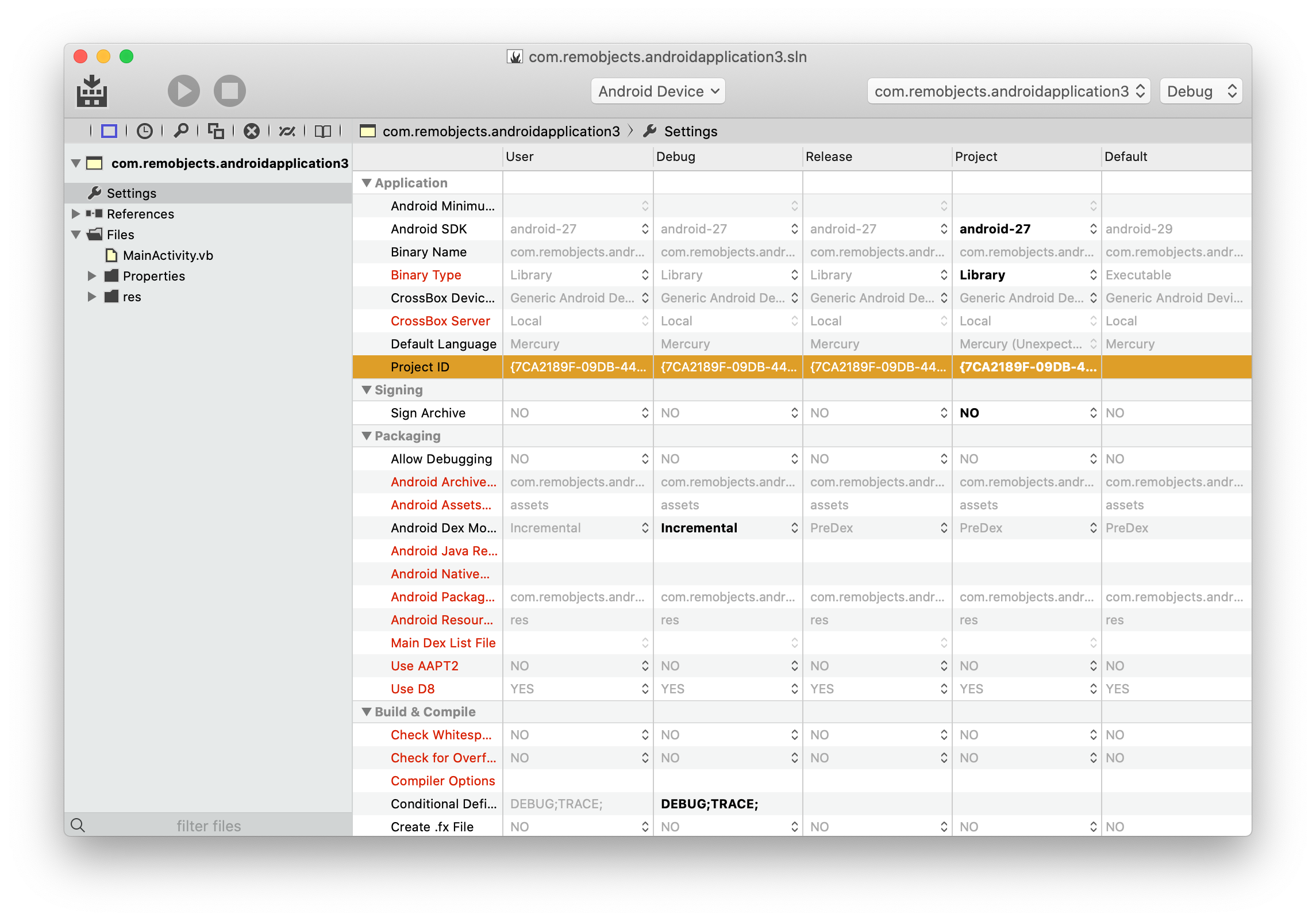

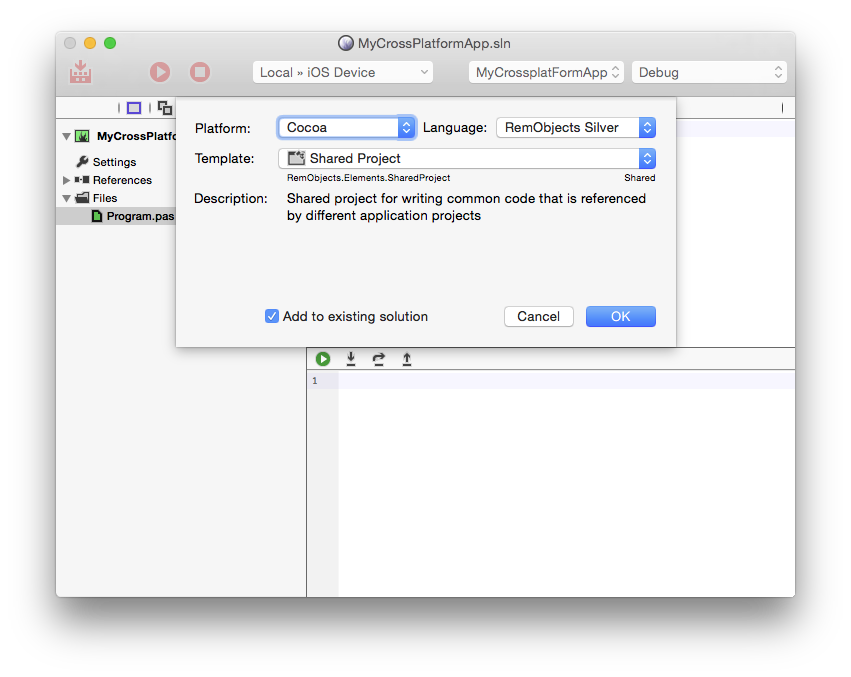

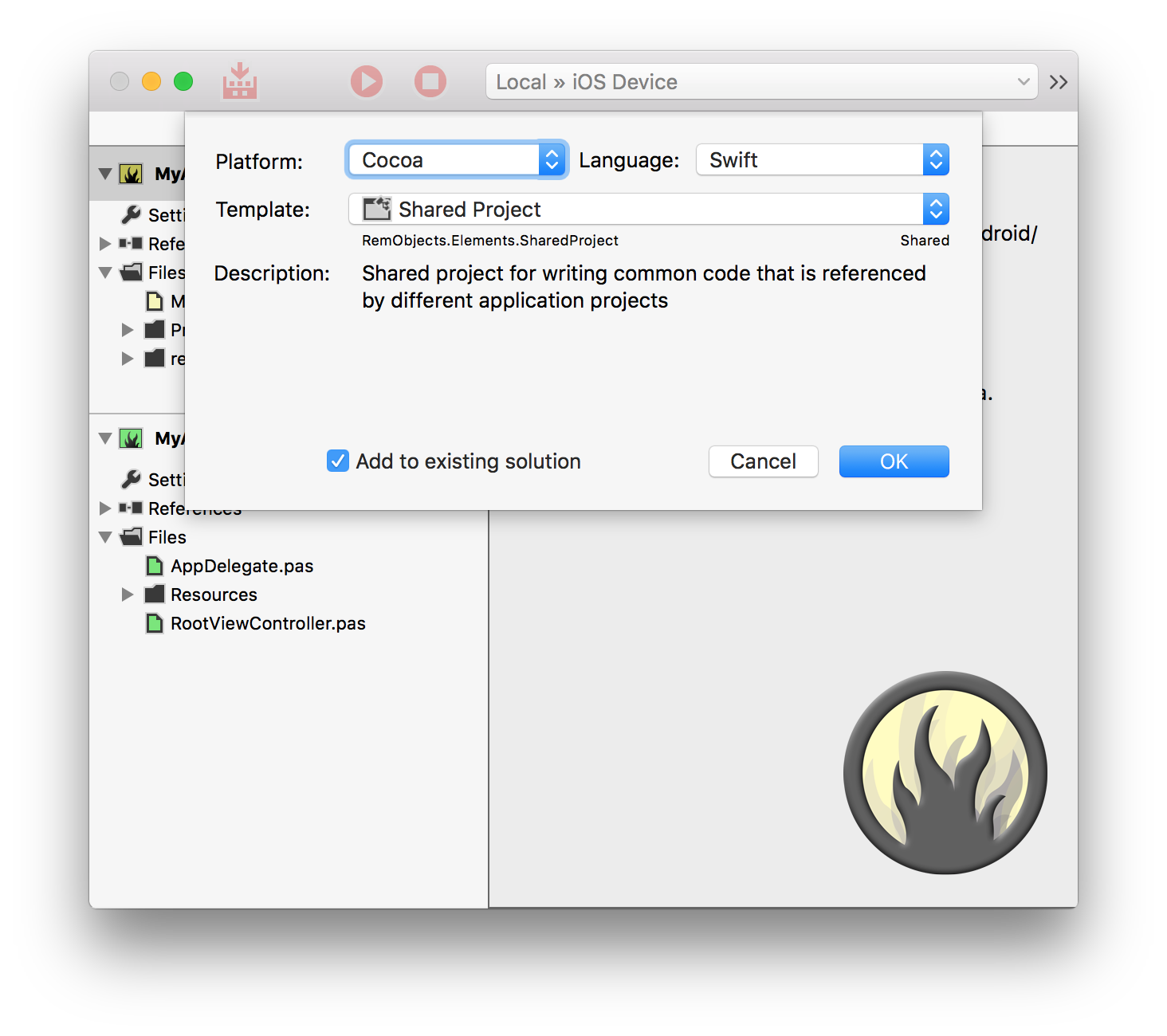

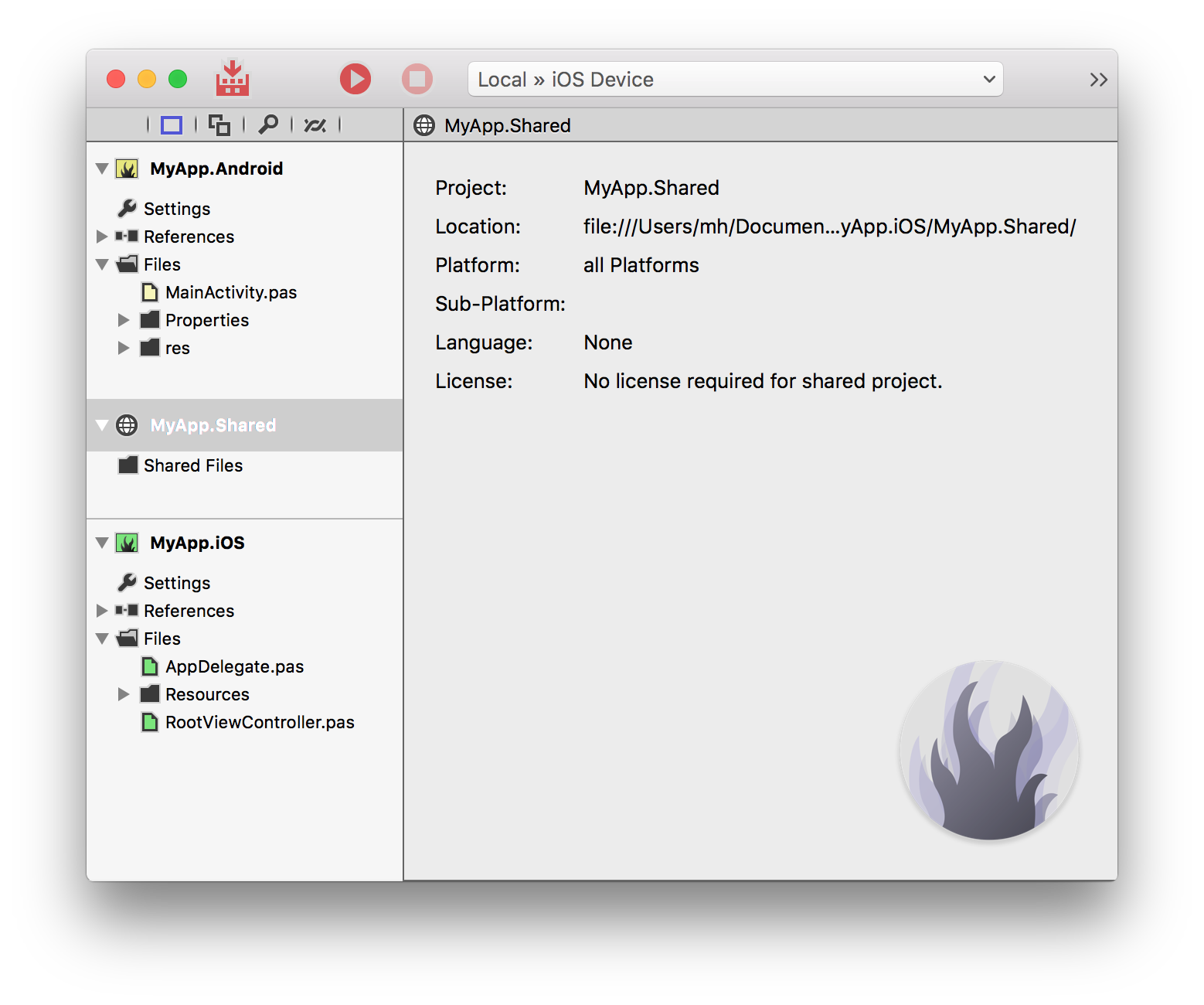

Projects talks about advanced topics for working with projects, from Project Settings over References to Shared Projects.

-

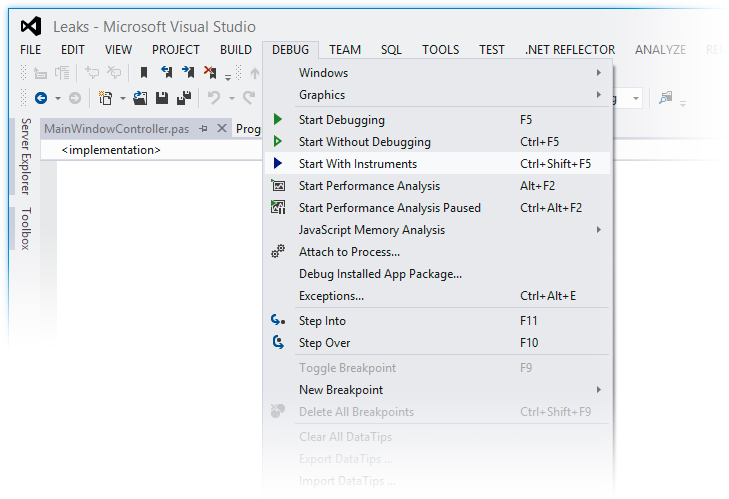

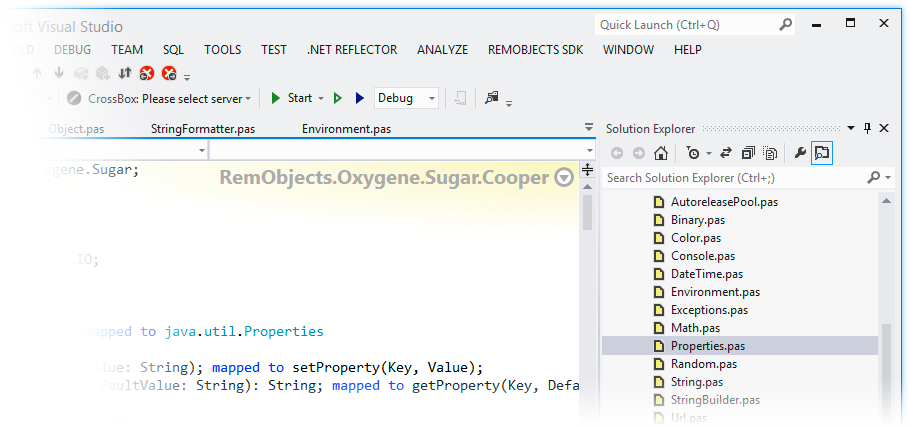

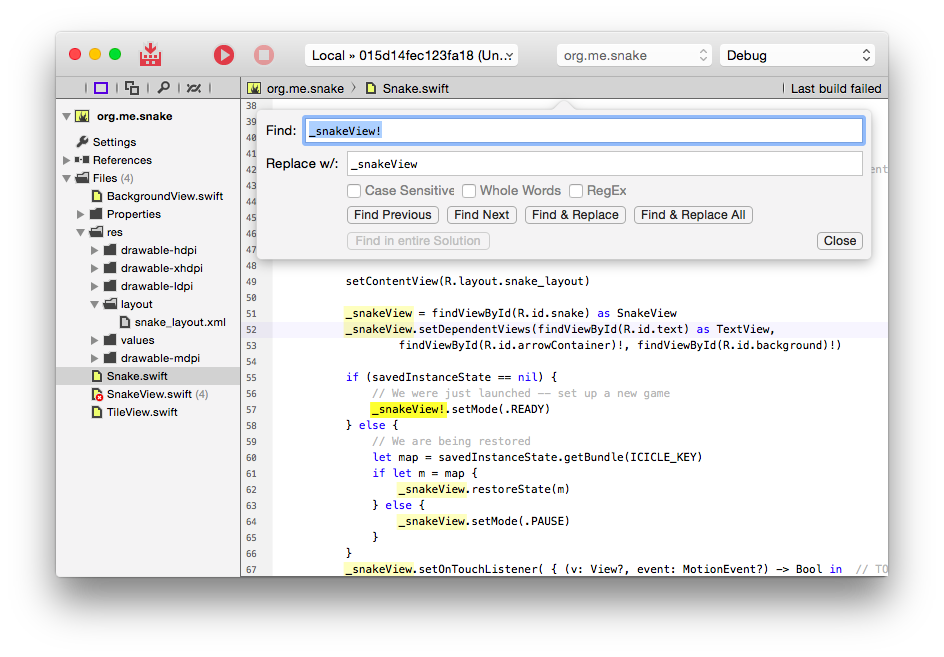

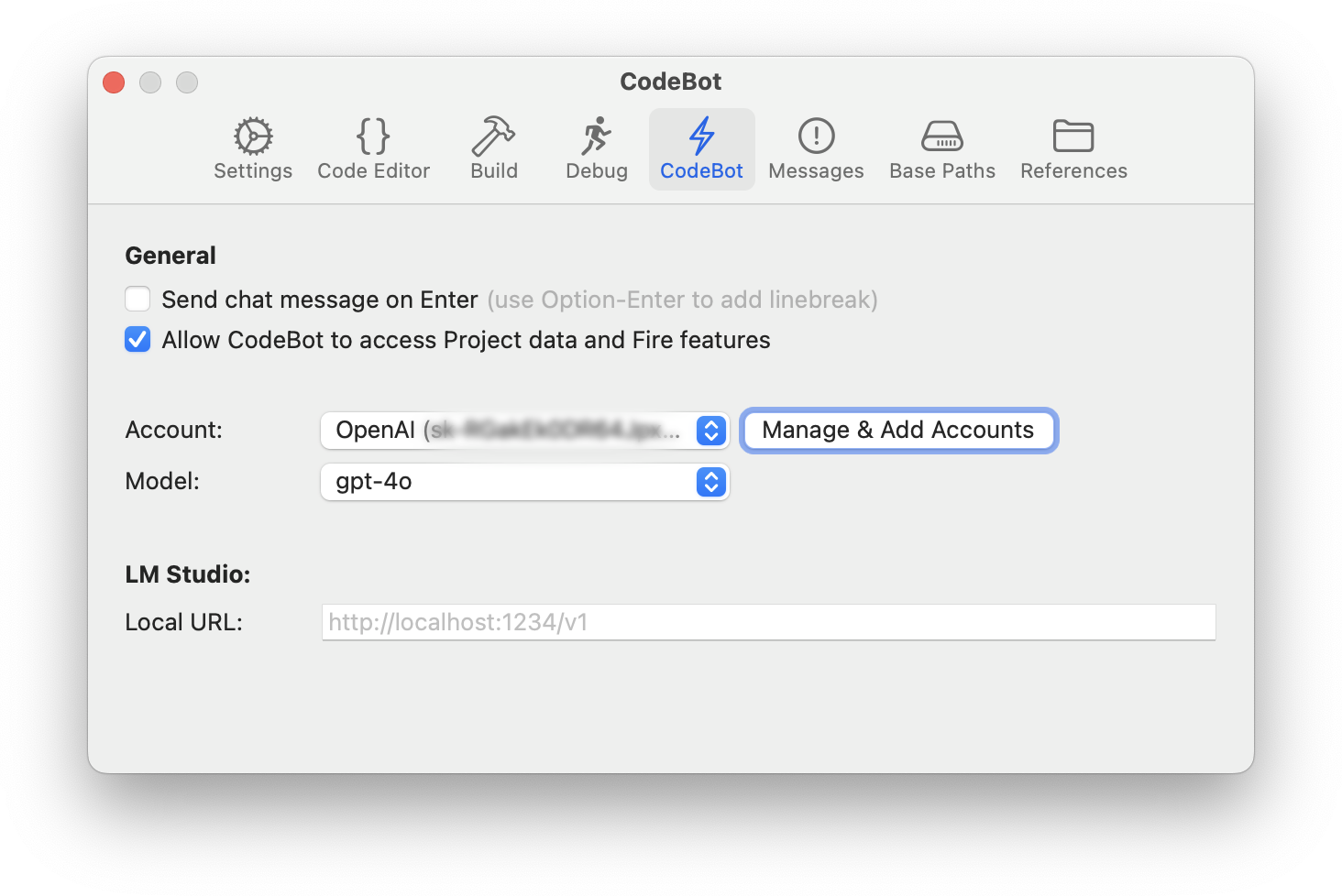

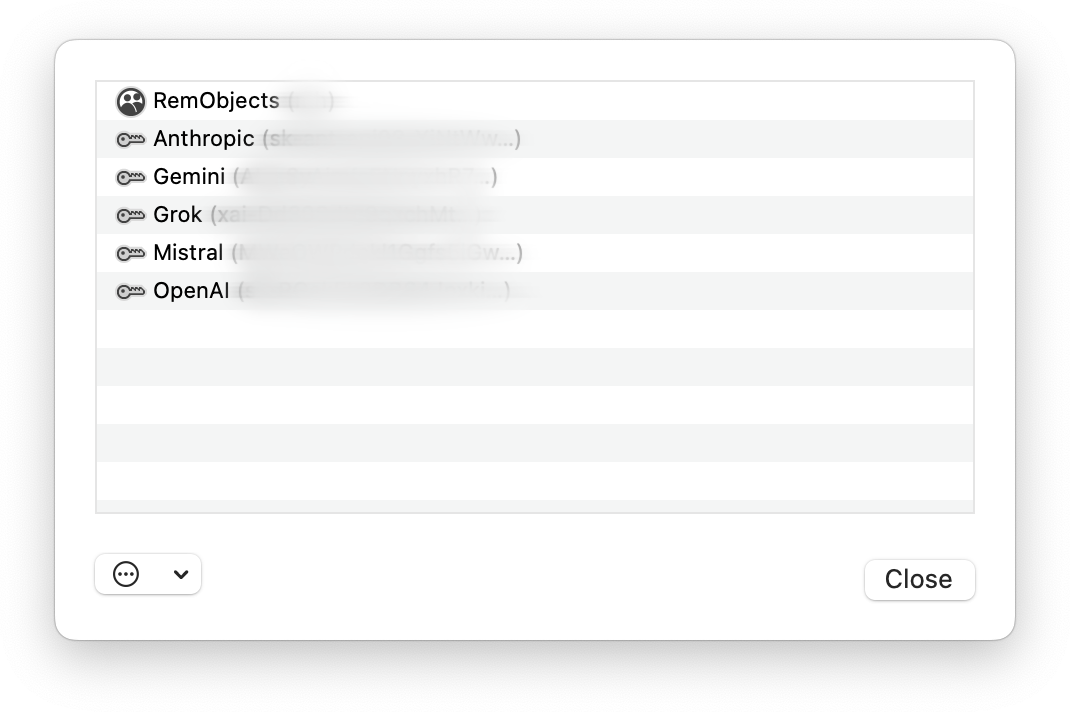

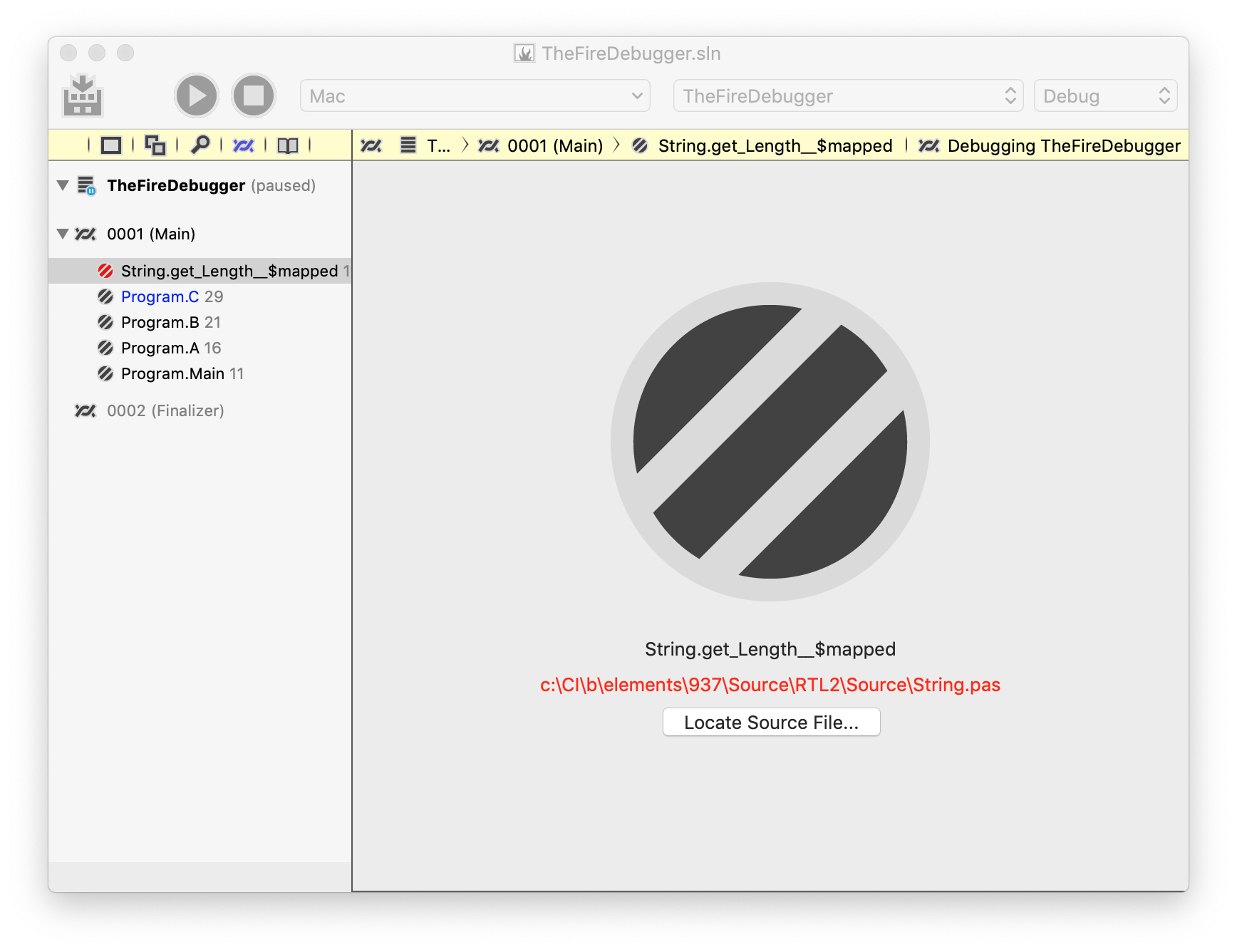

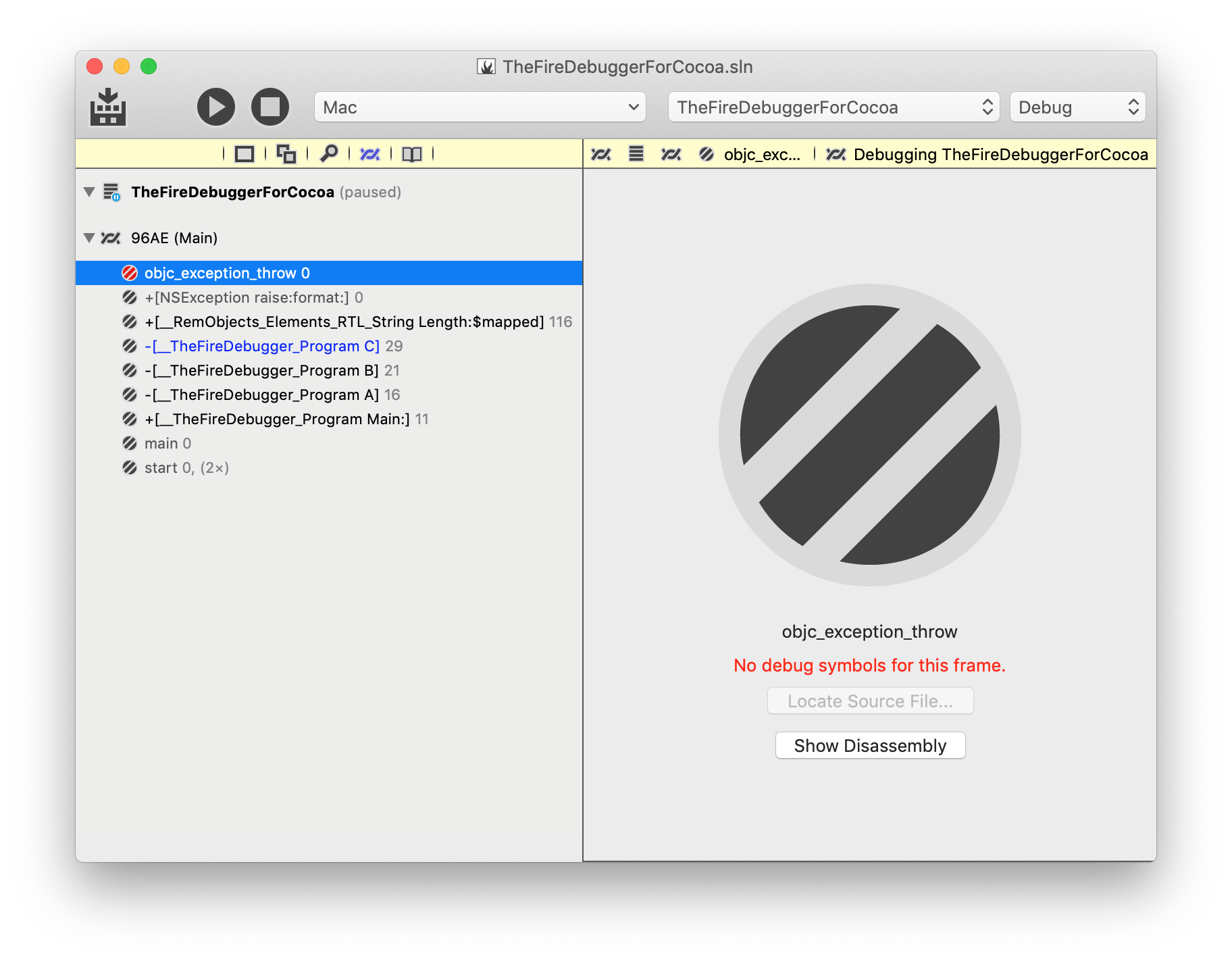

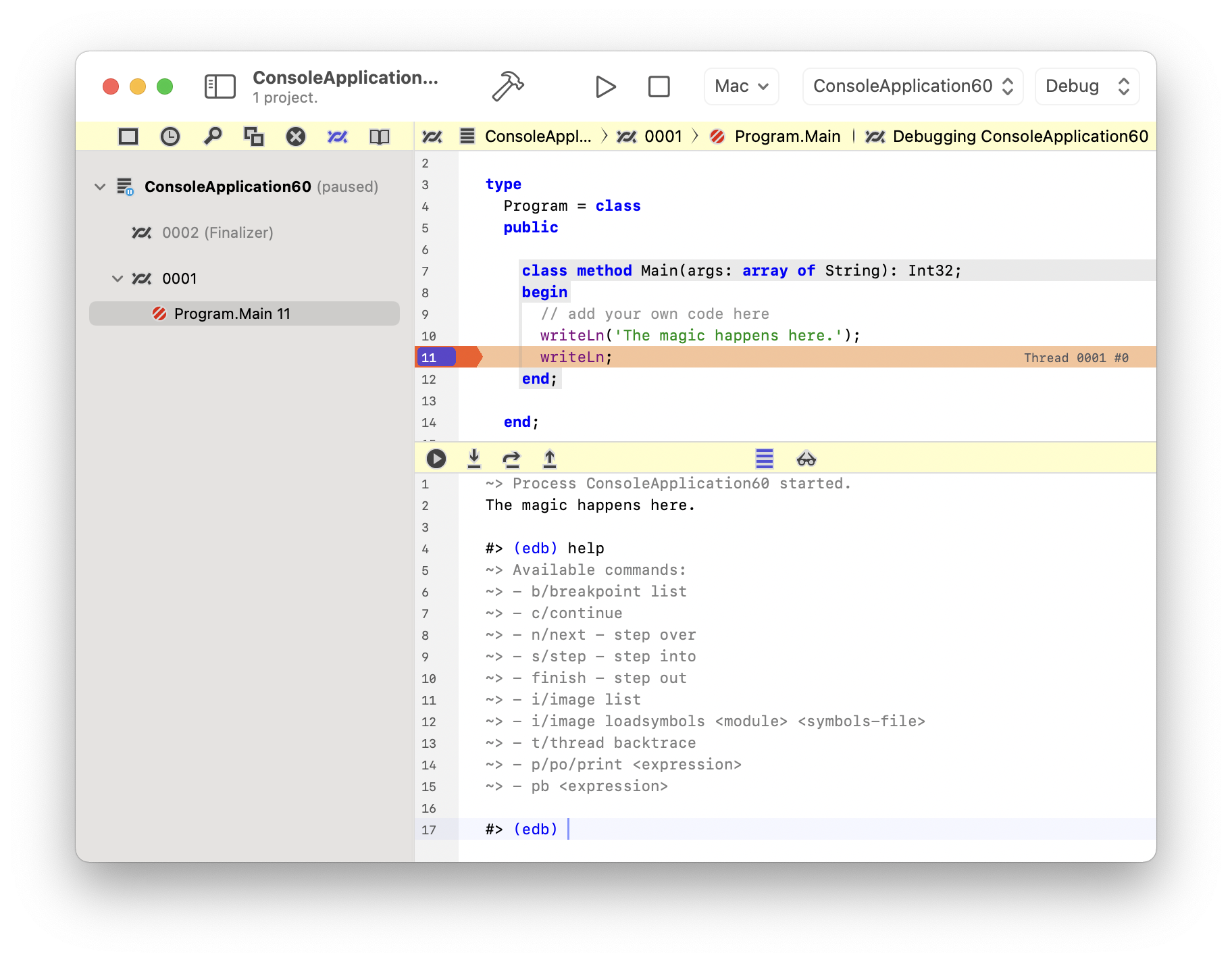

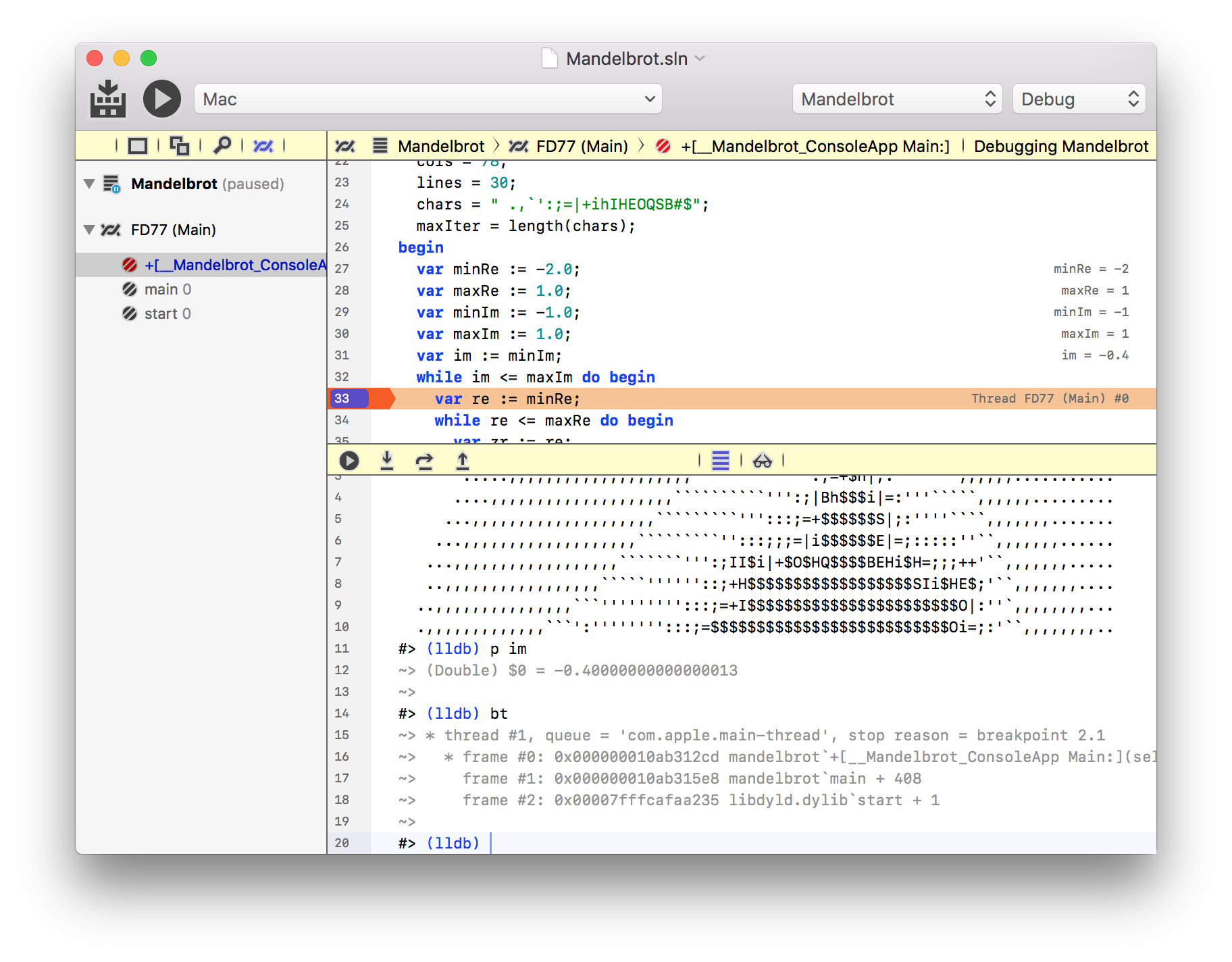

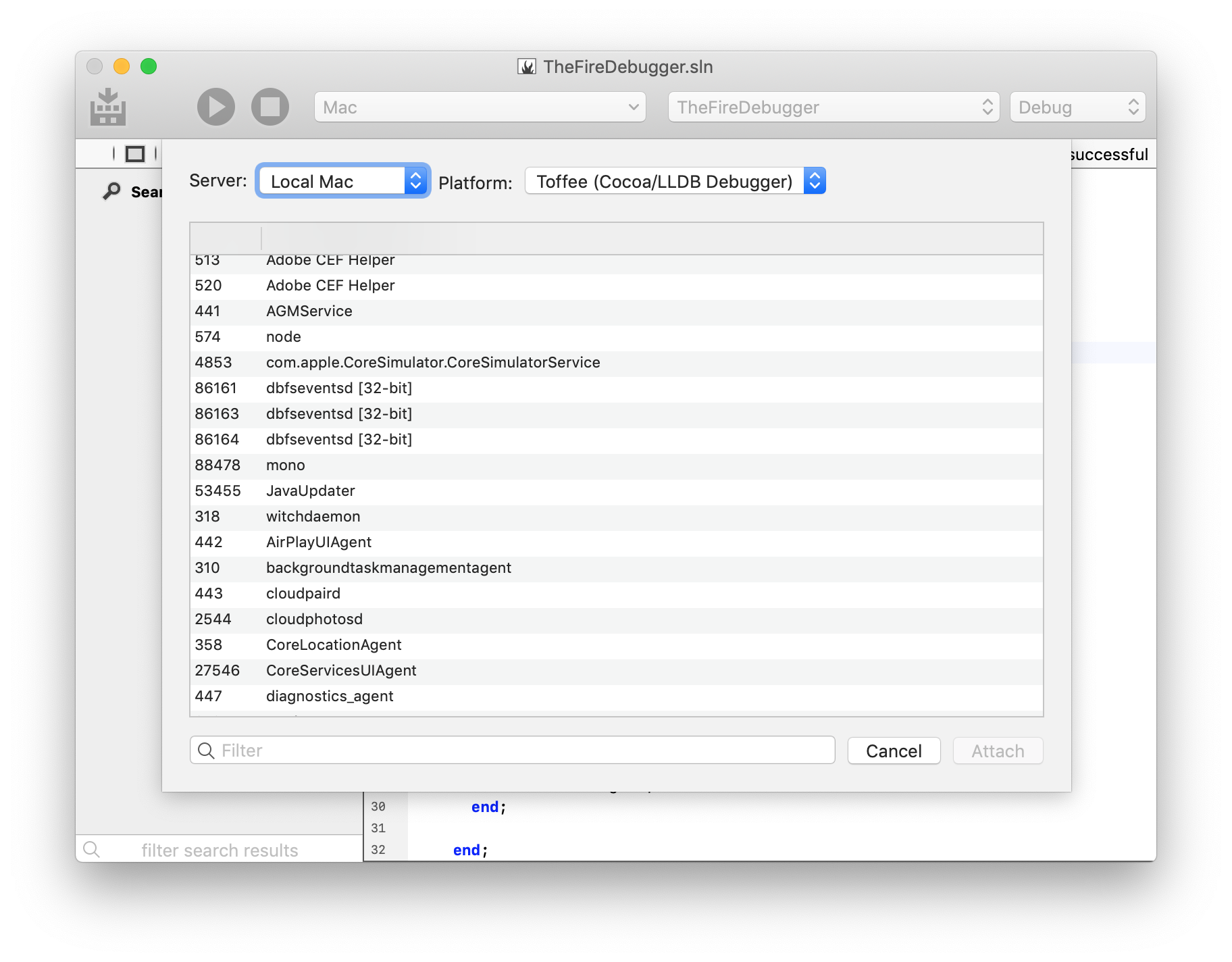

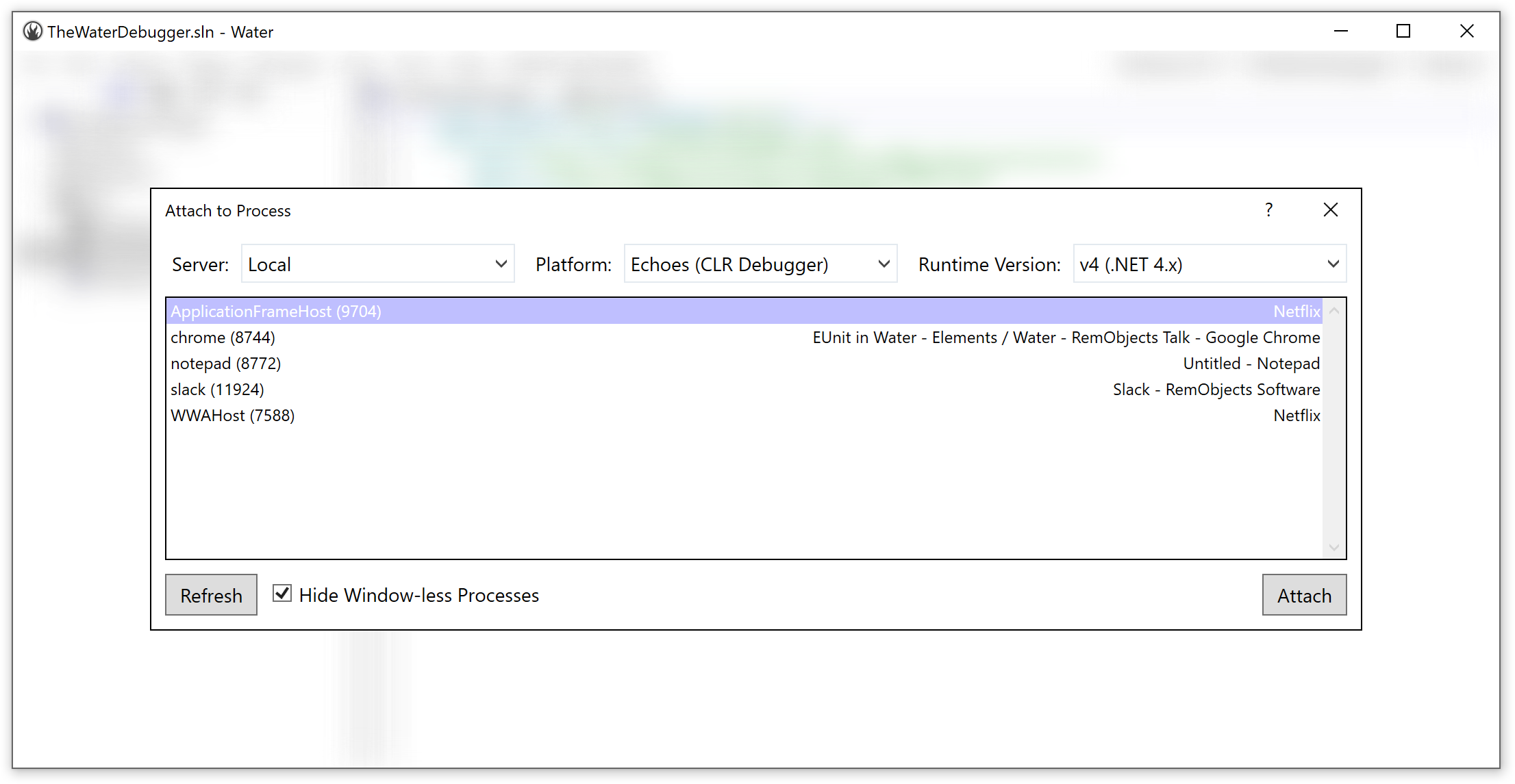

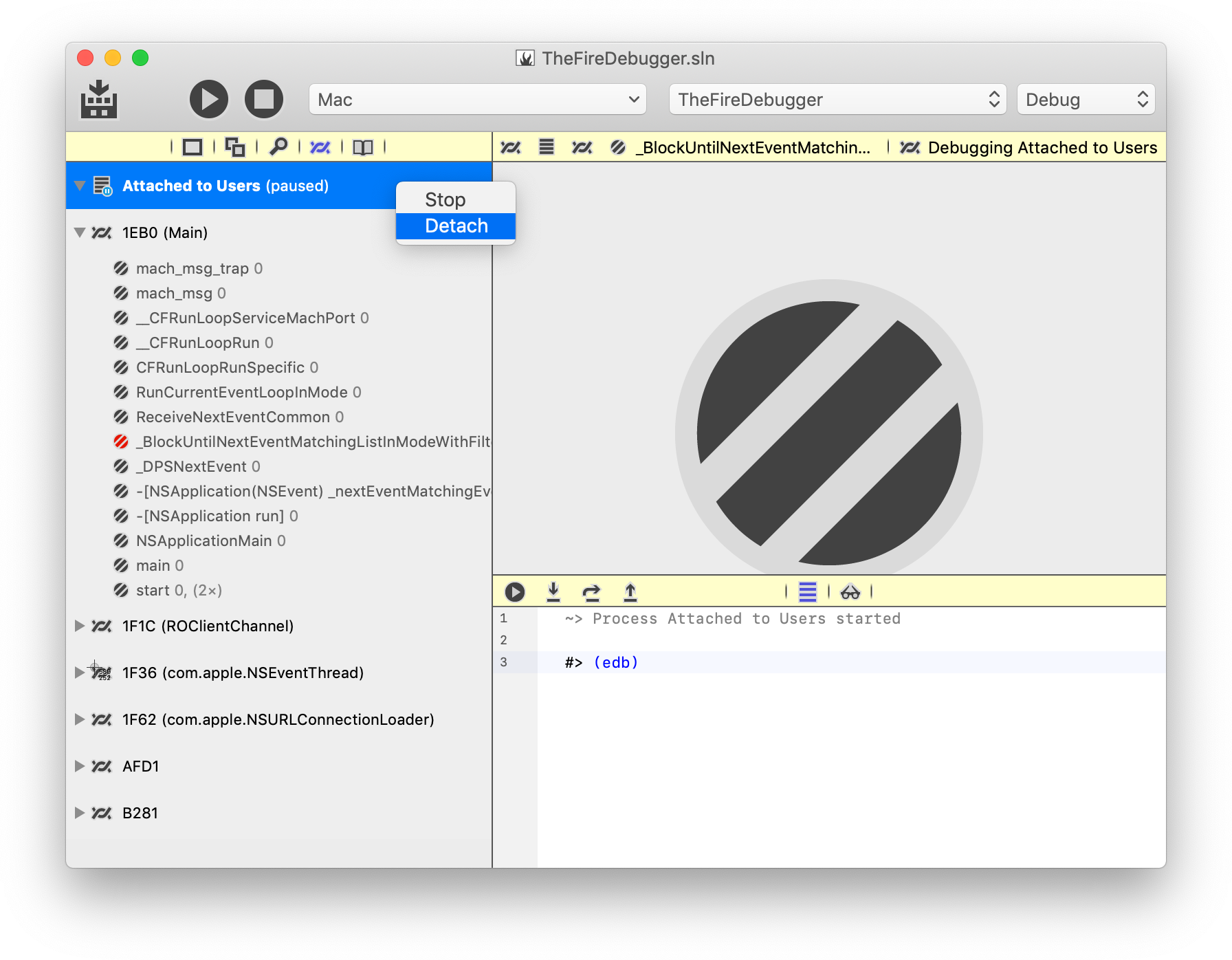

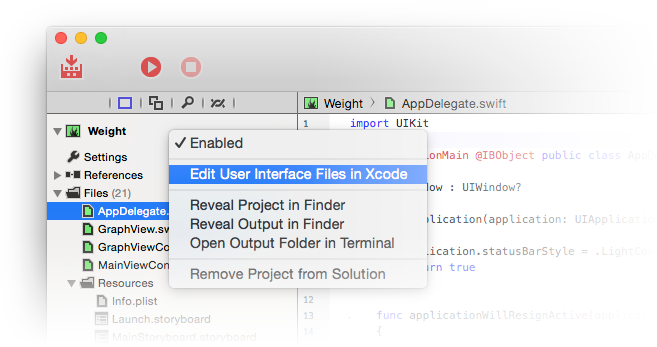

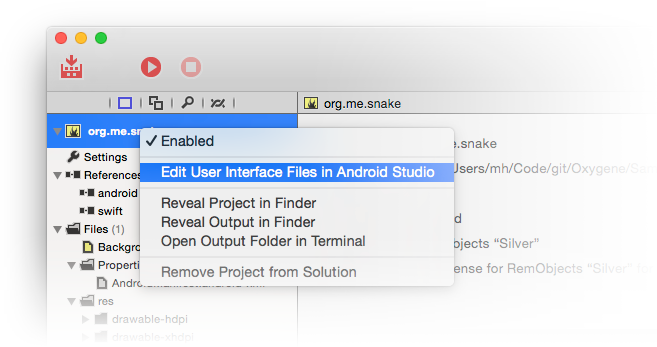

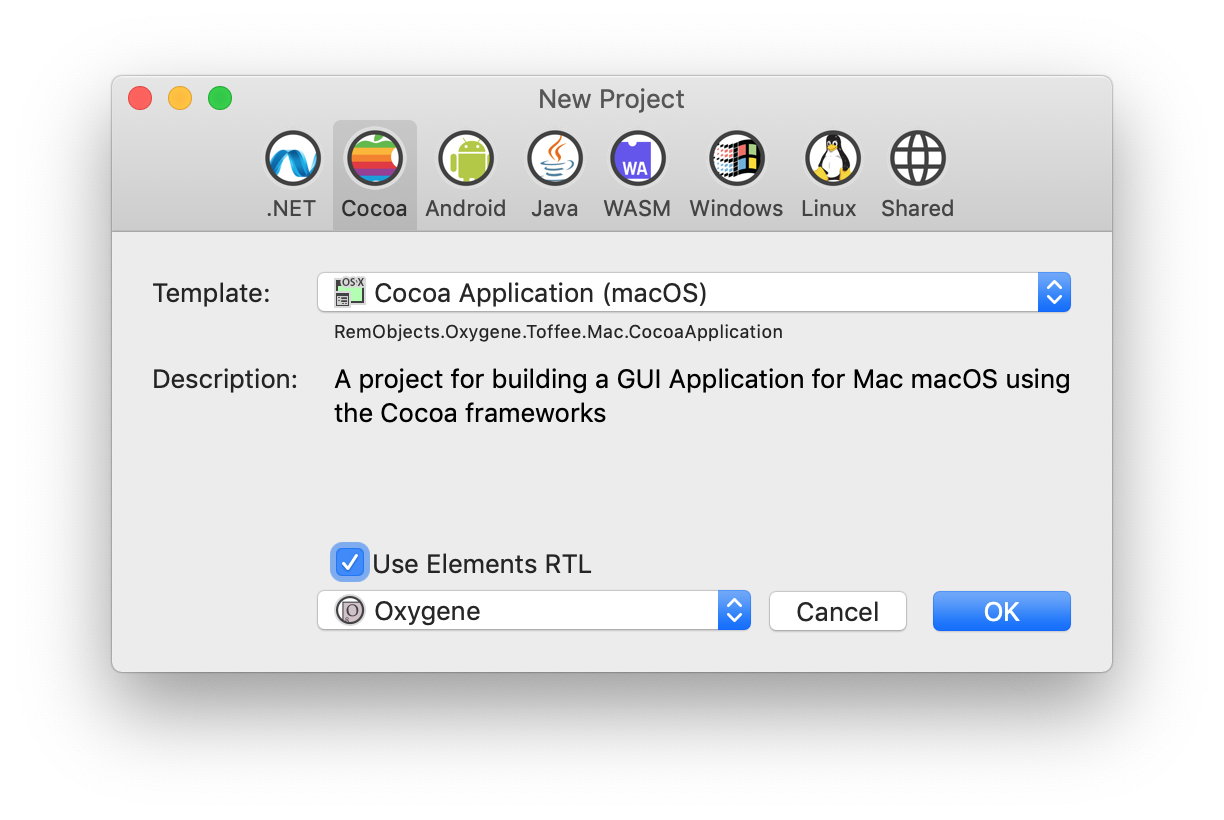

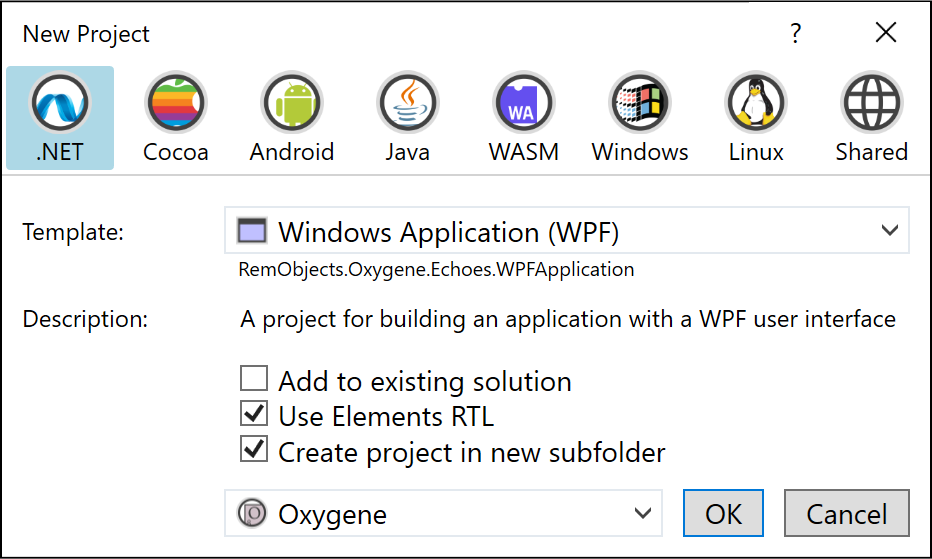

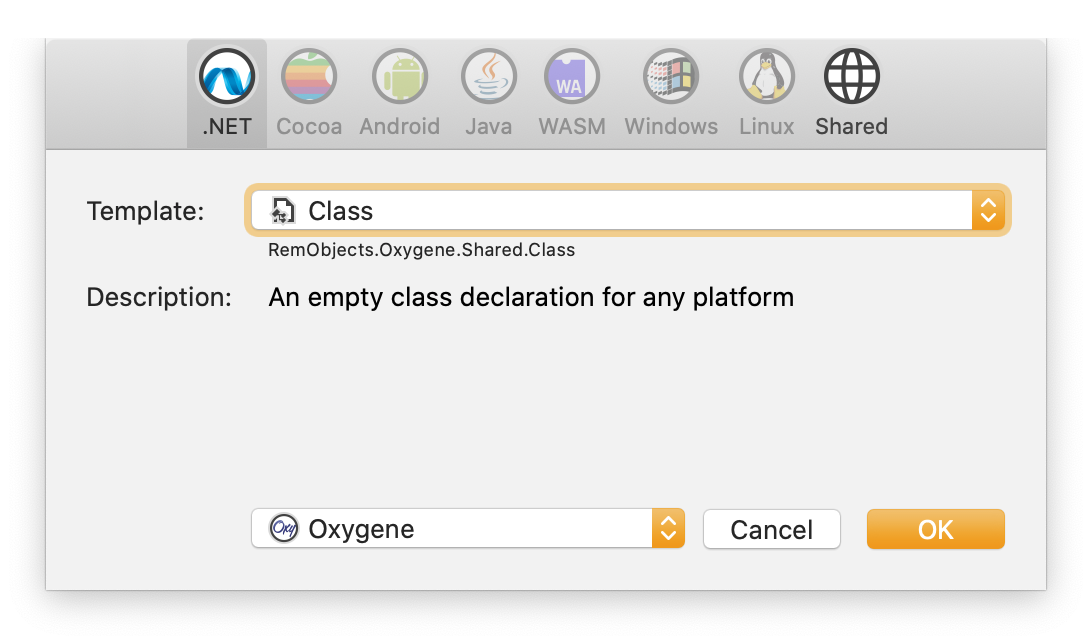

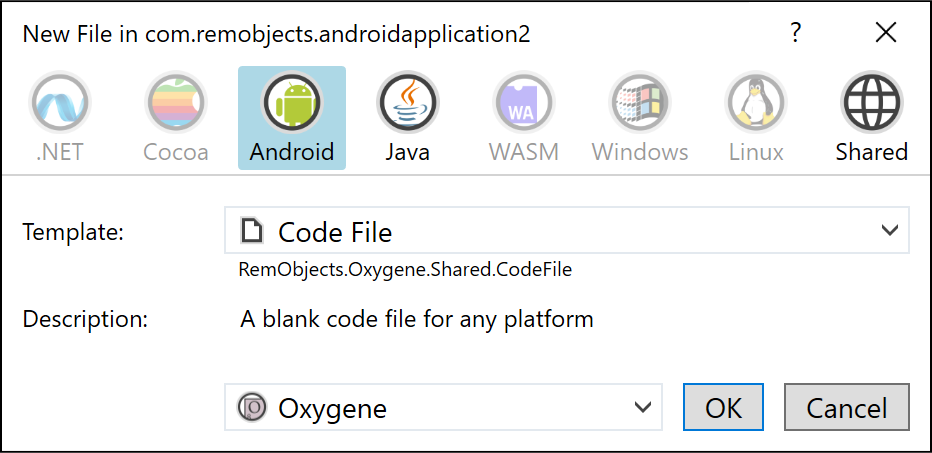

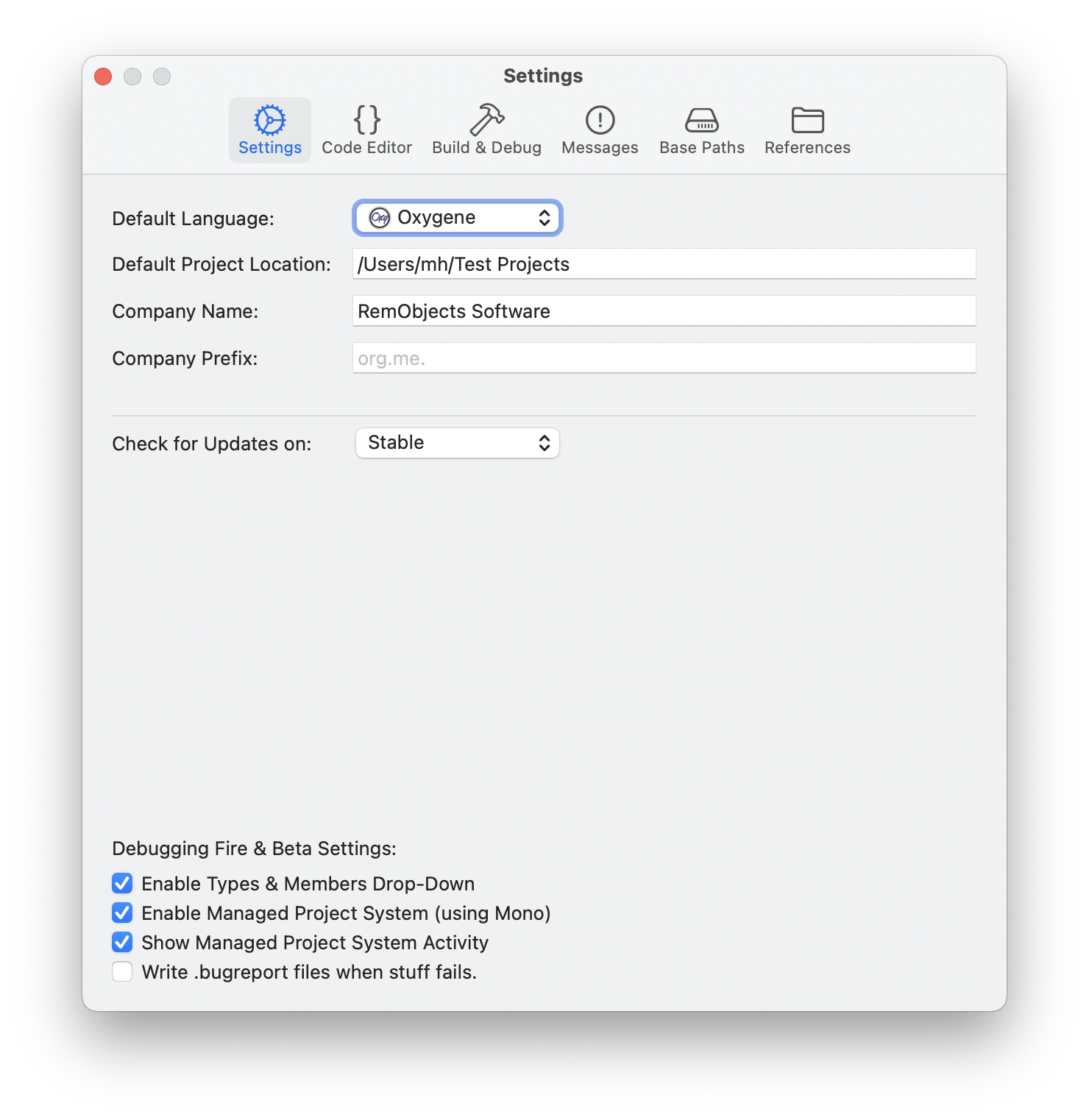

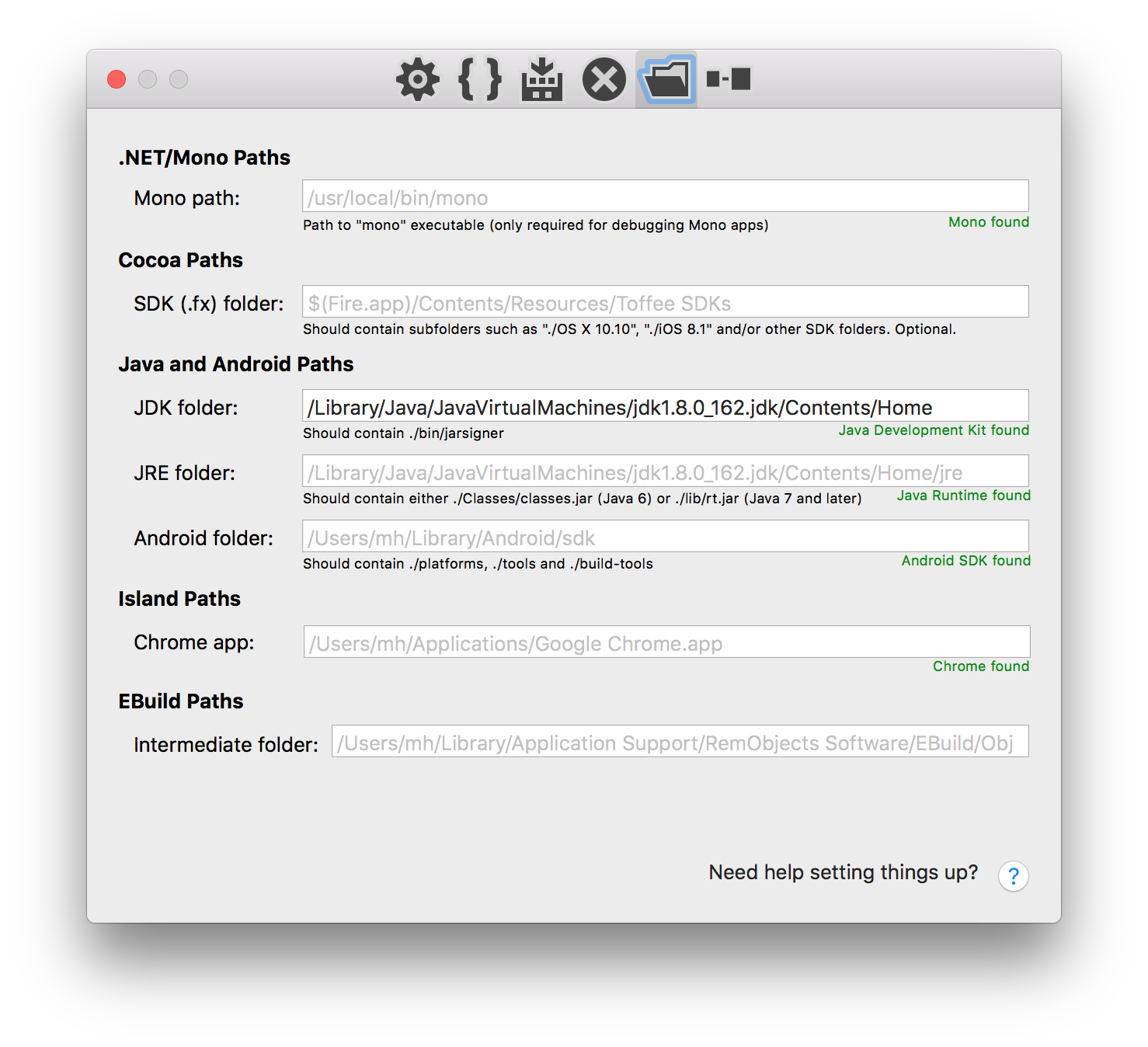

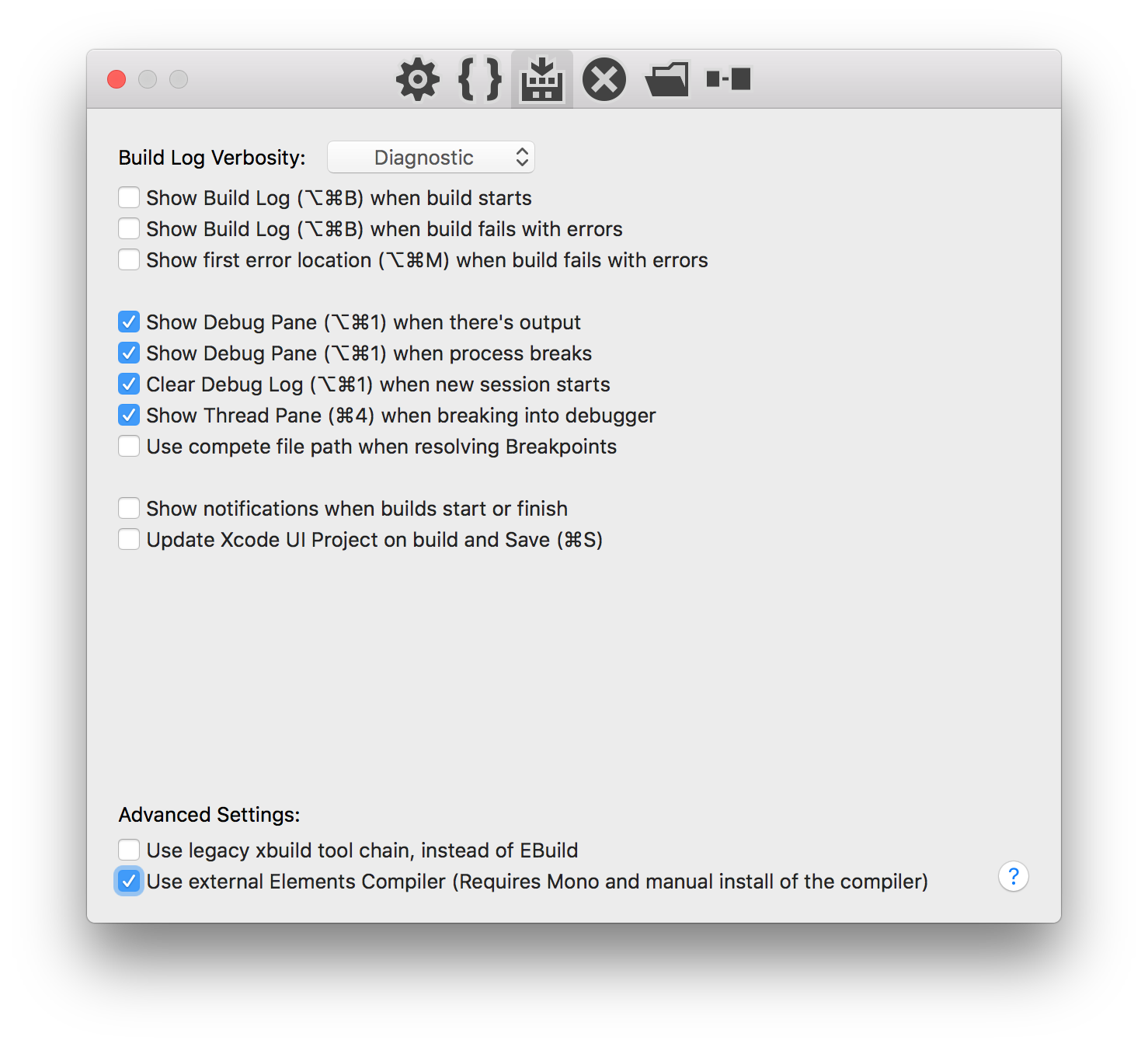

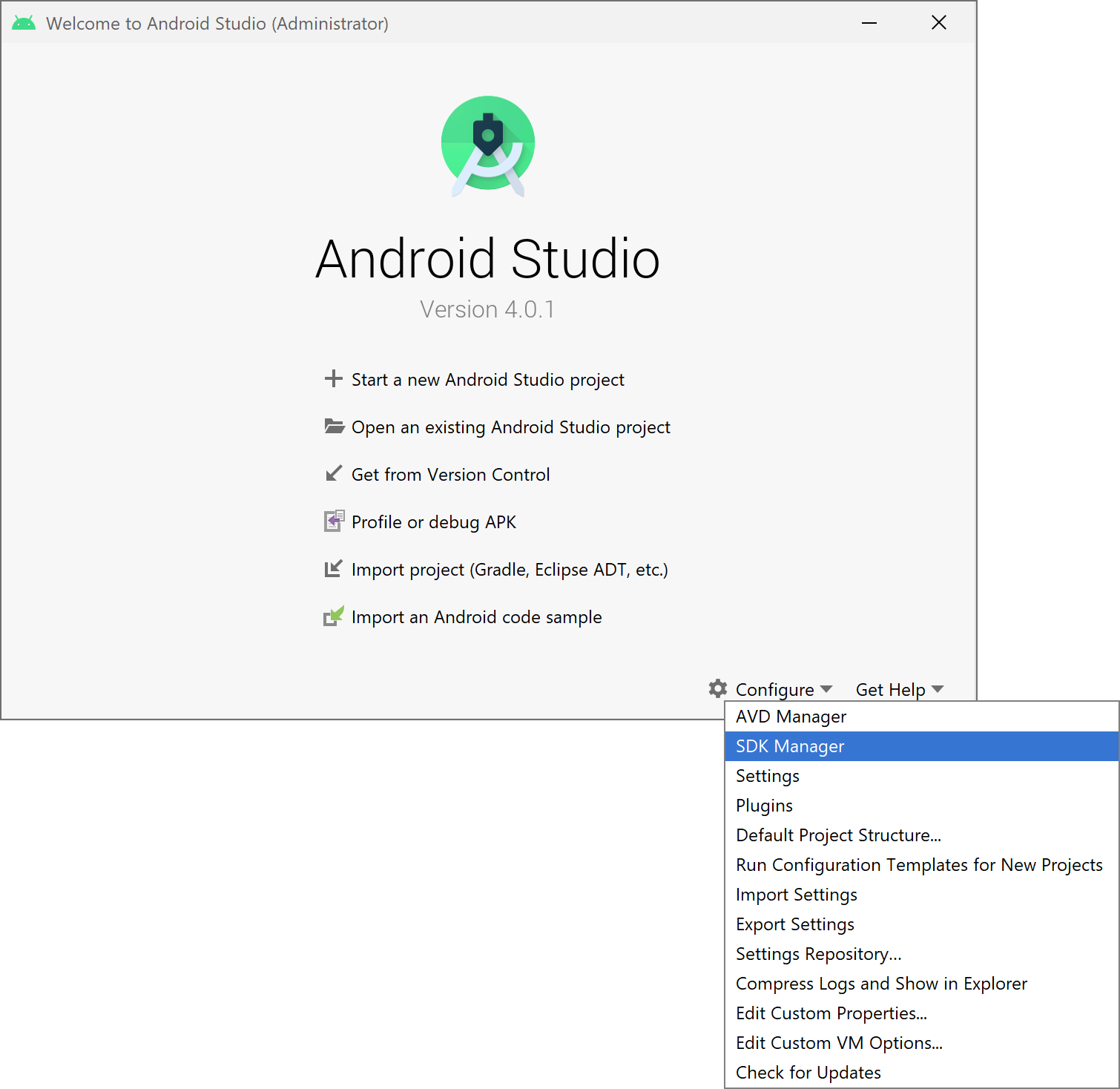

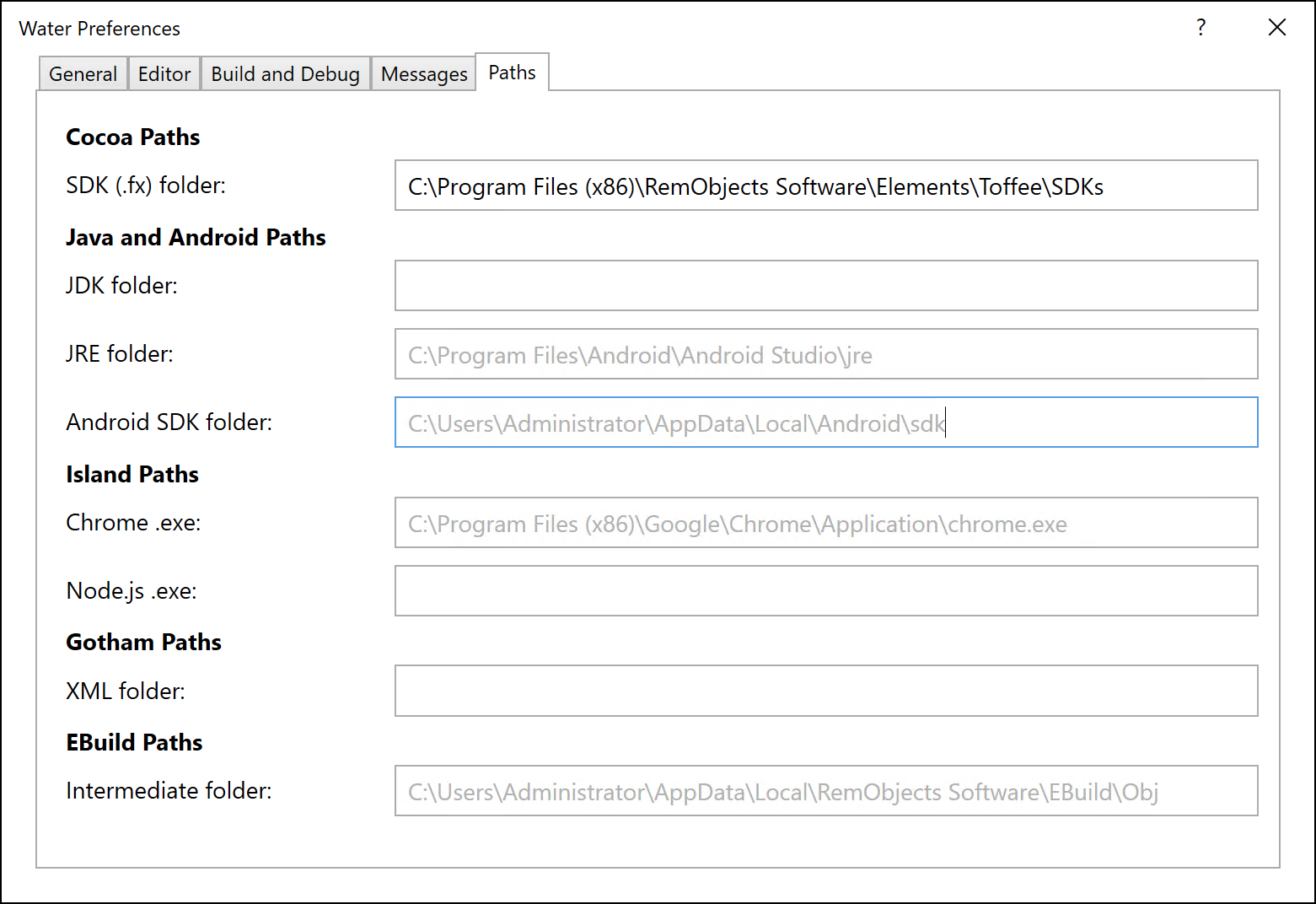

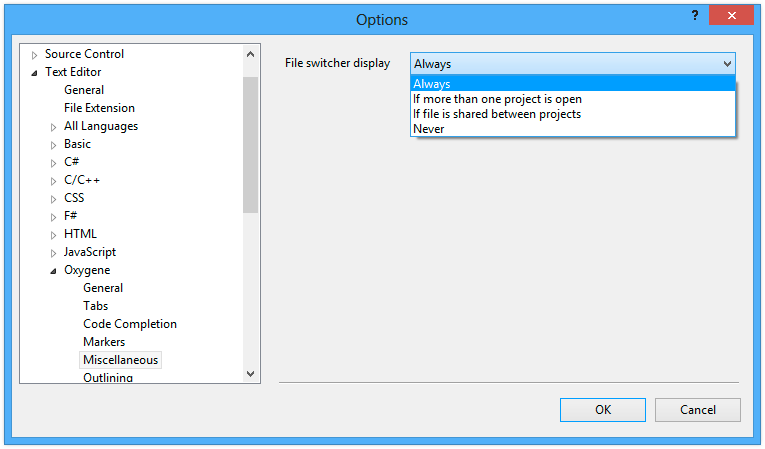

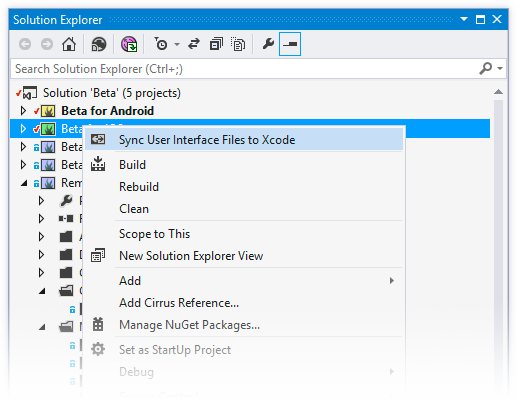

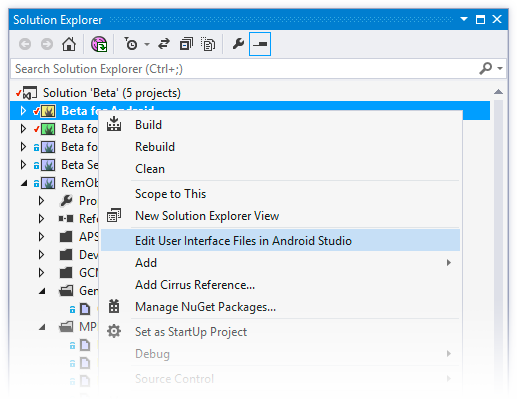

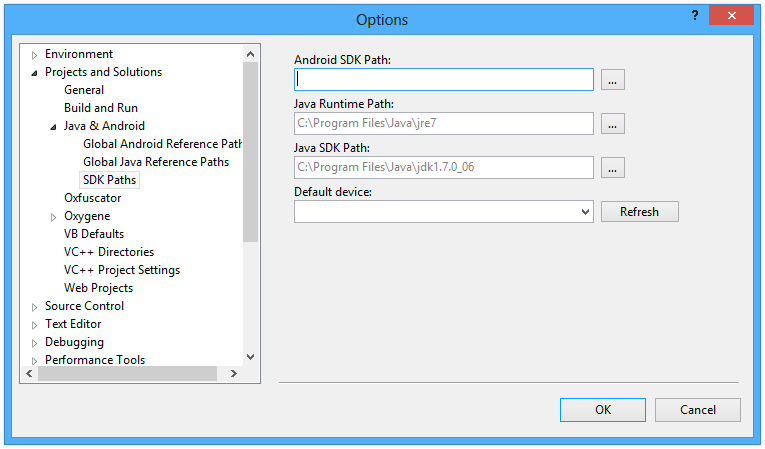

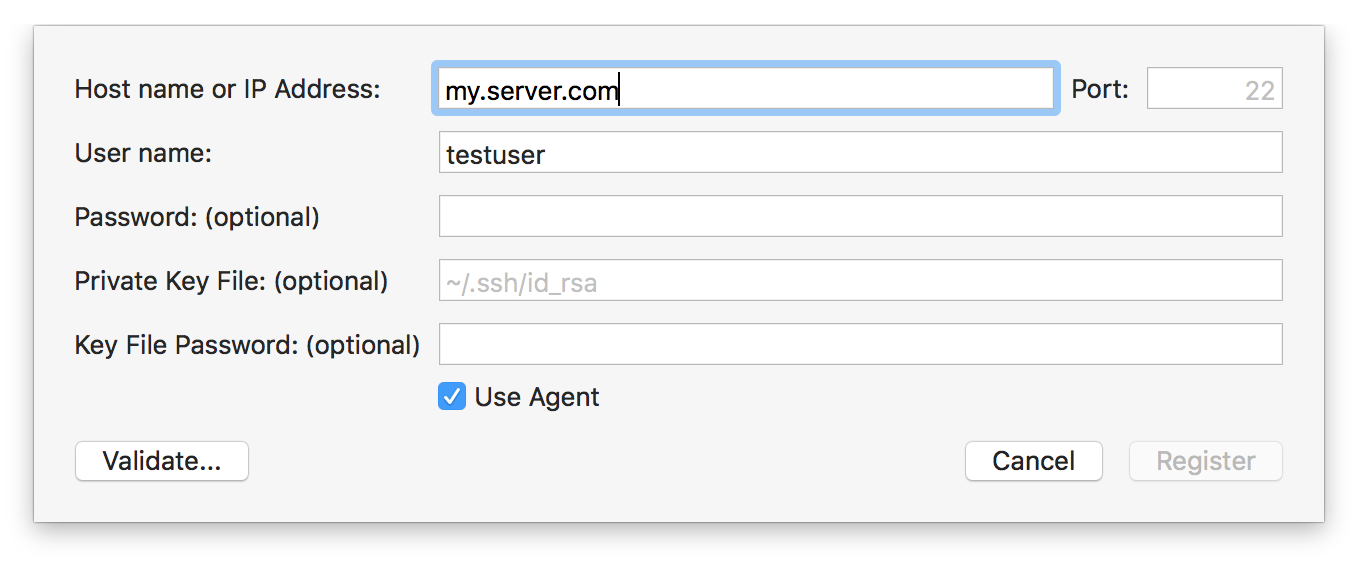

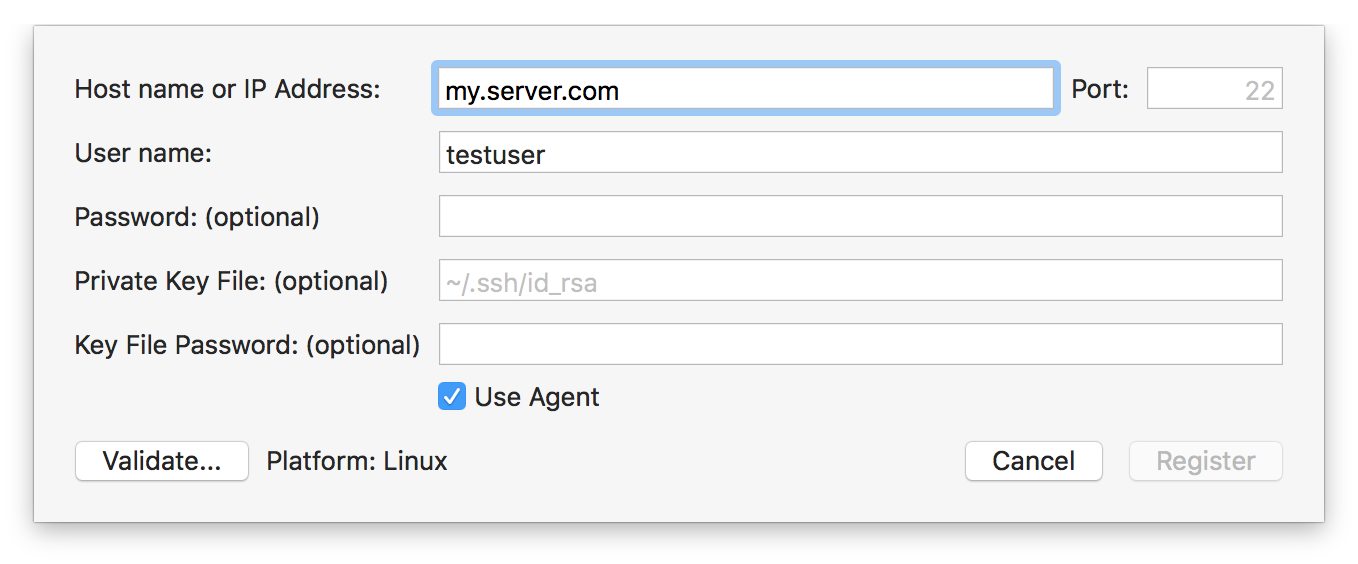

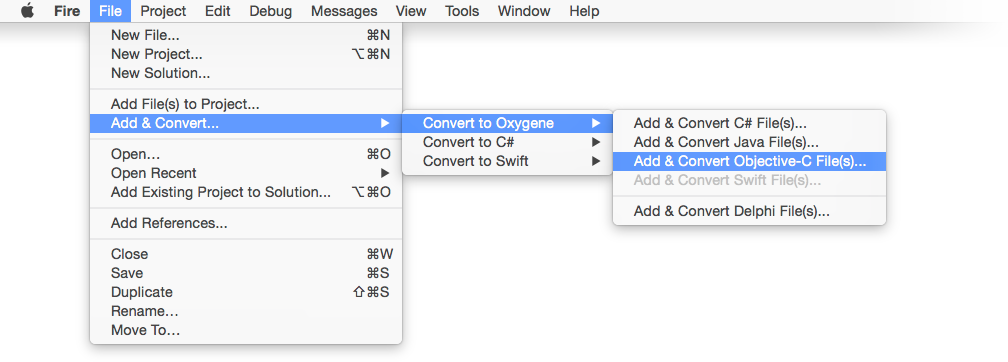

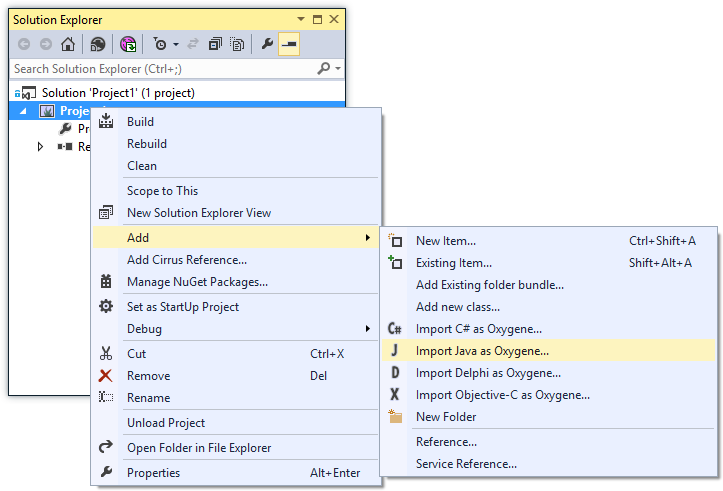

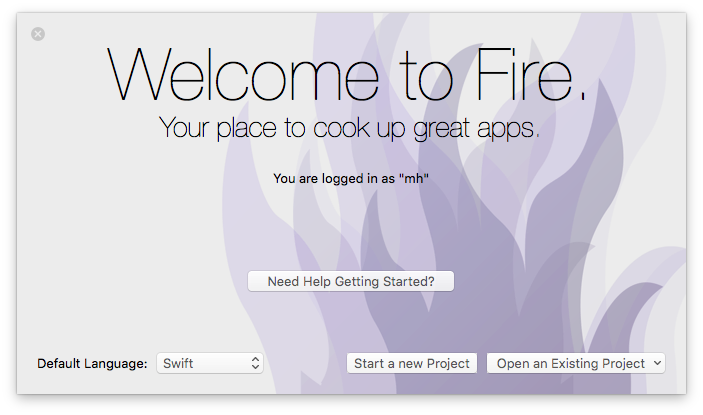

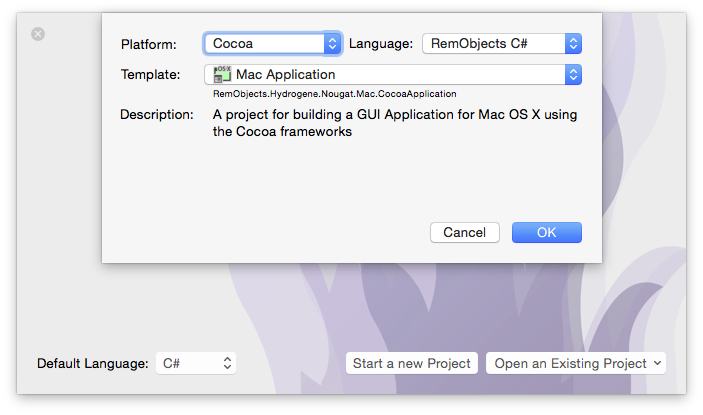

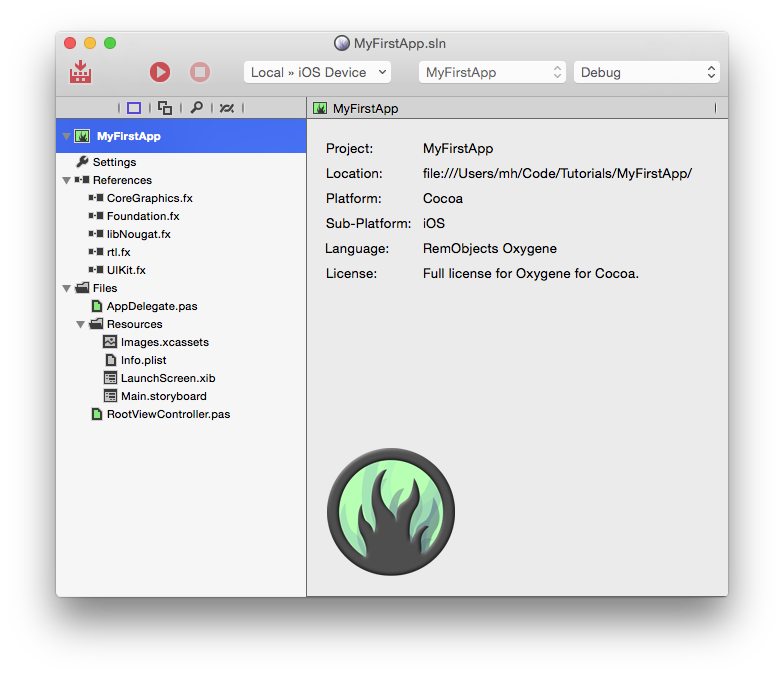

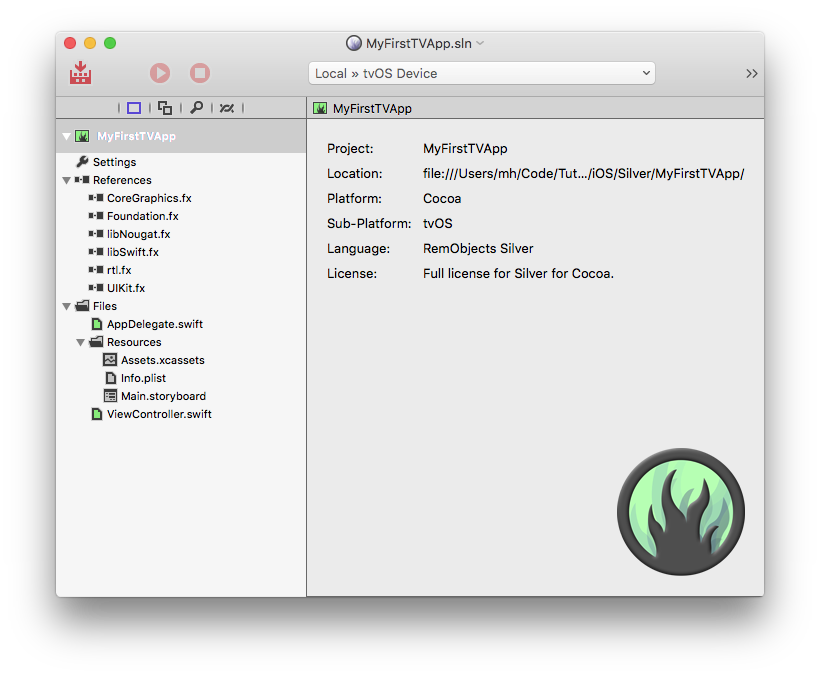

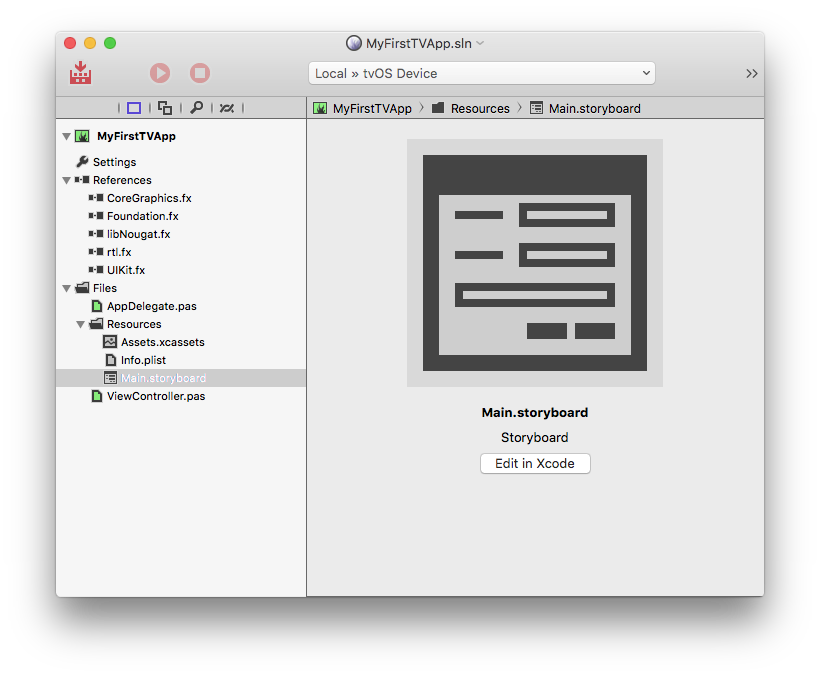

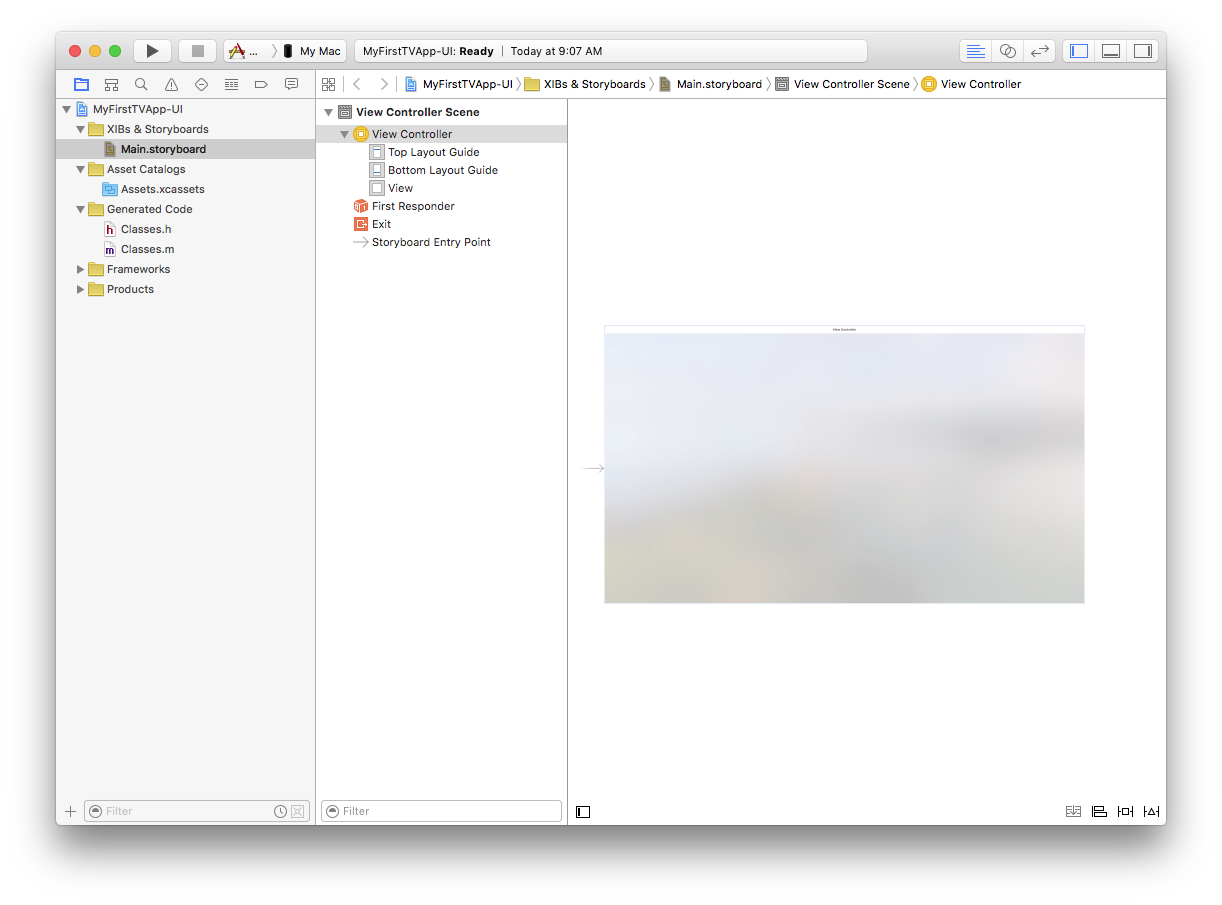

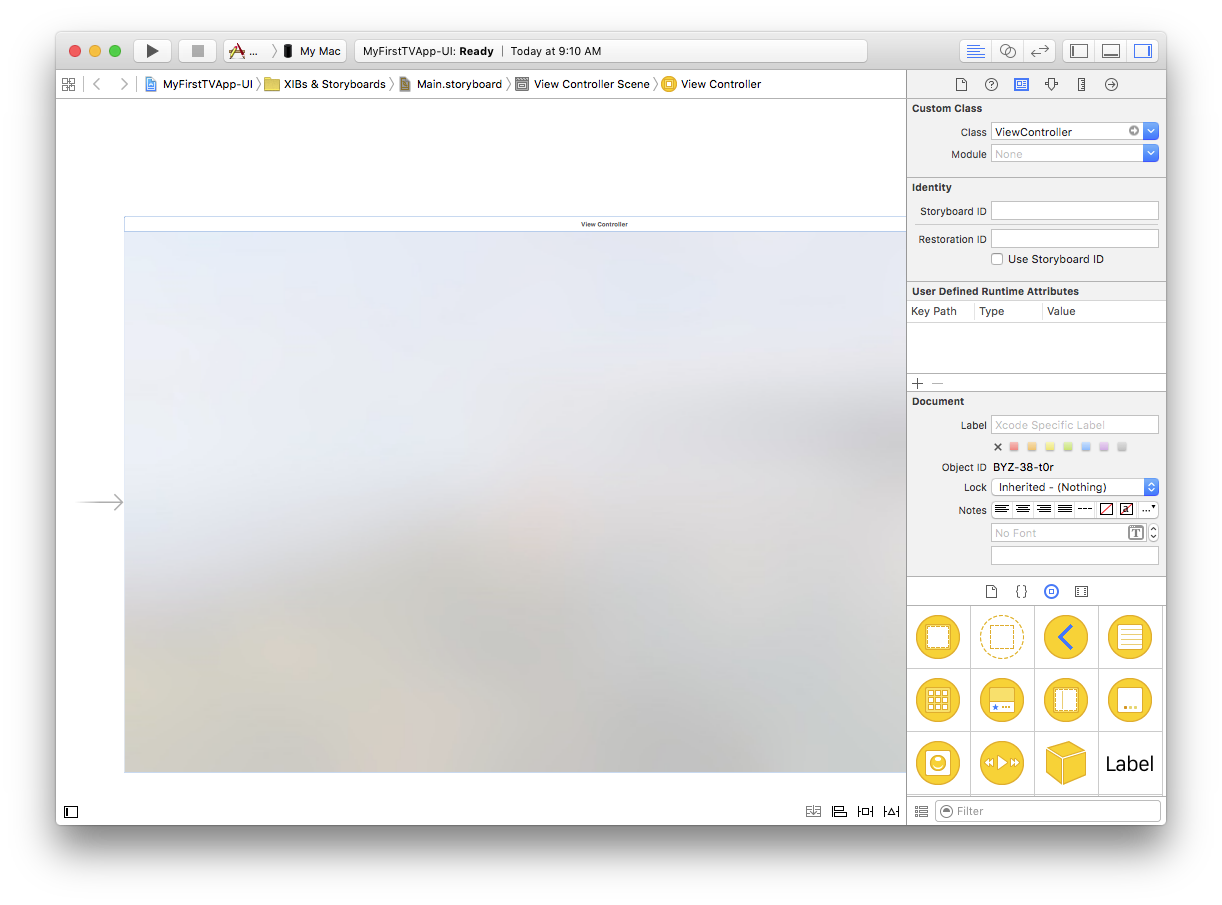

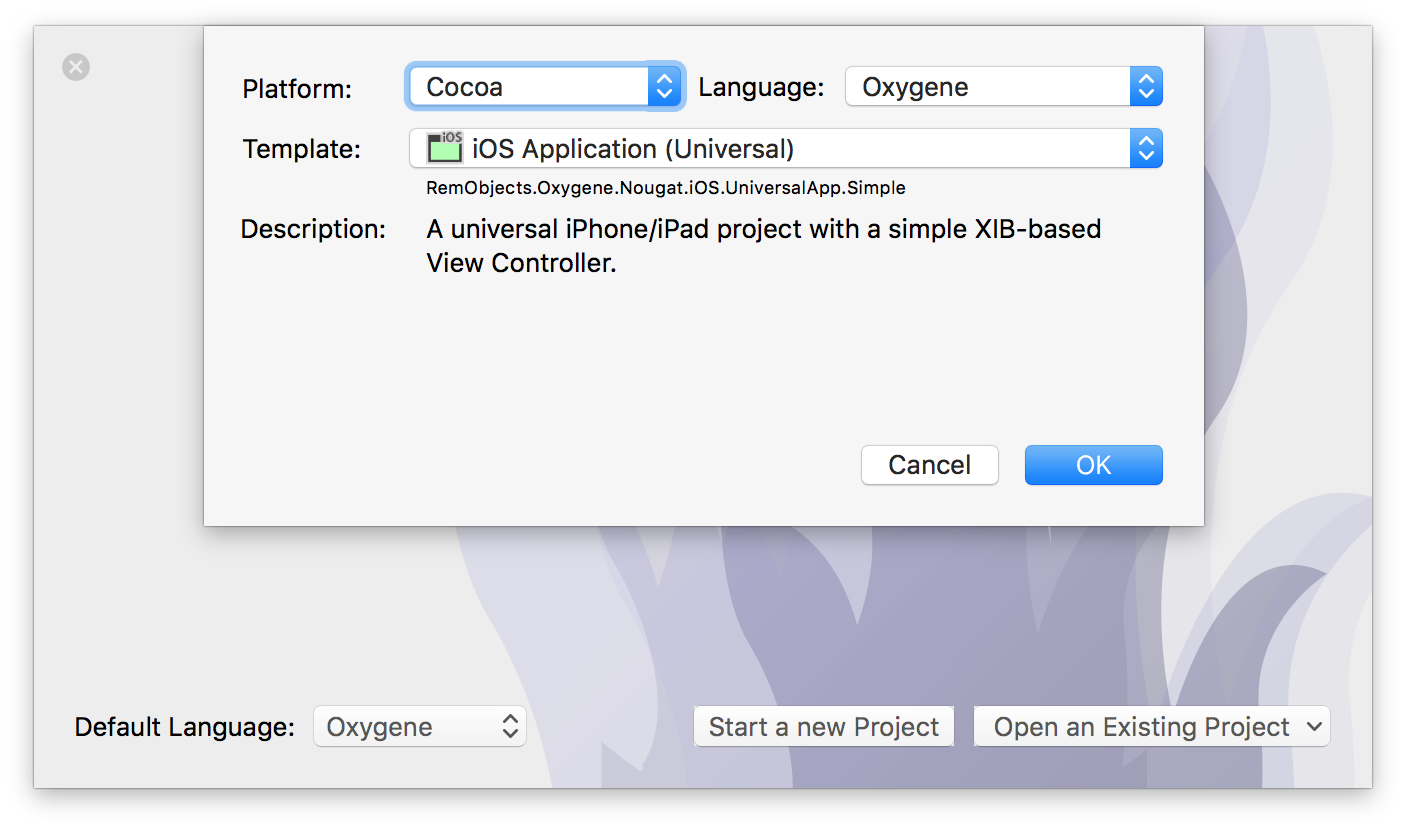

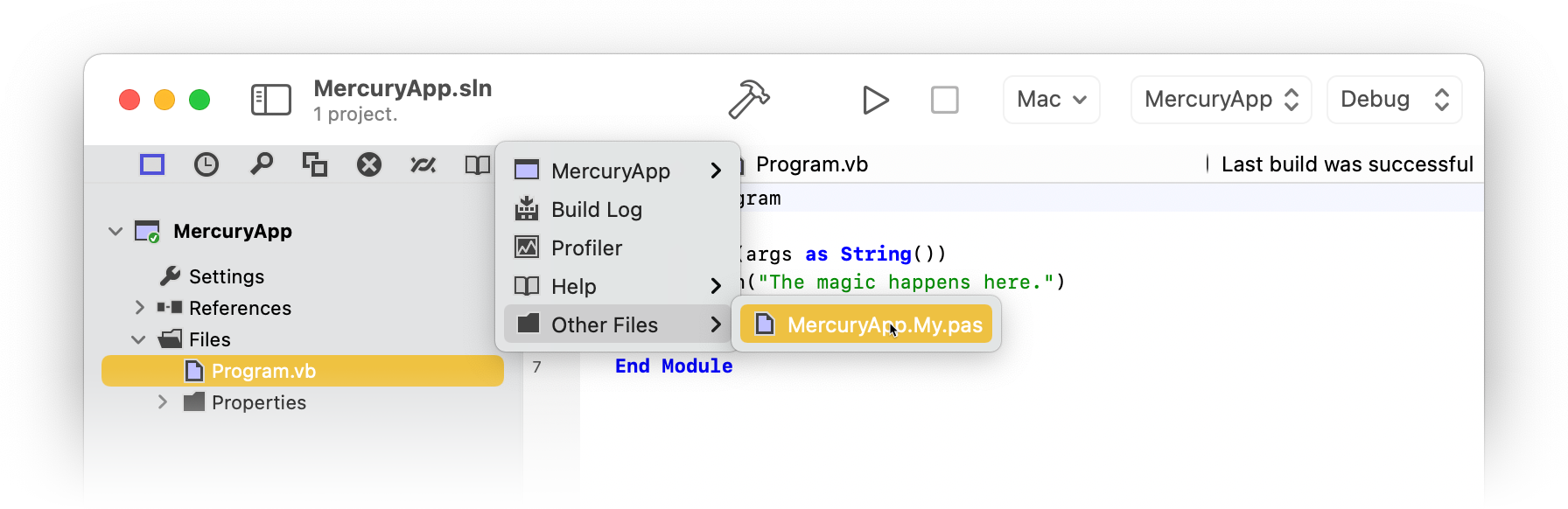

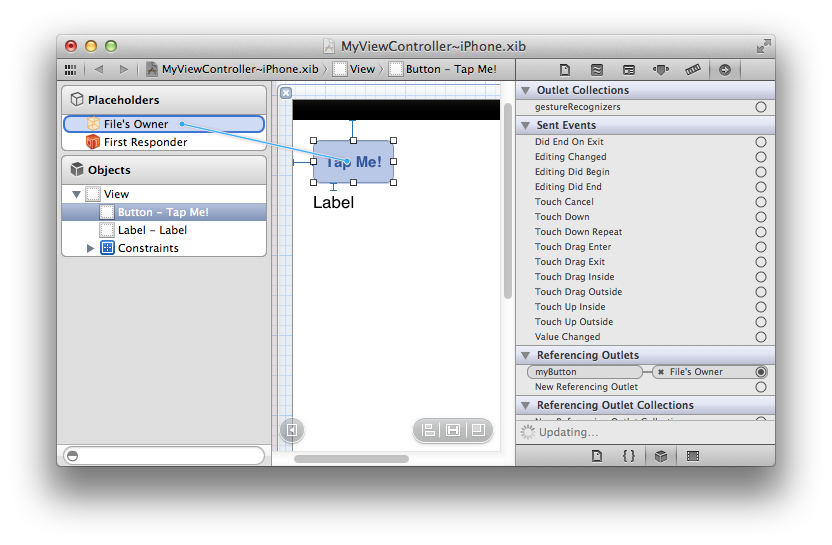

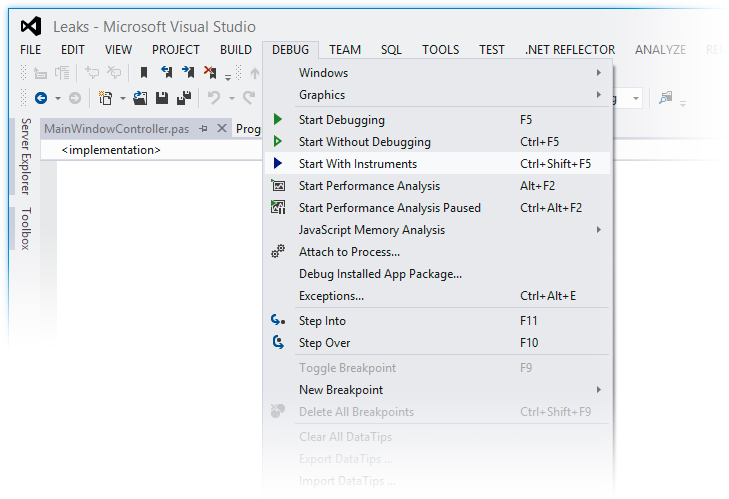

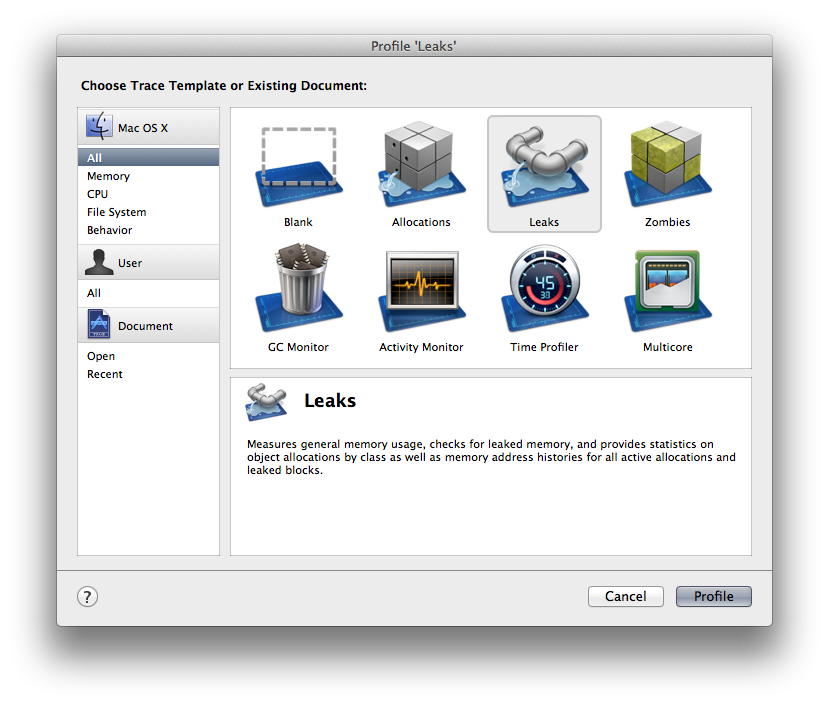

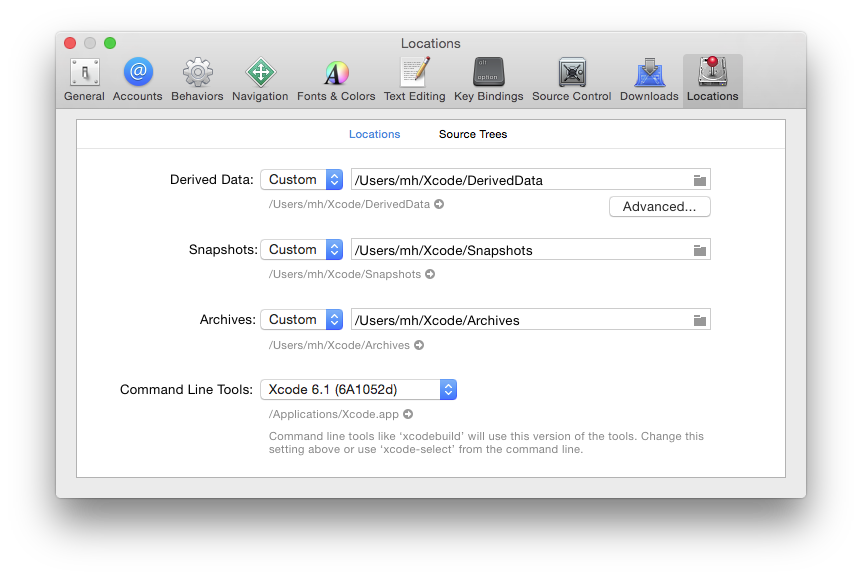

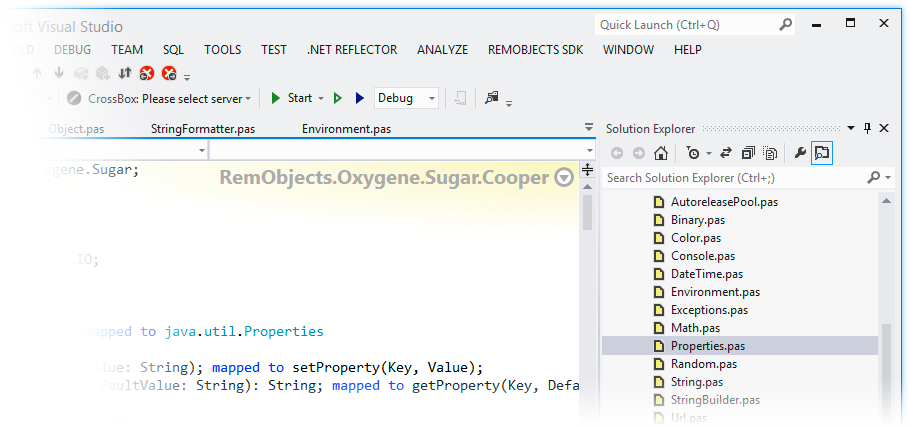

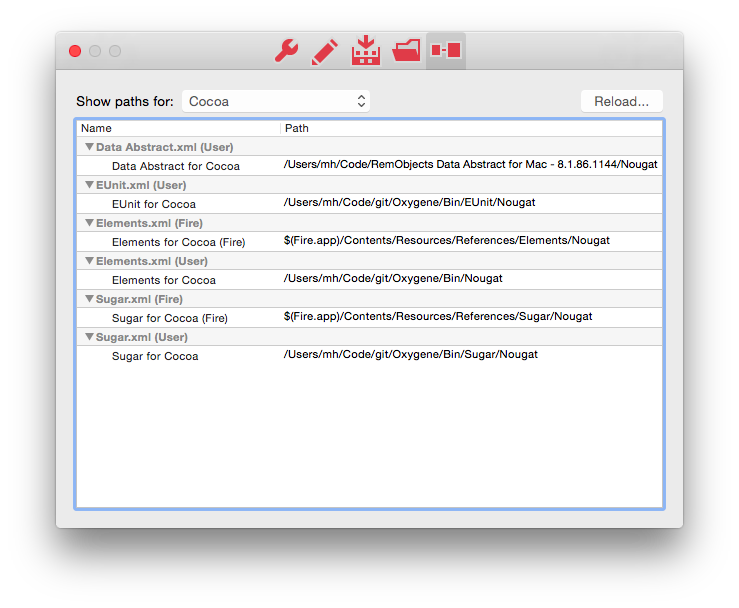

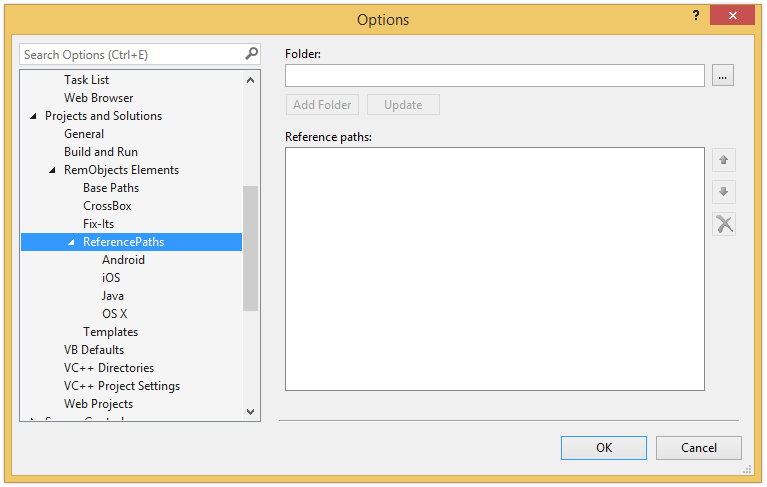

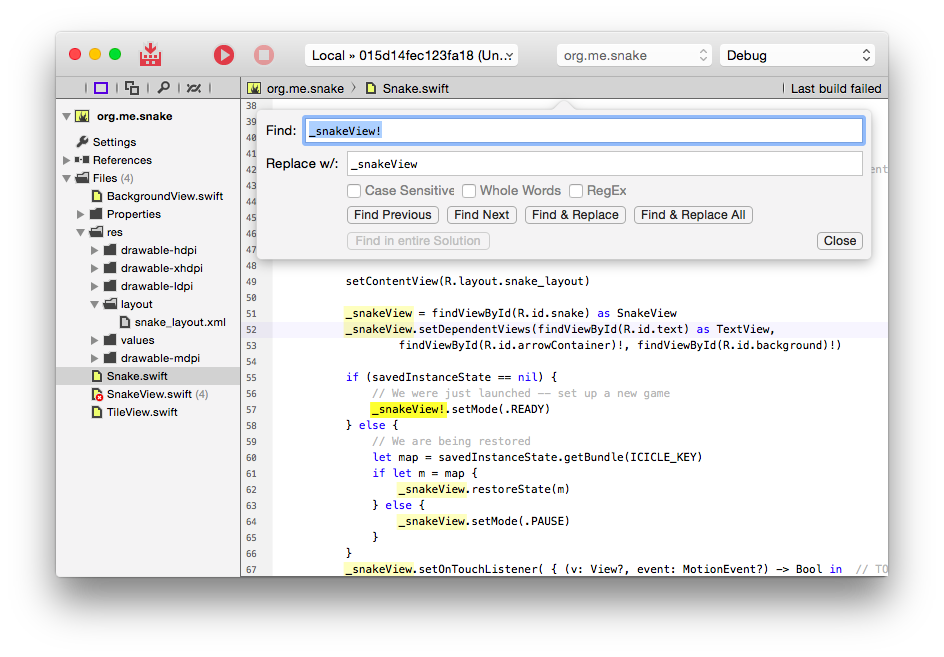

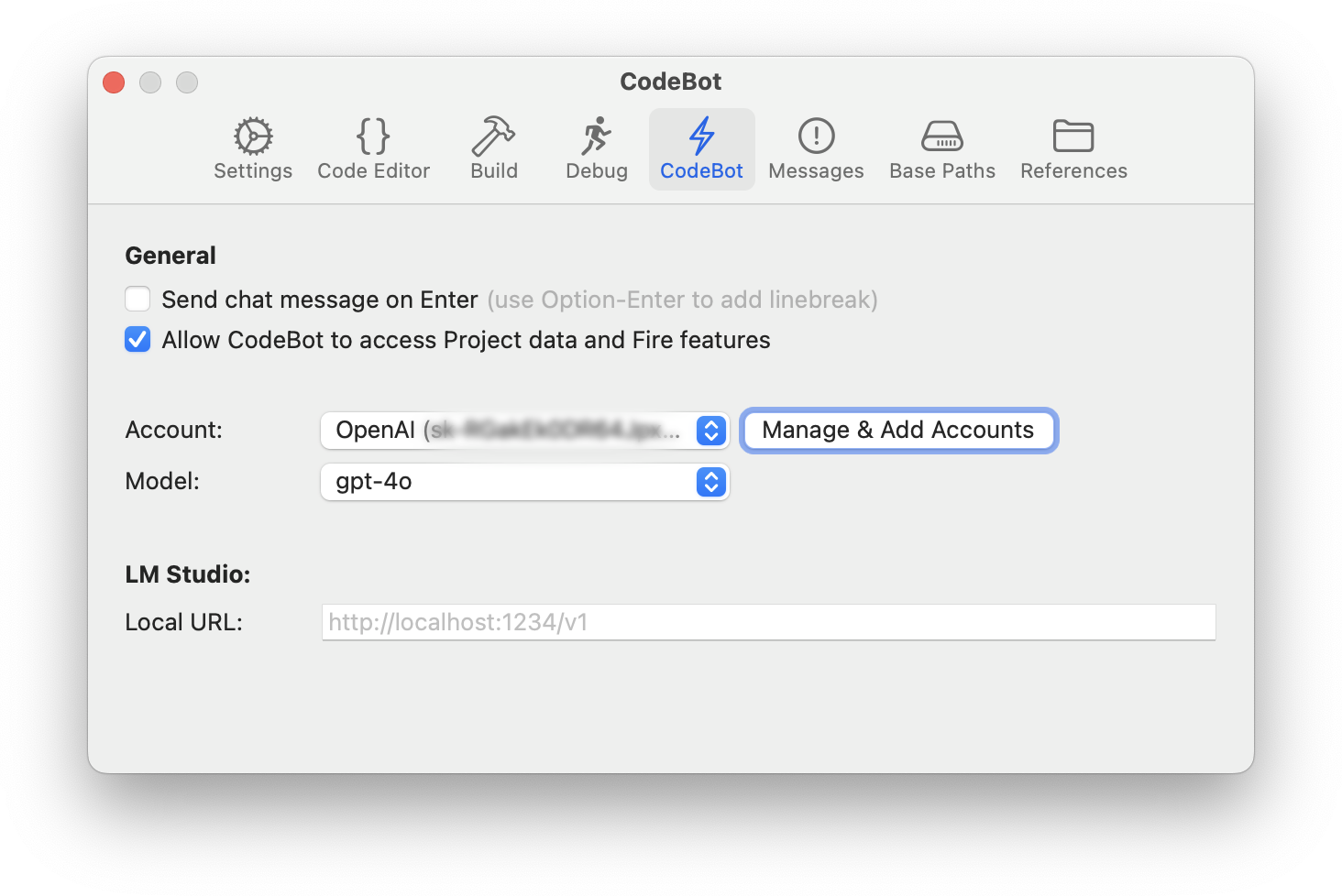

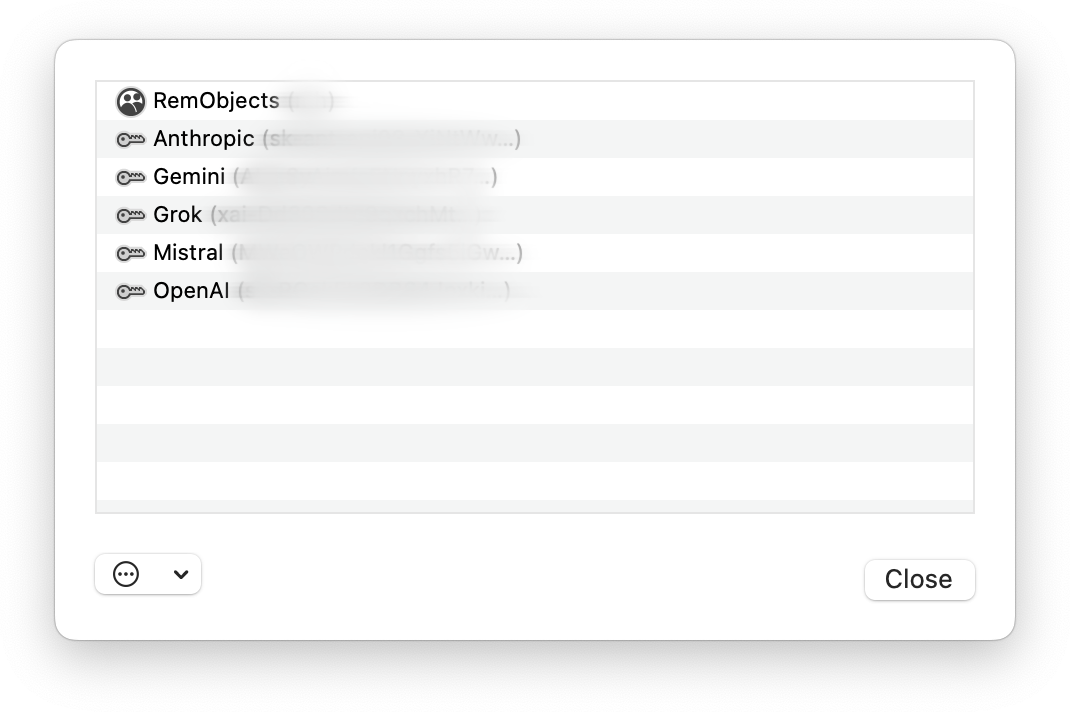

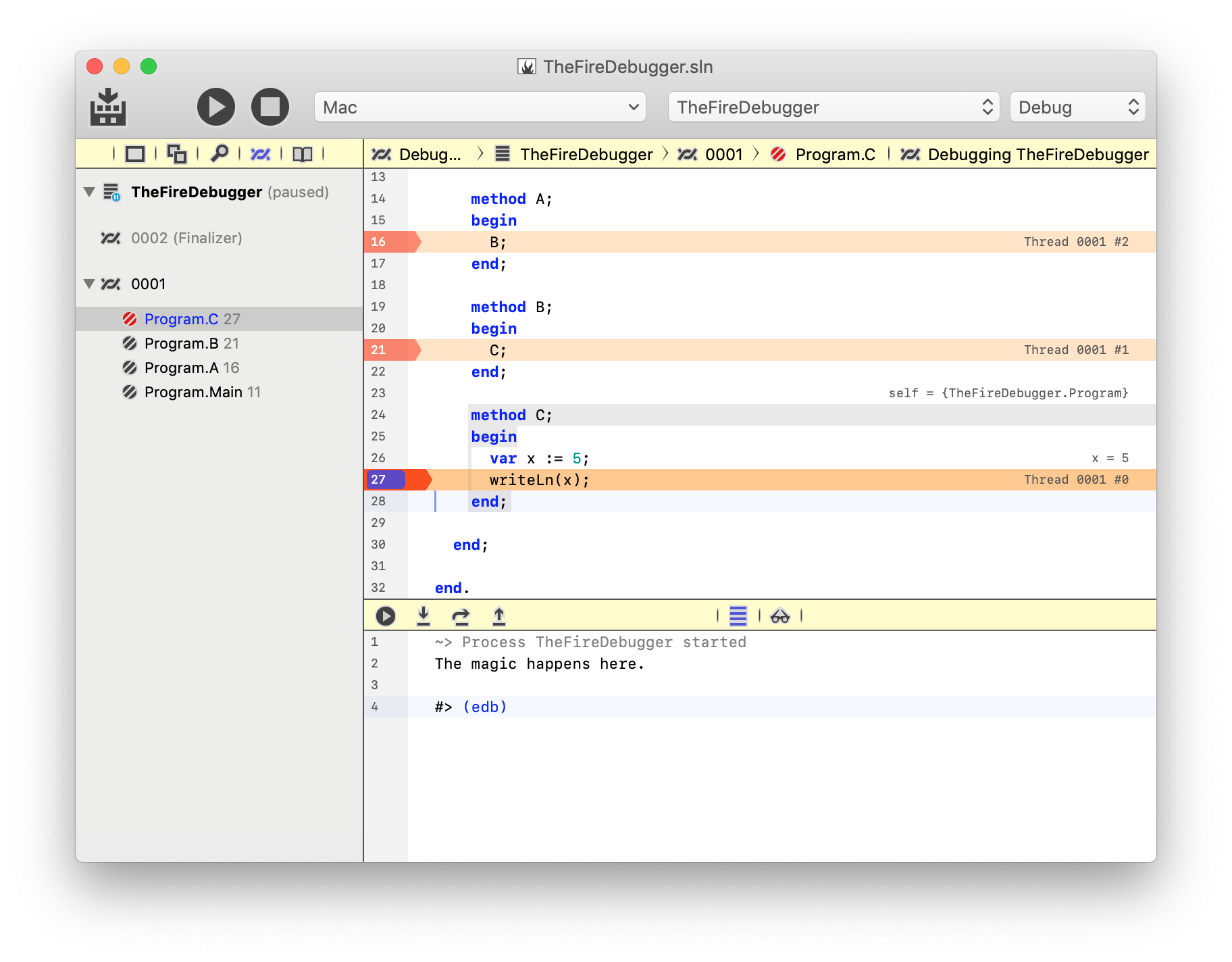

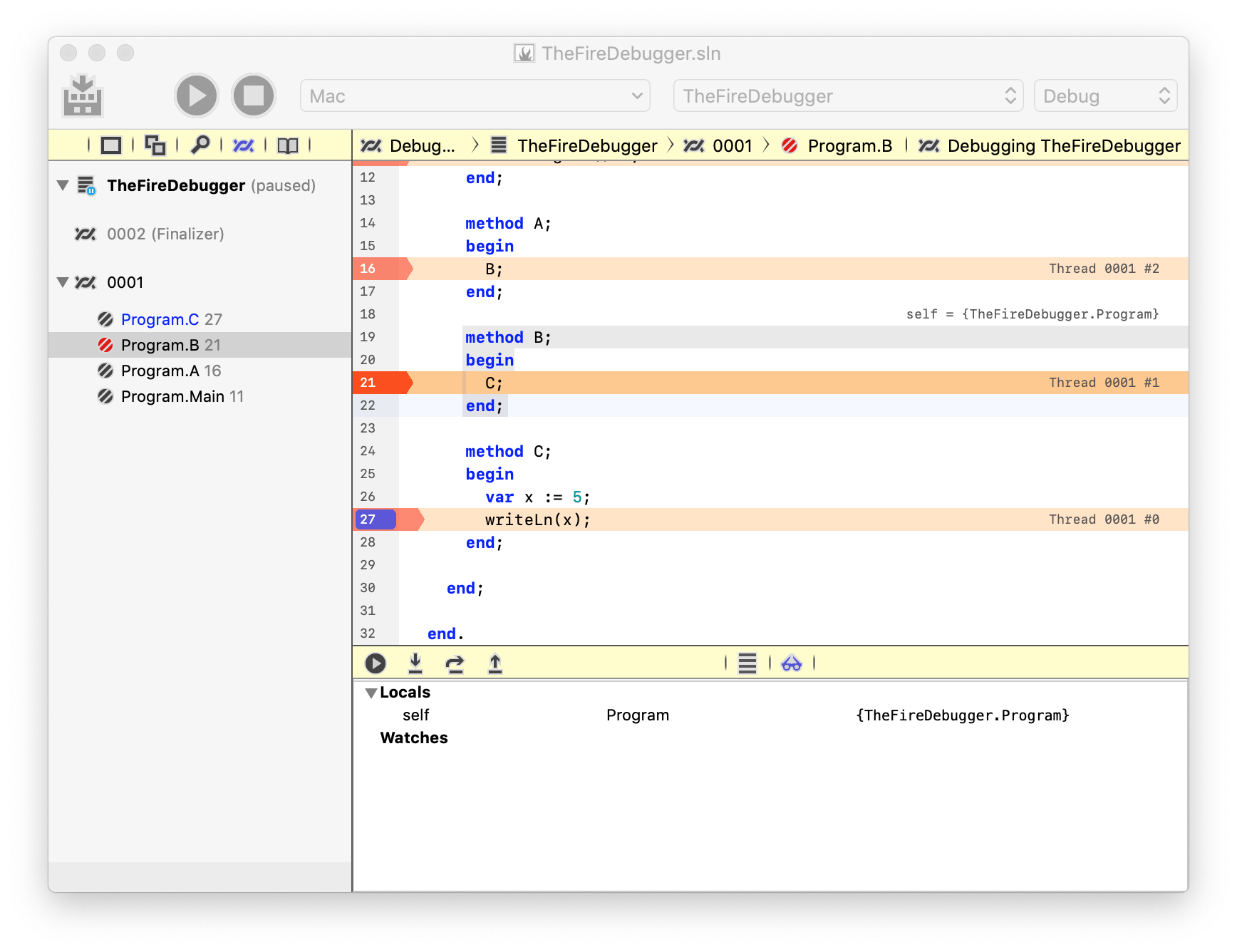

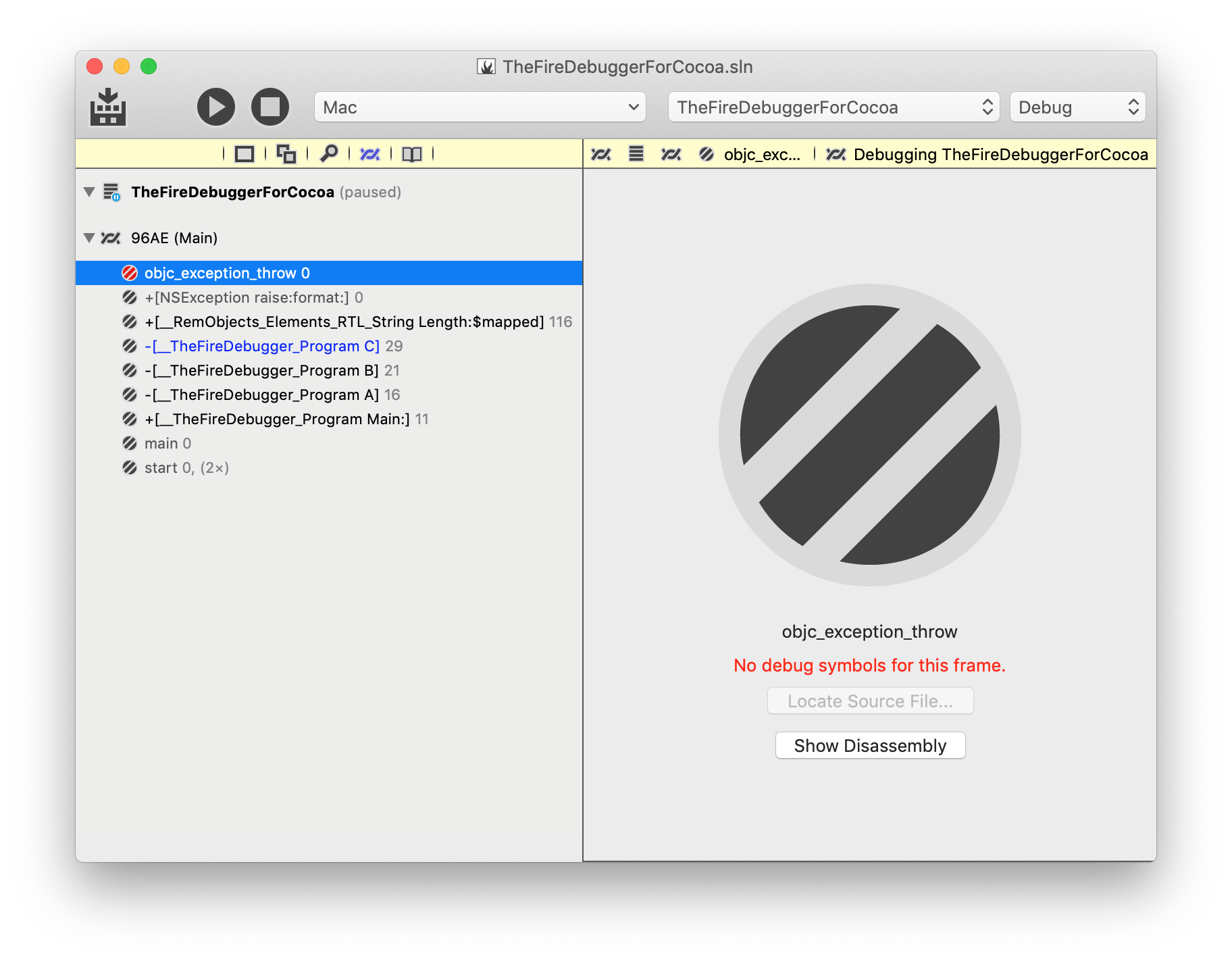

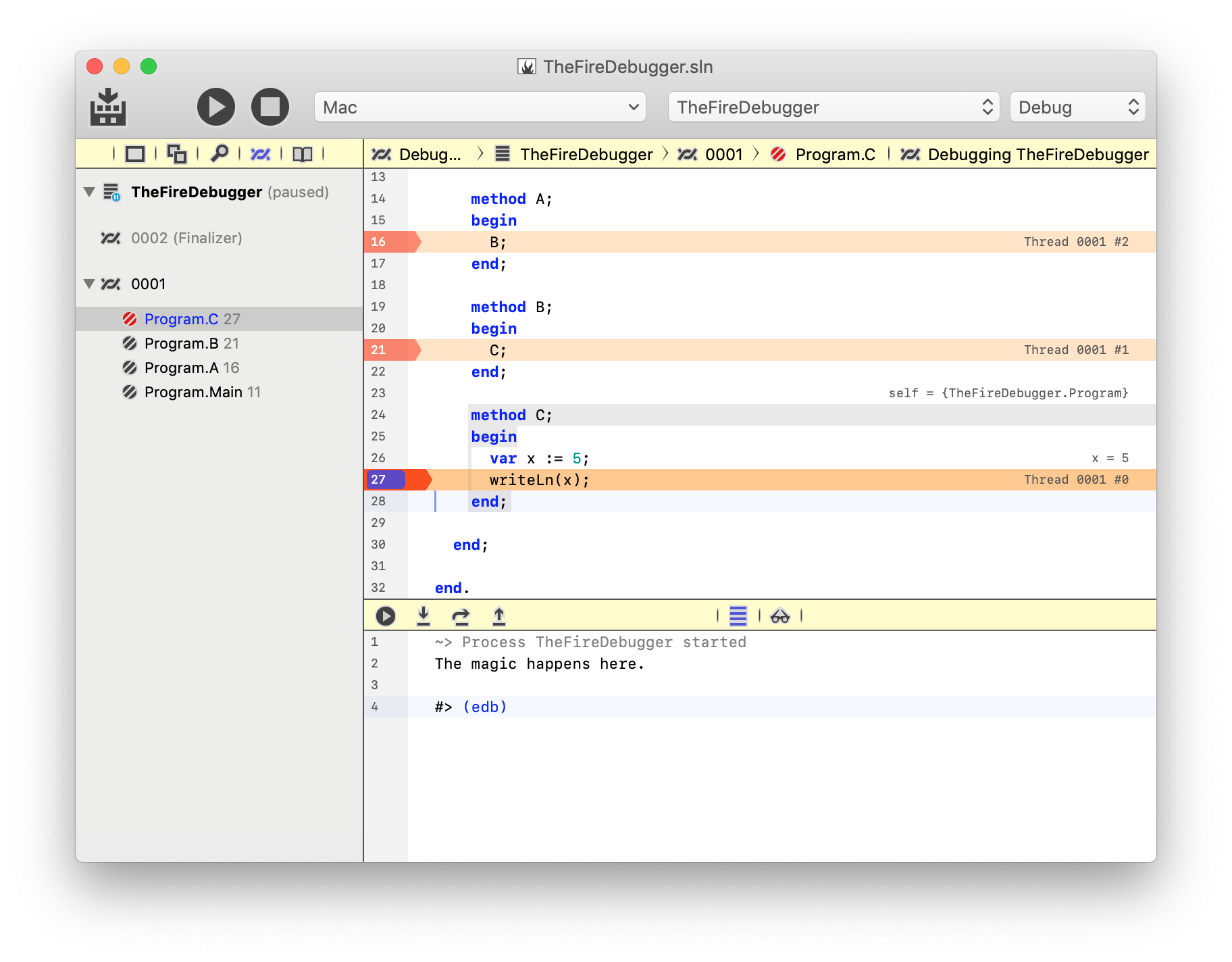

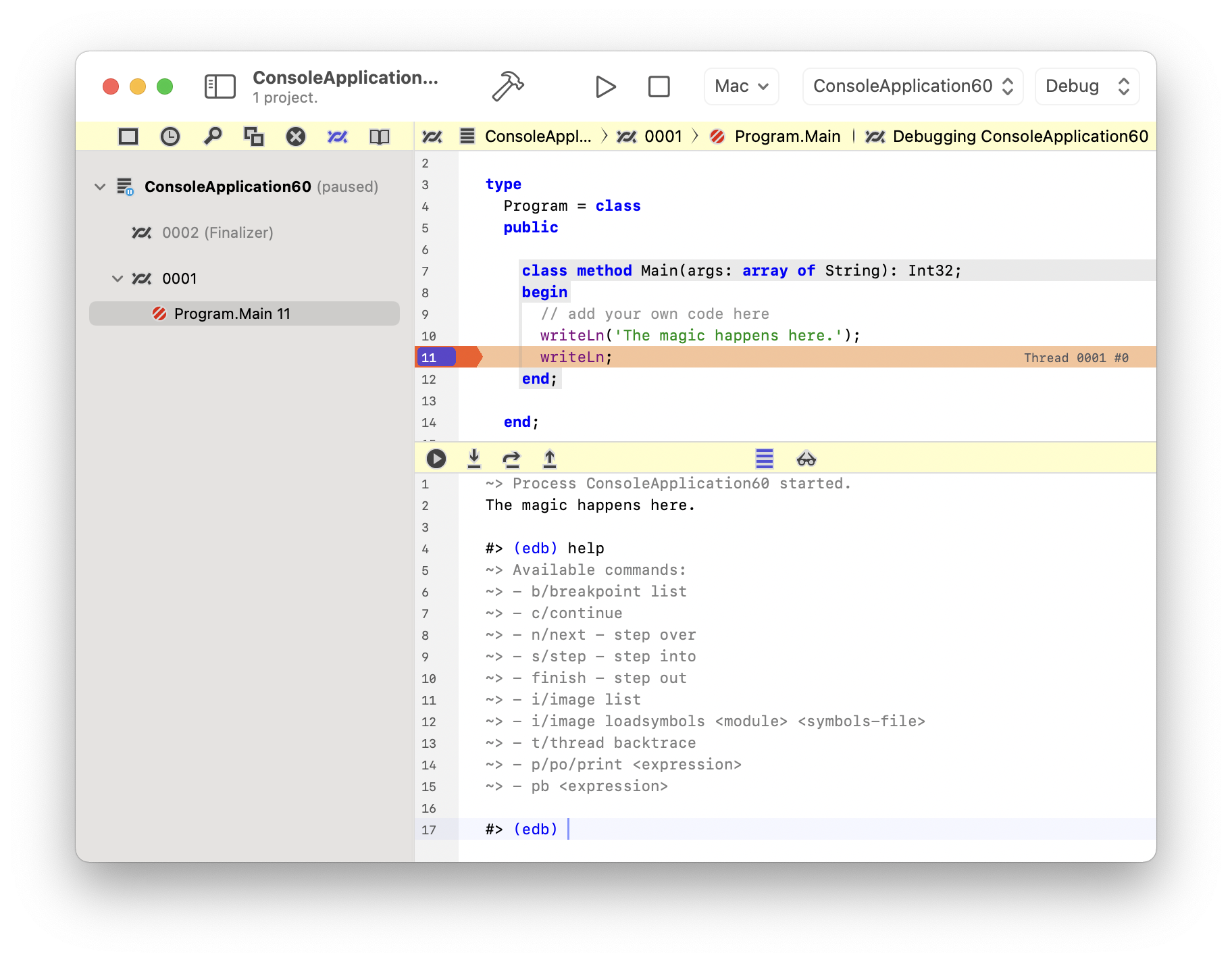

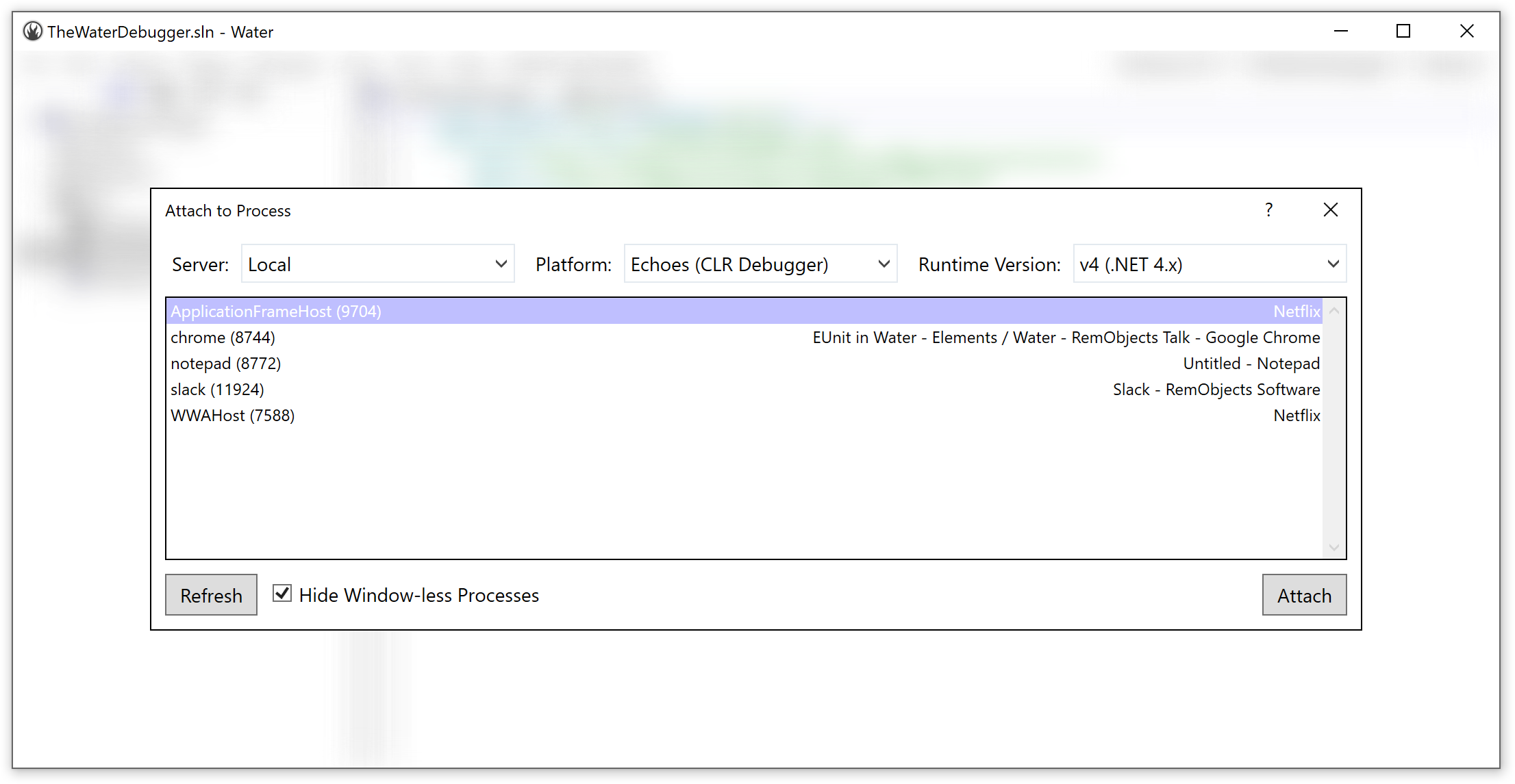

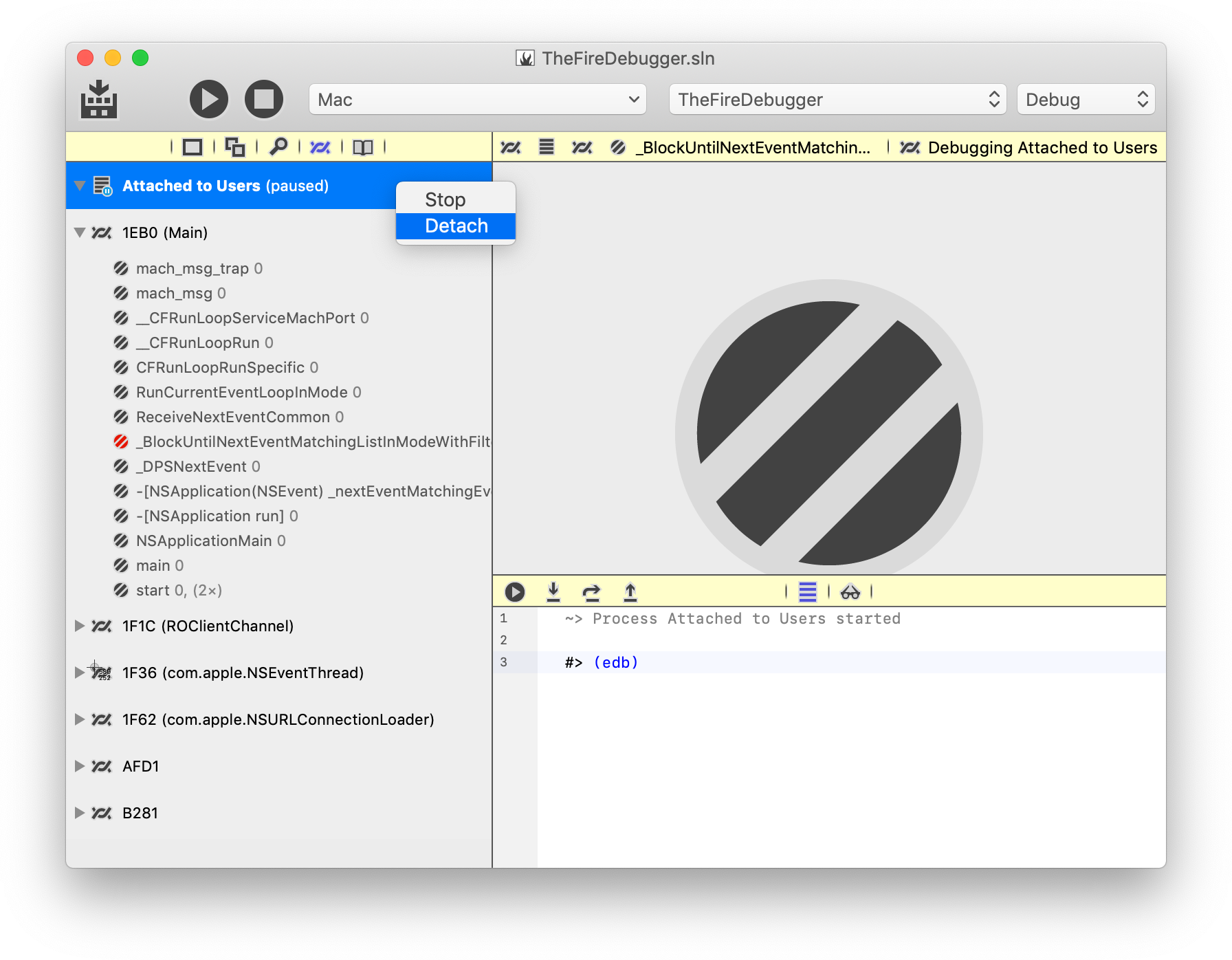

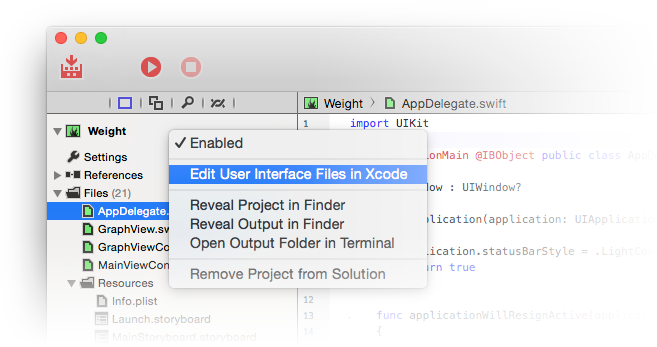

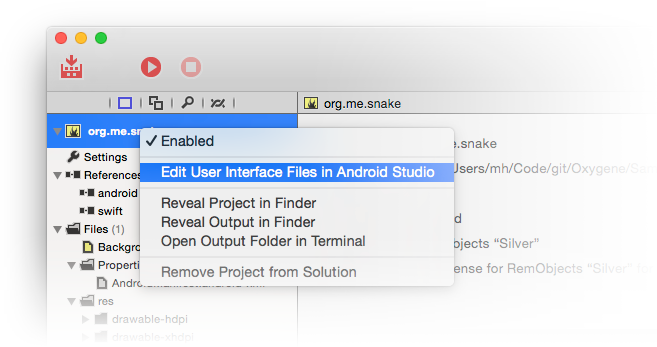

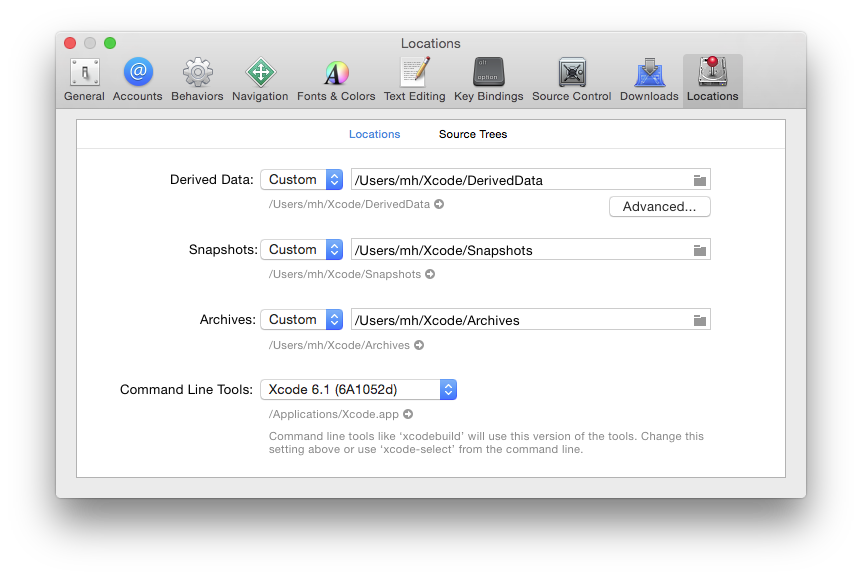

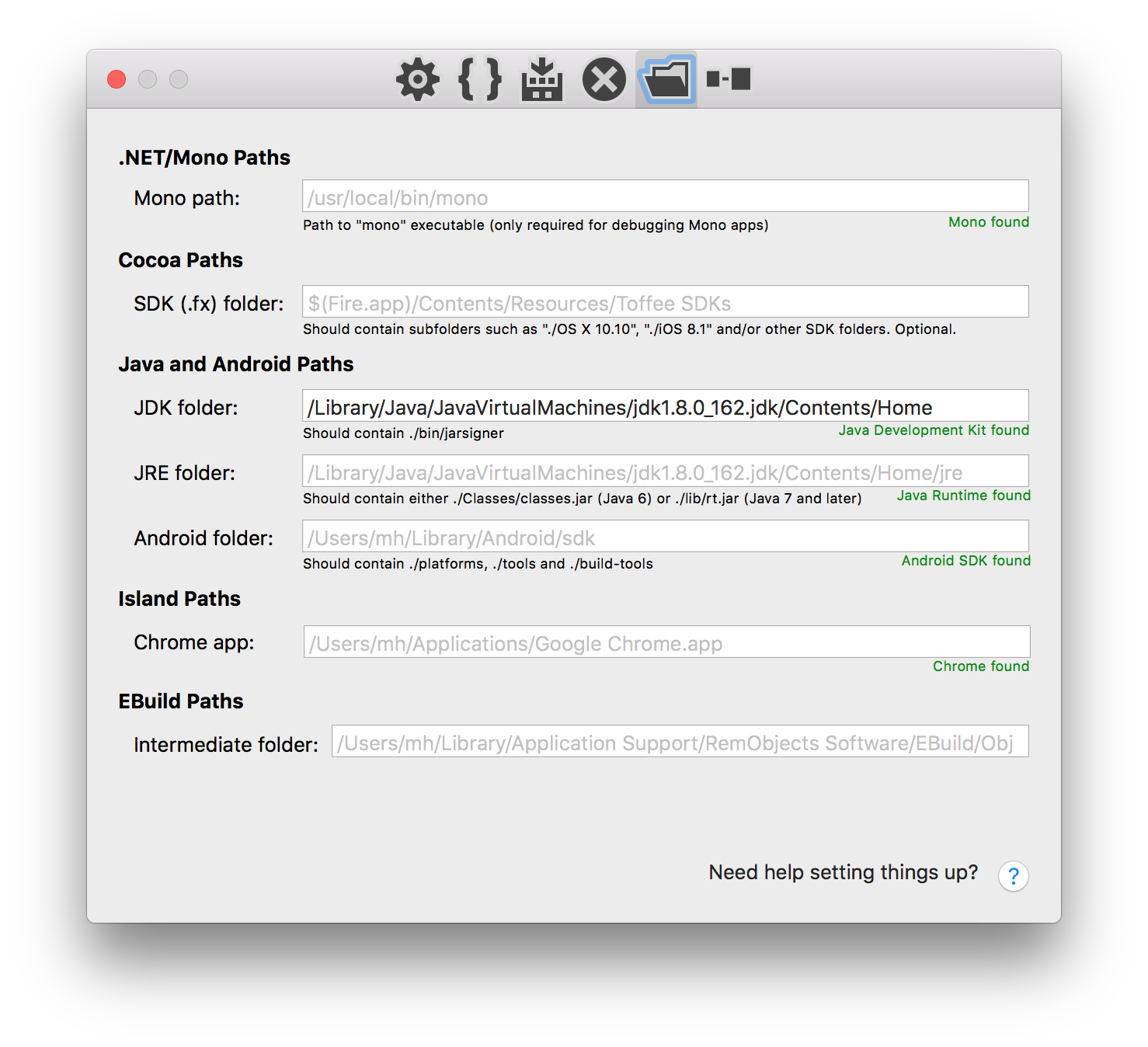

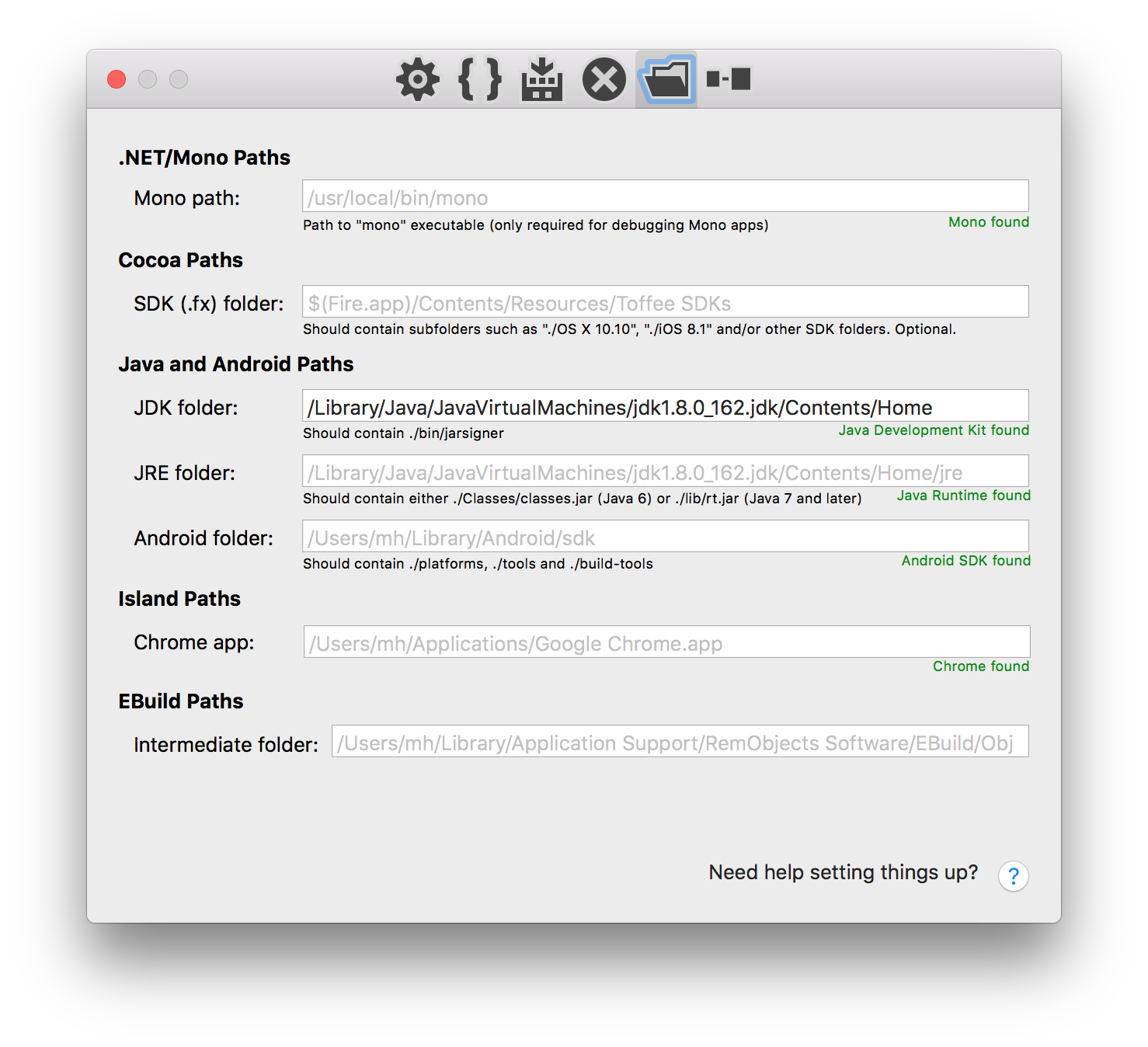

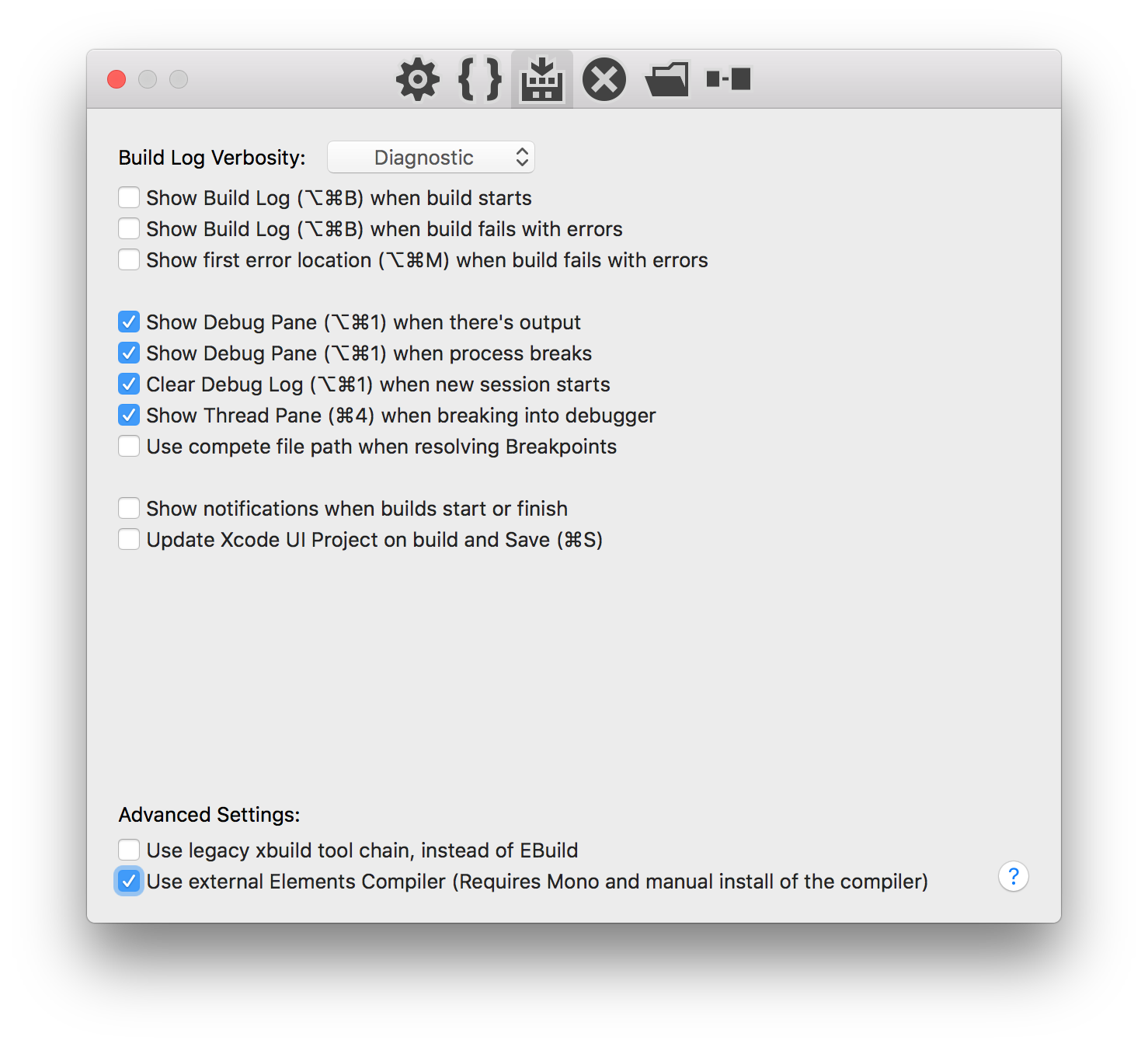

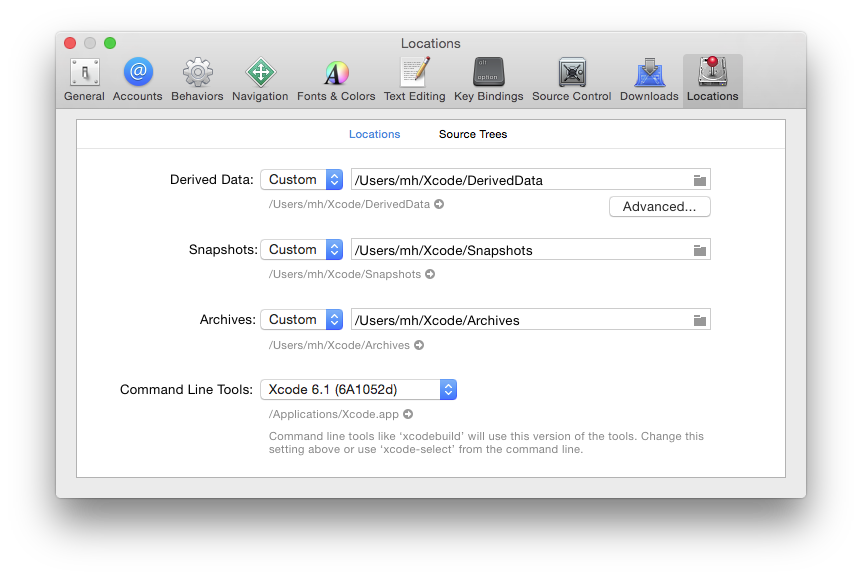

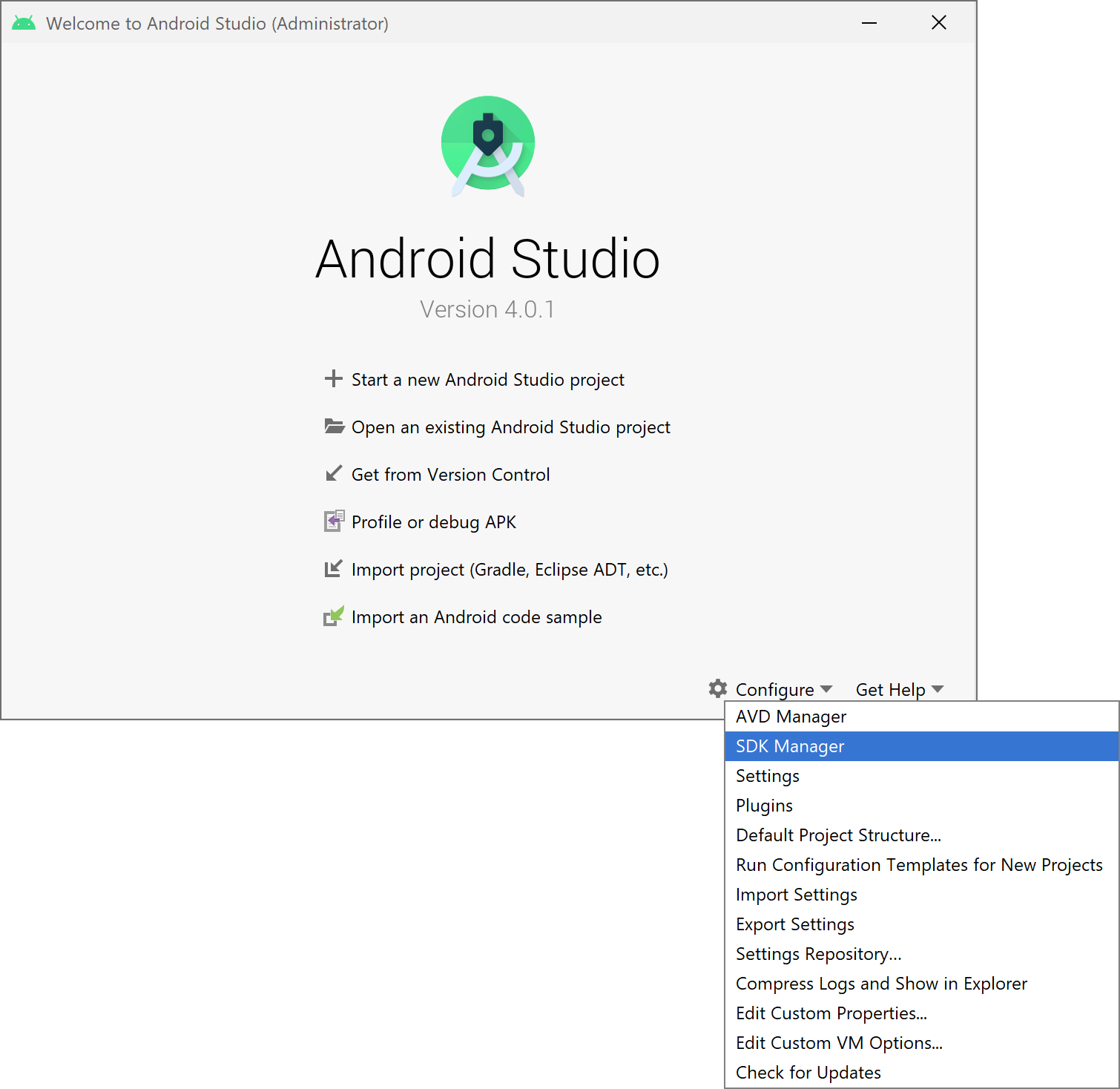

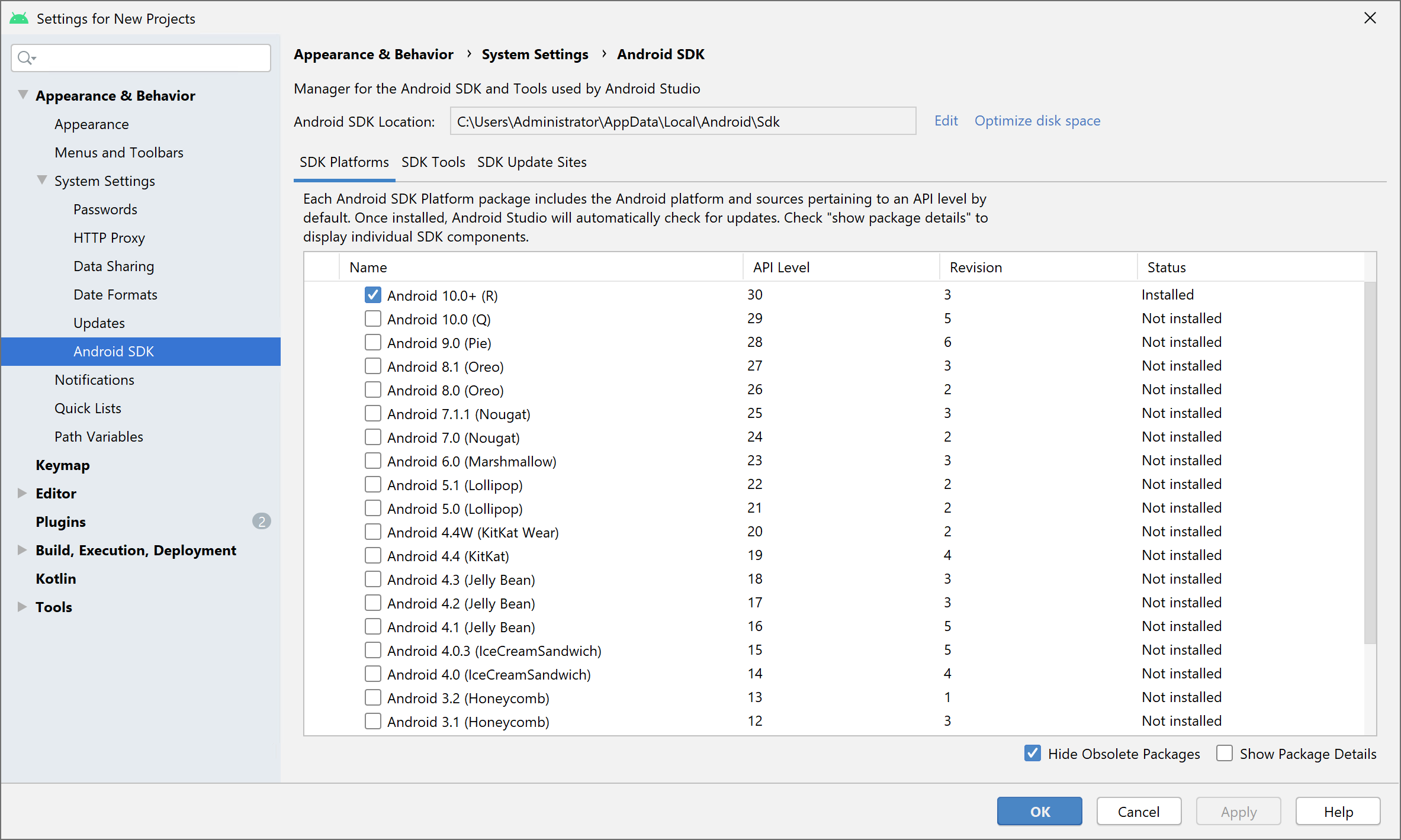

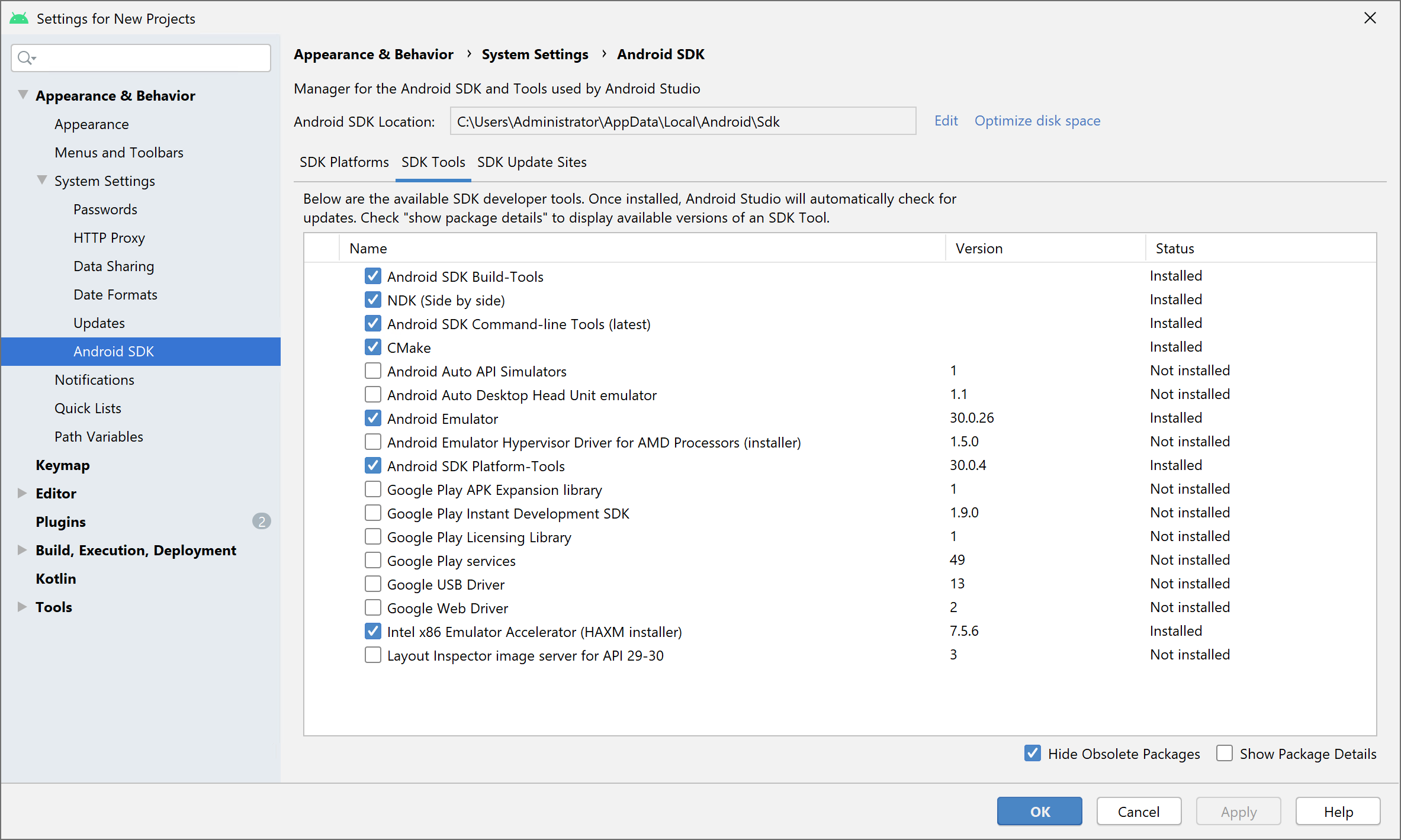

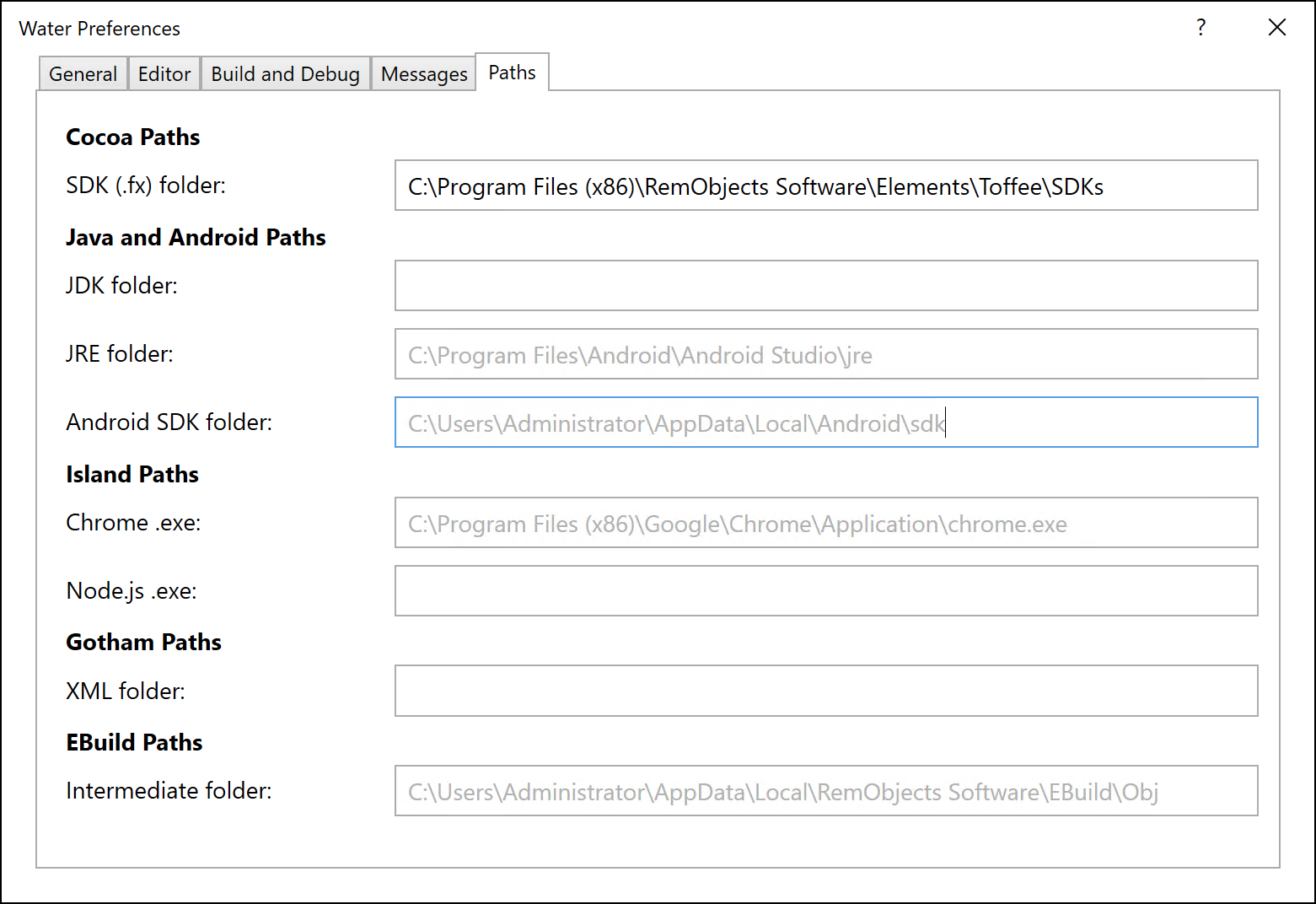

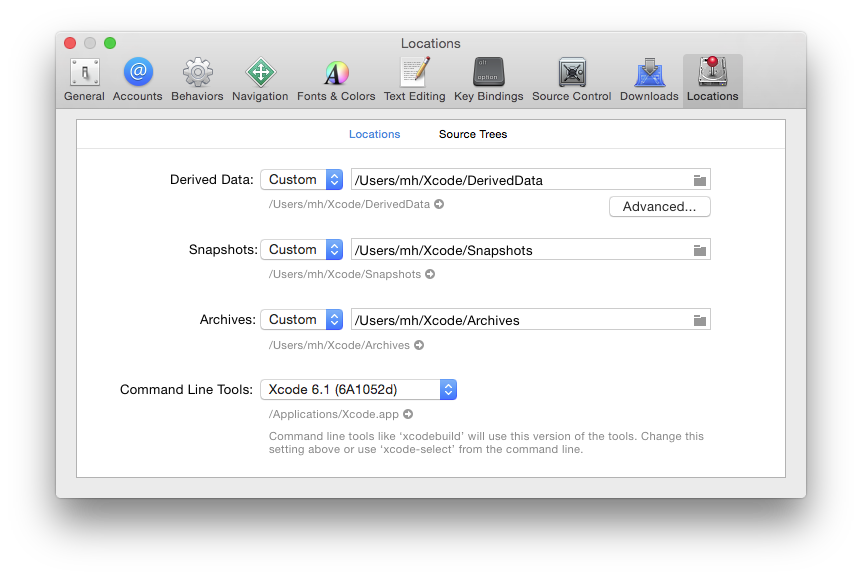

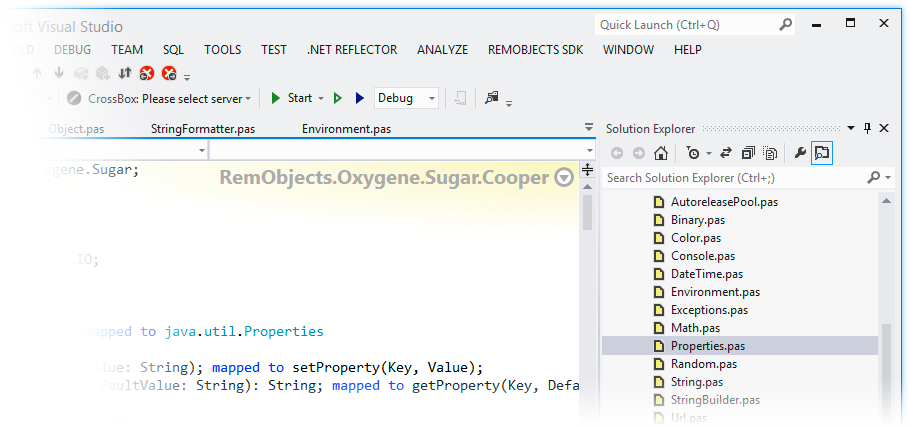

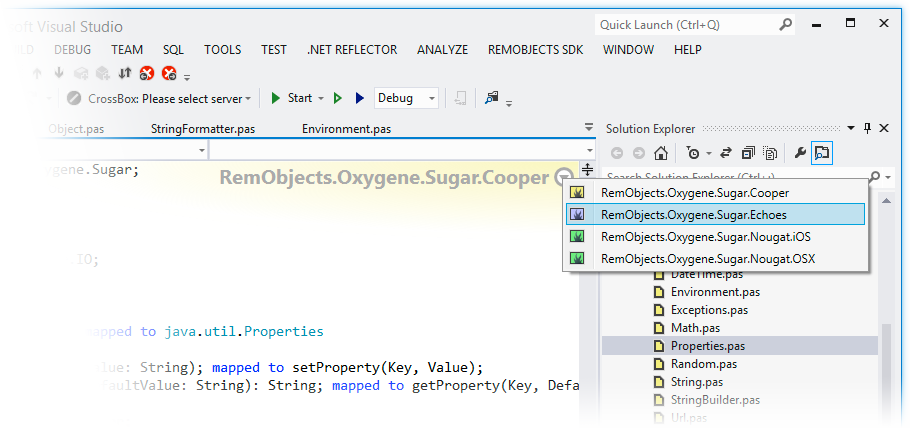

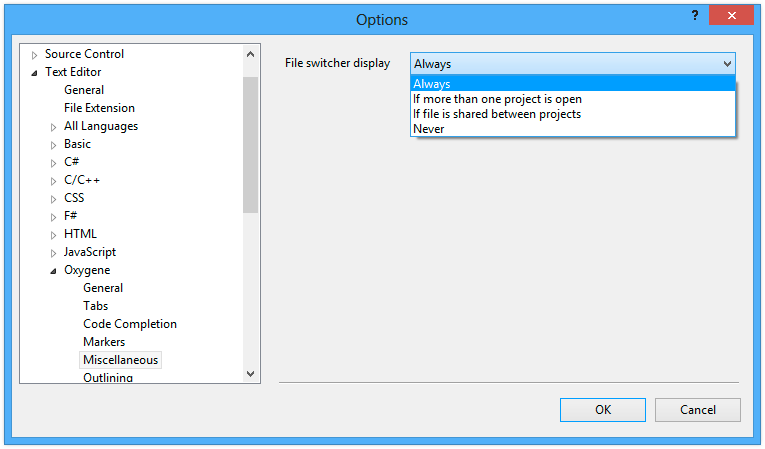

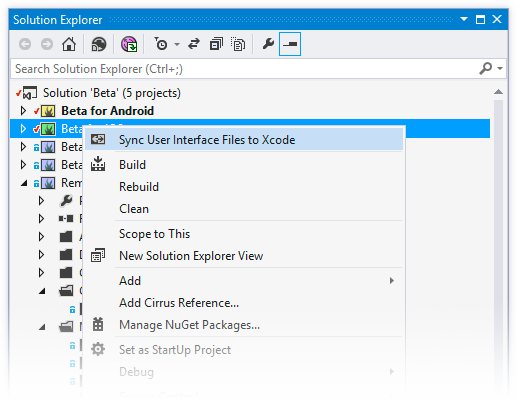

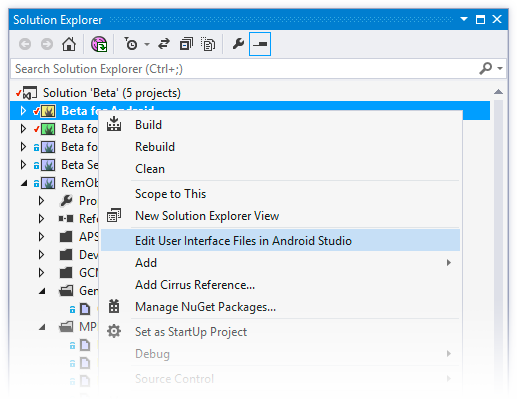

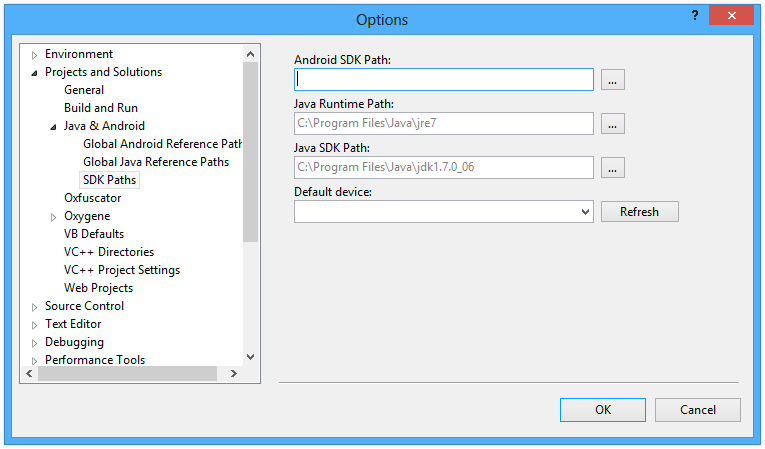

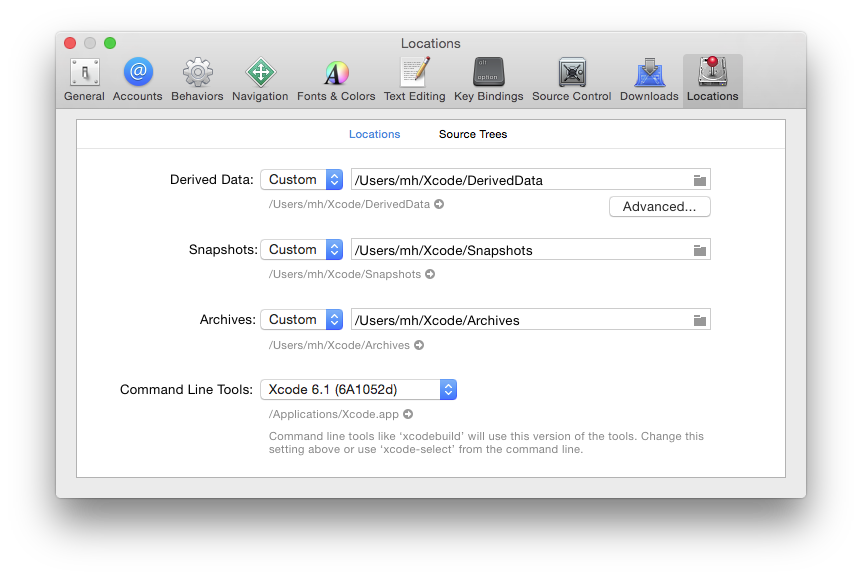

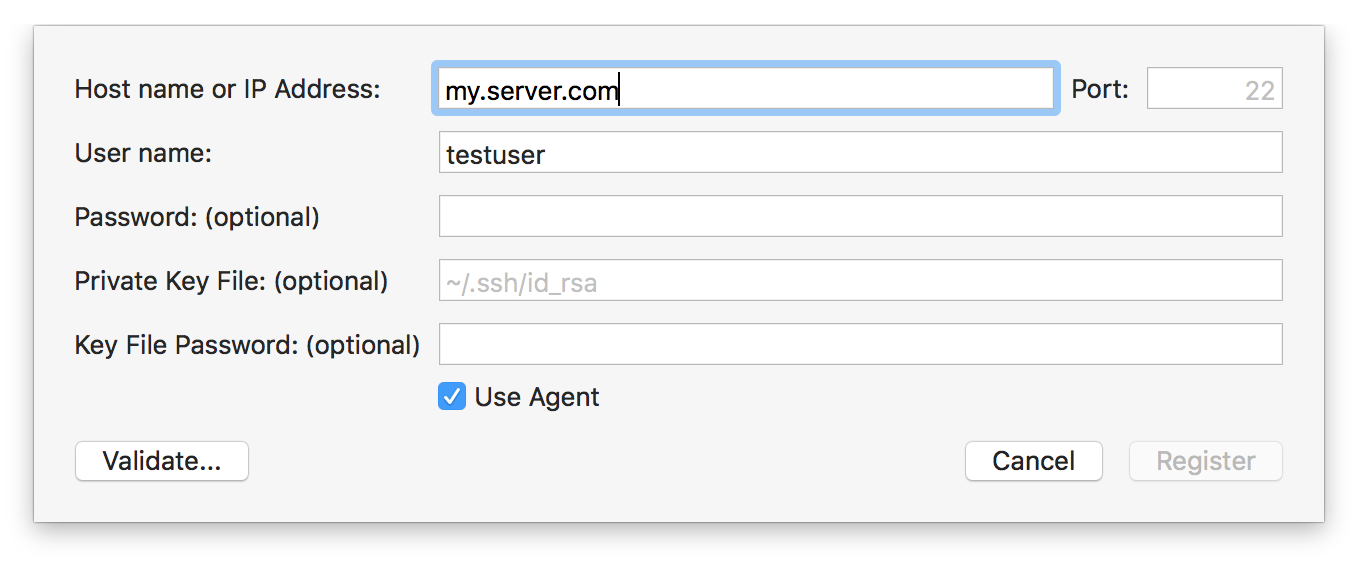

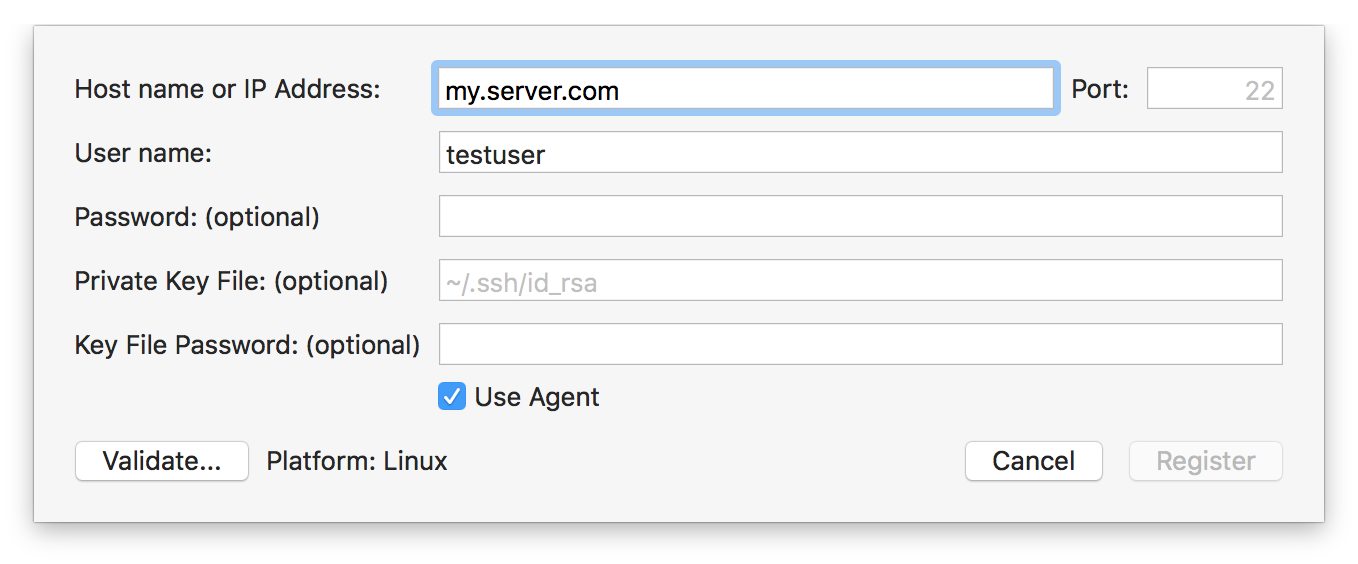

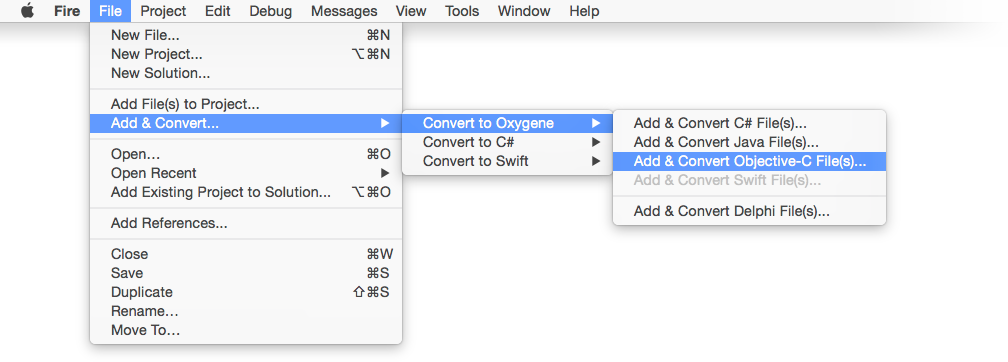

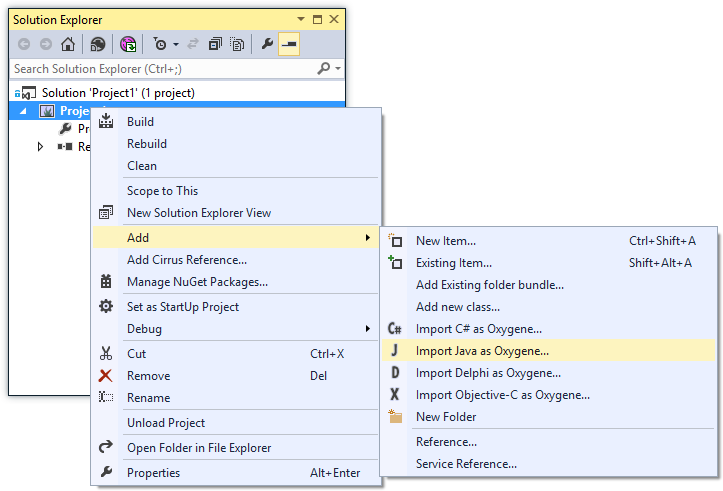

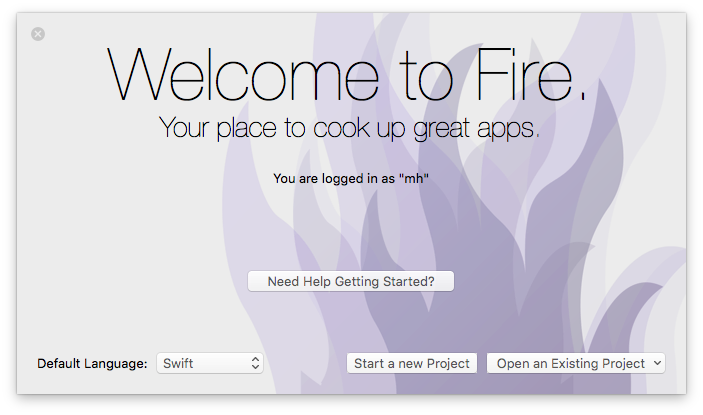

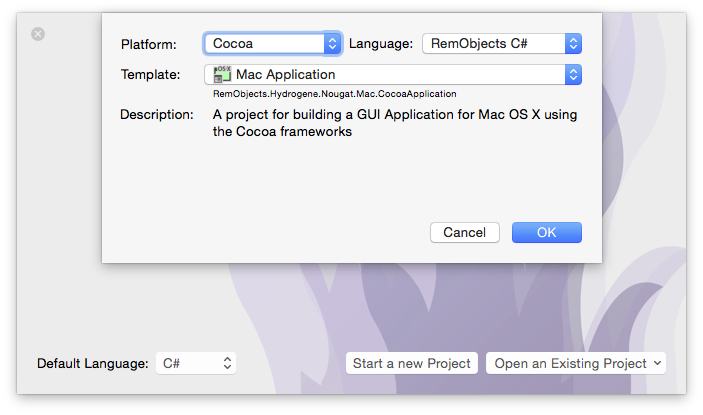

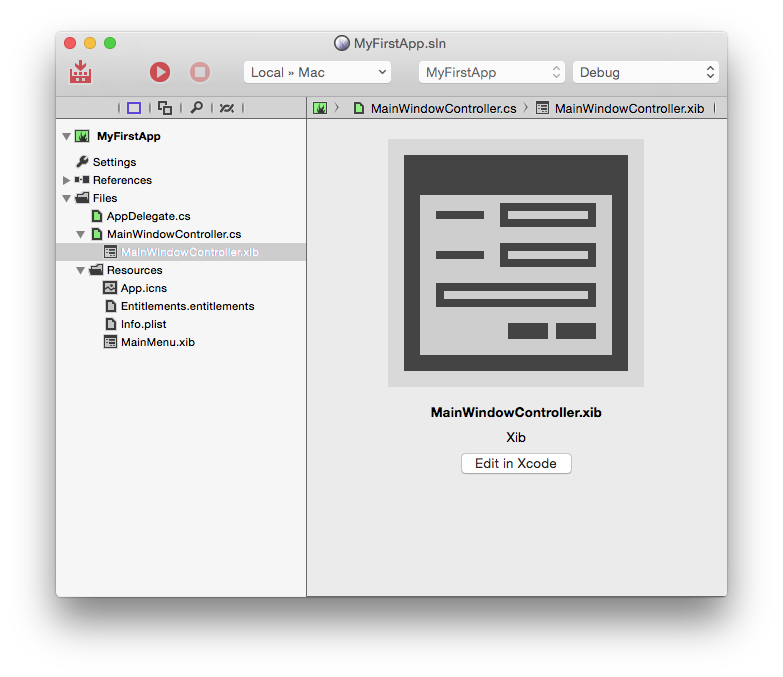

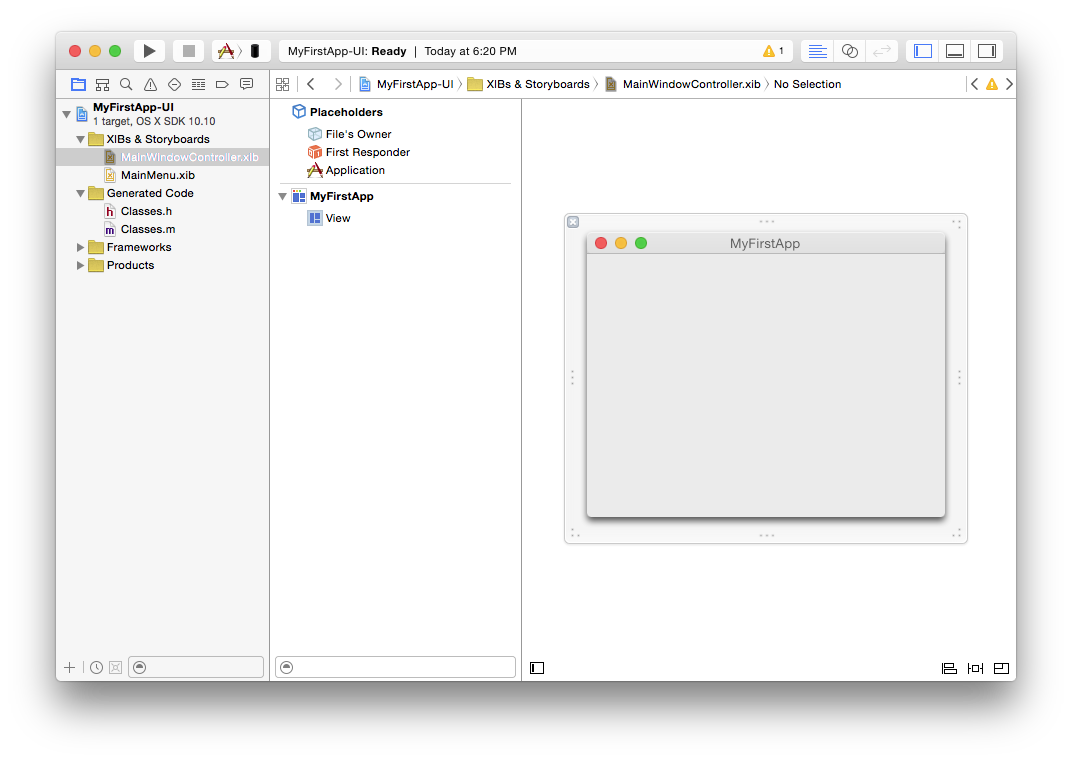

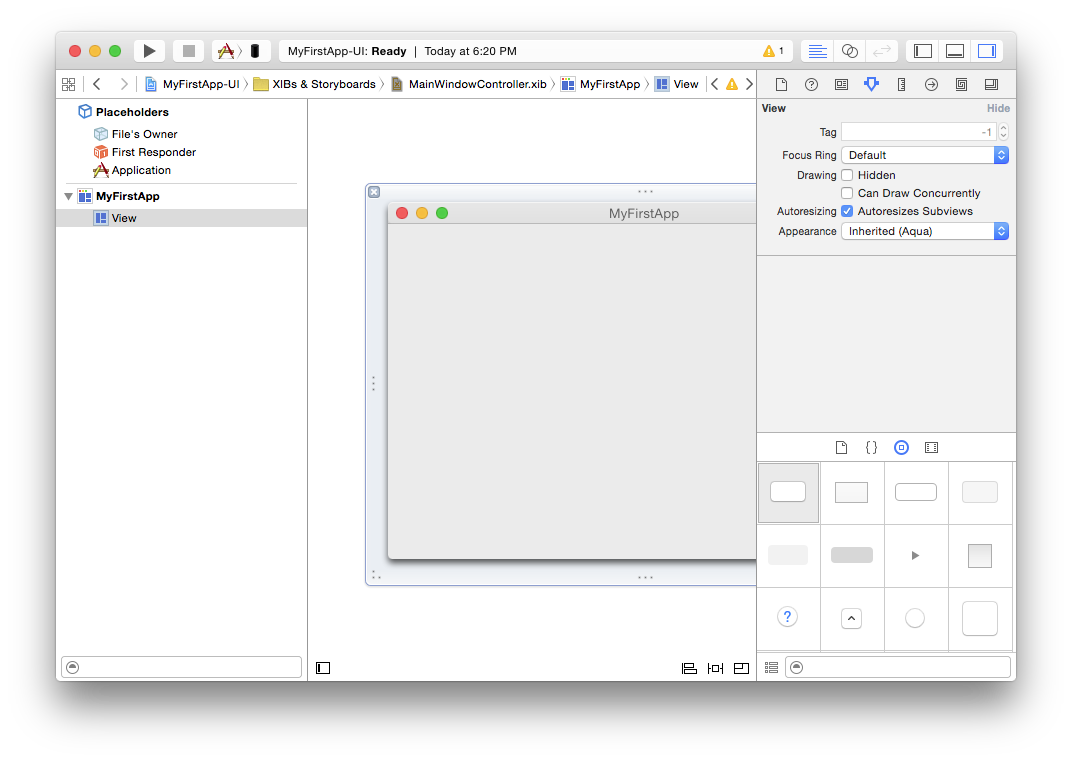

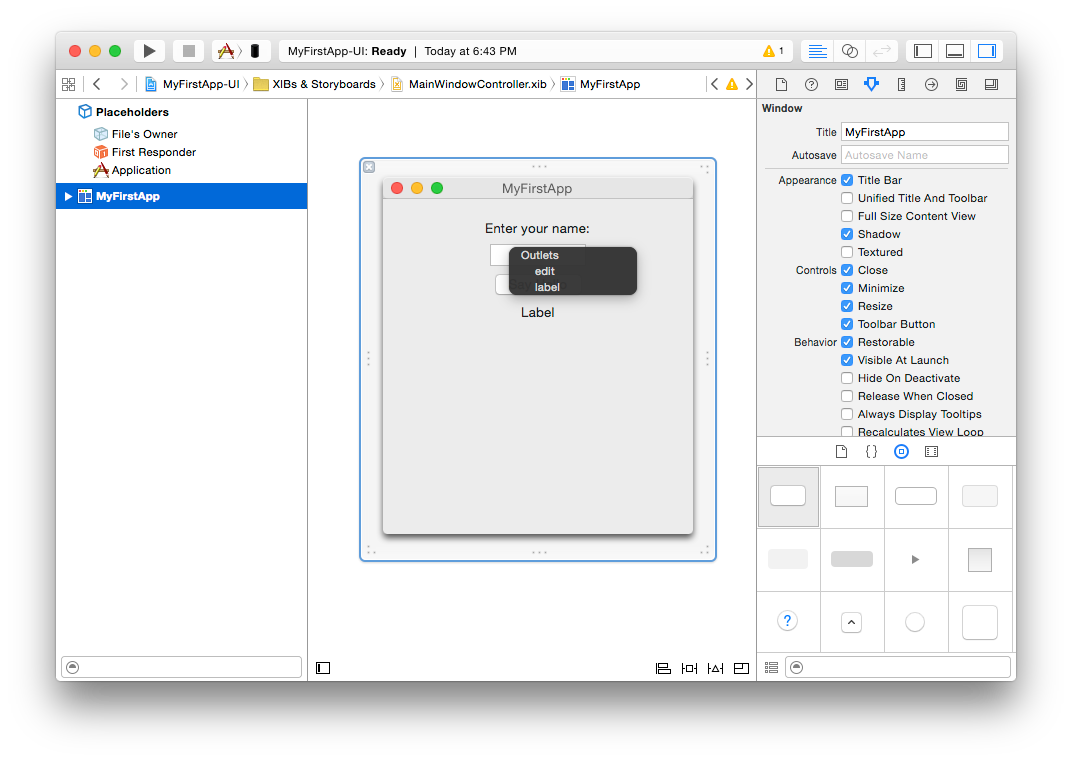

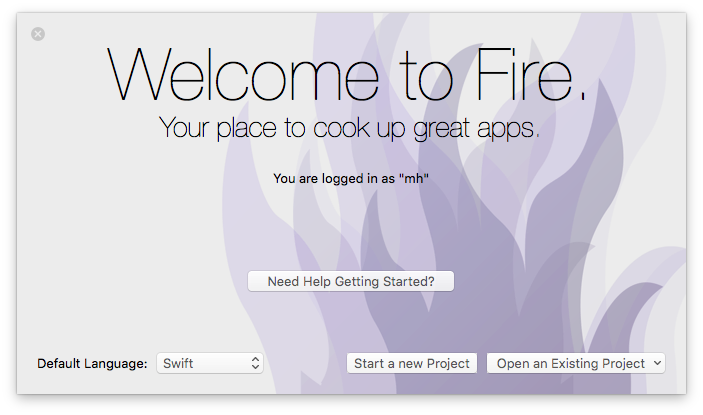

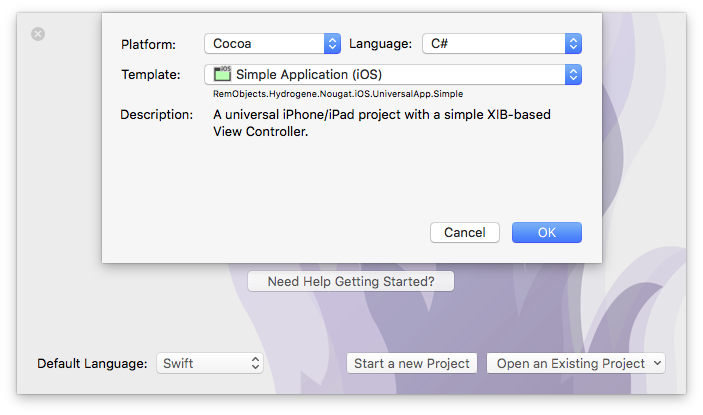

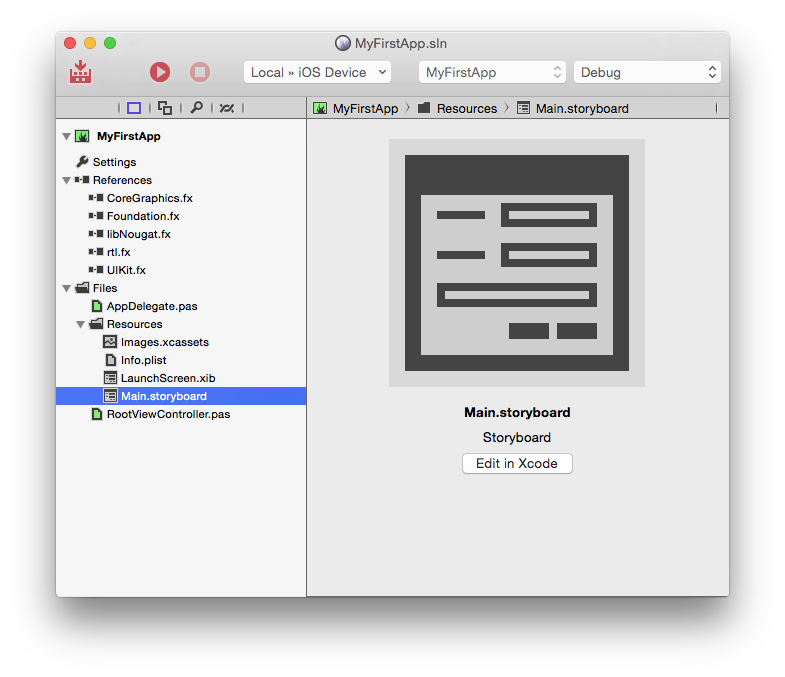

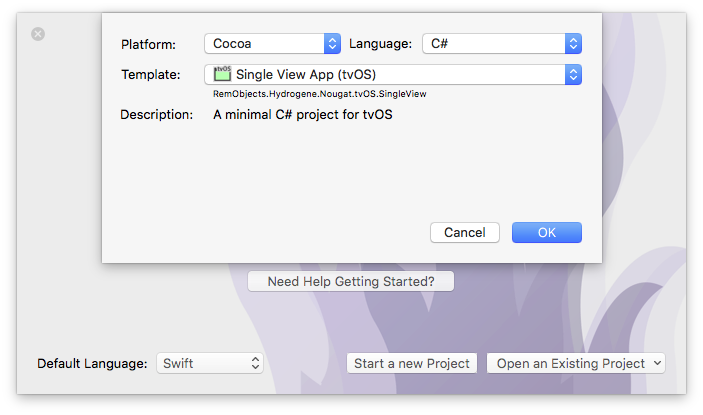

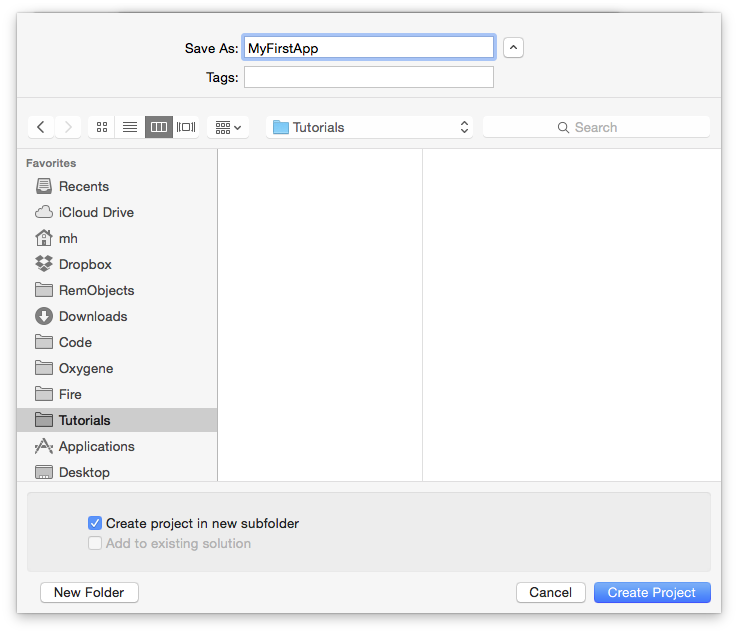

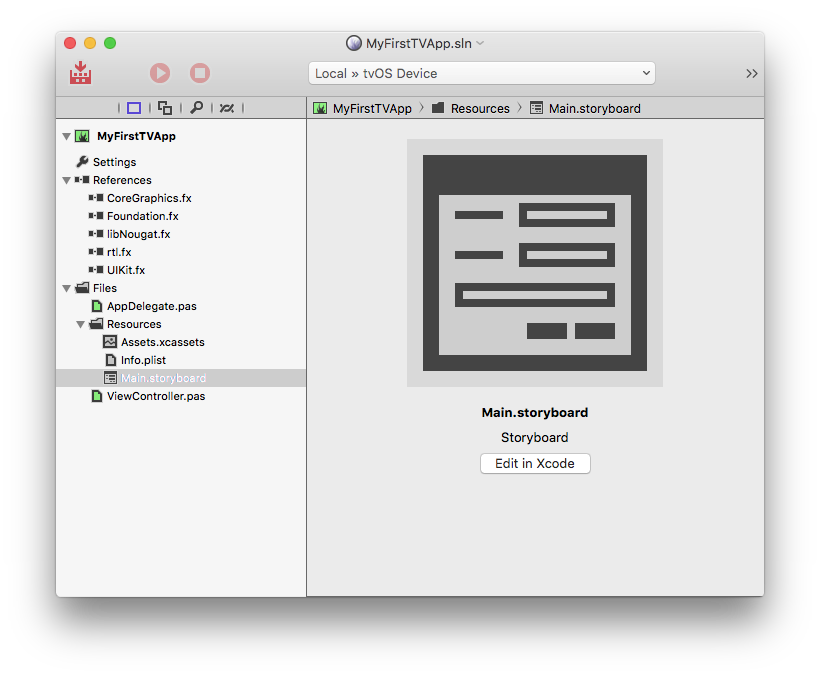

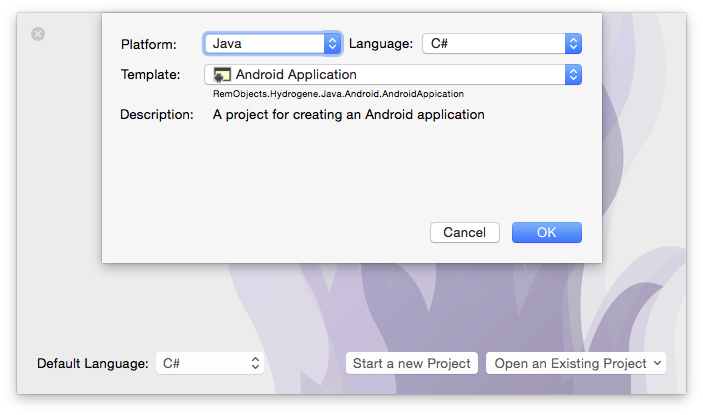

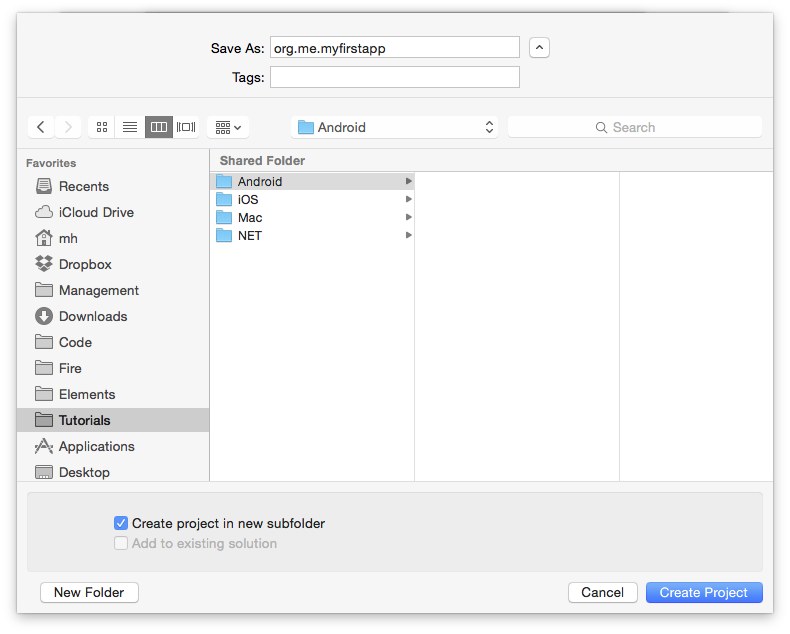

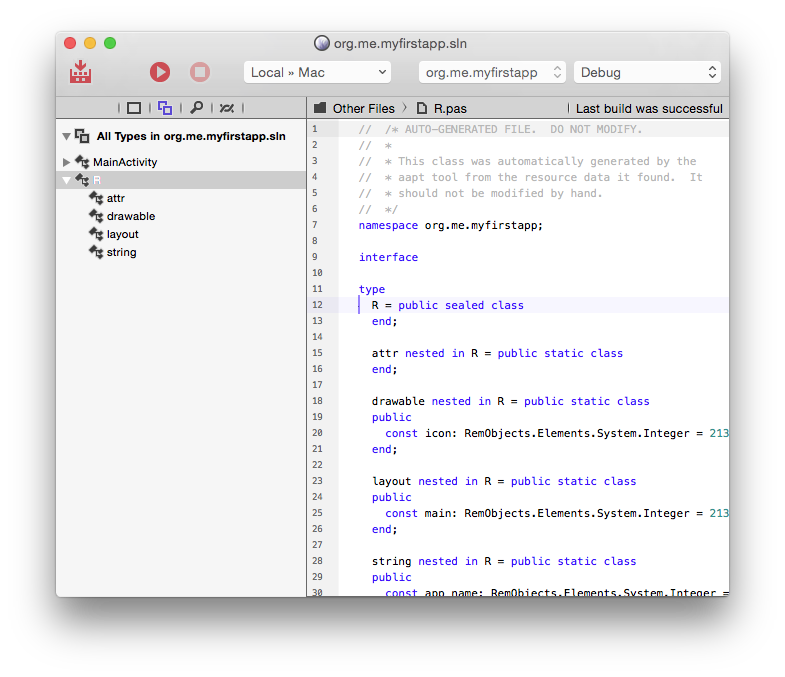

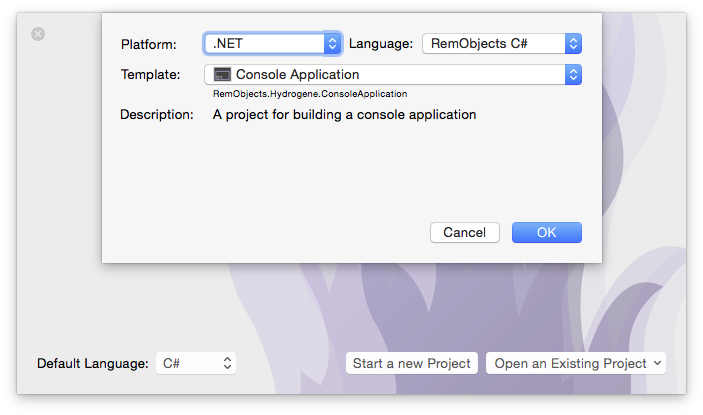

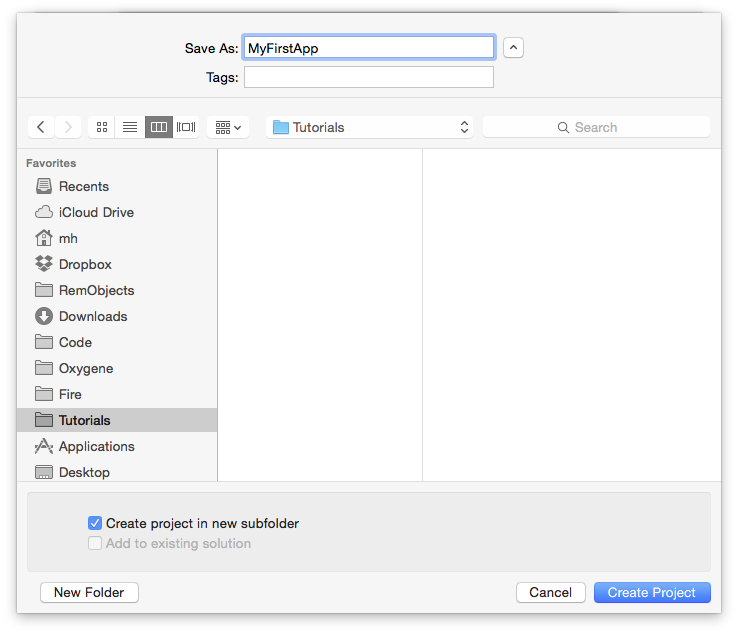

Then there are sections about working in the two IDEs for Elements, Visual Studio on Windows, our own Fire for Mac and Water for Windows. We also cover more general Tools and Technologies relevant to both.

-

The Compiler, EBuild and Tools sections dive deeper into the underlying compiler and build chain technolofies, and related tools.

-

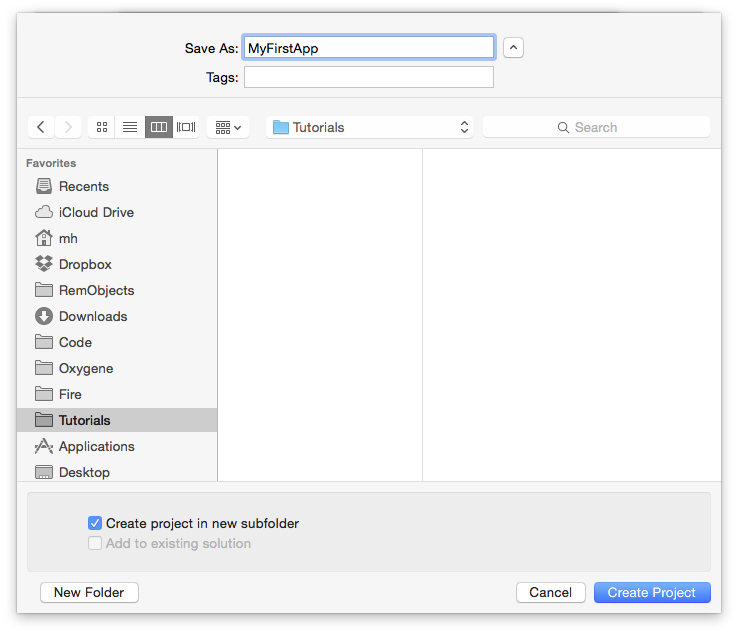

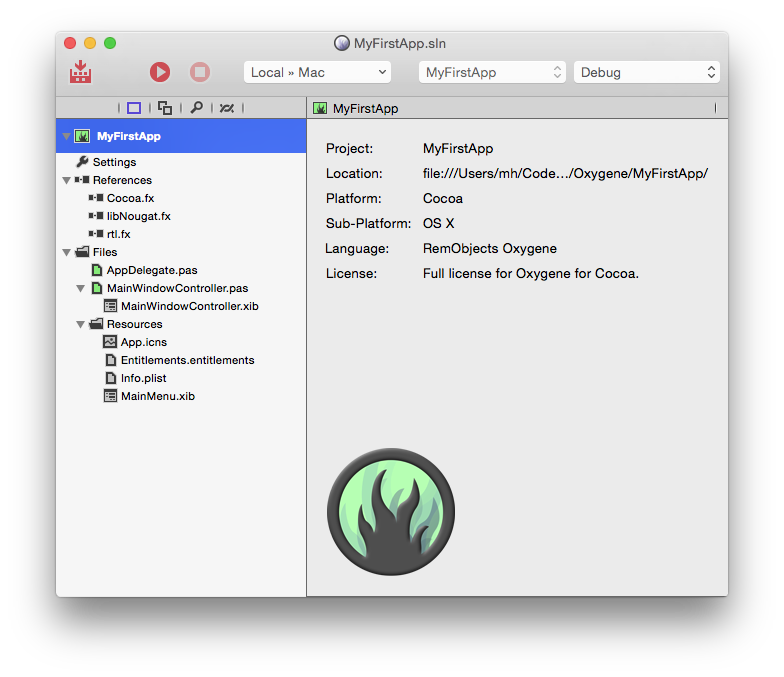

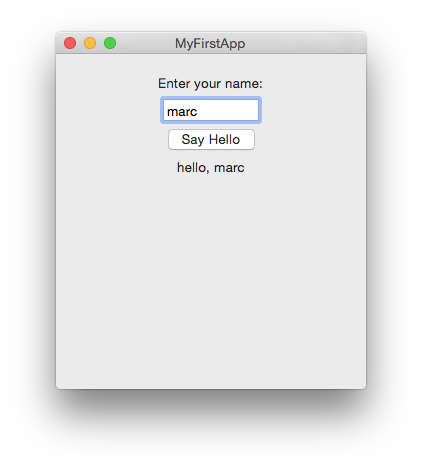

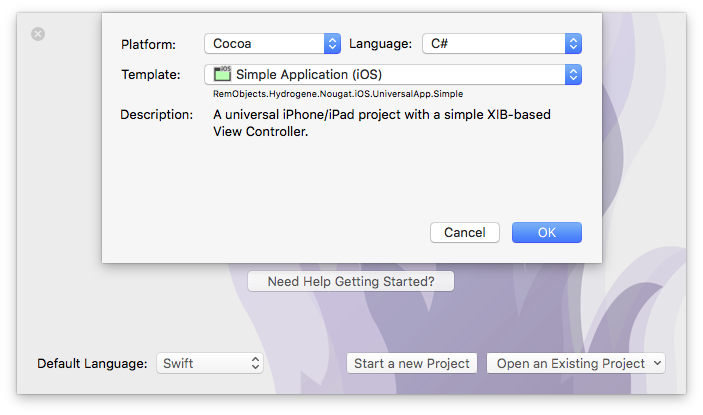

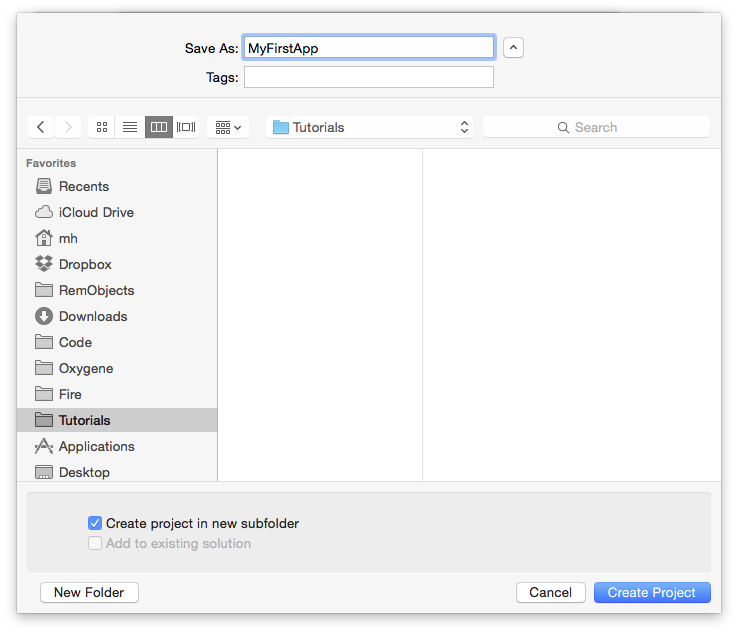

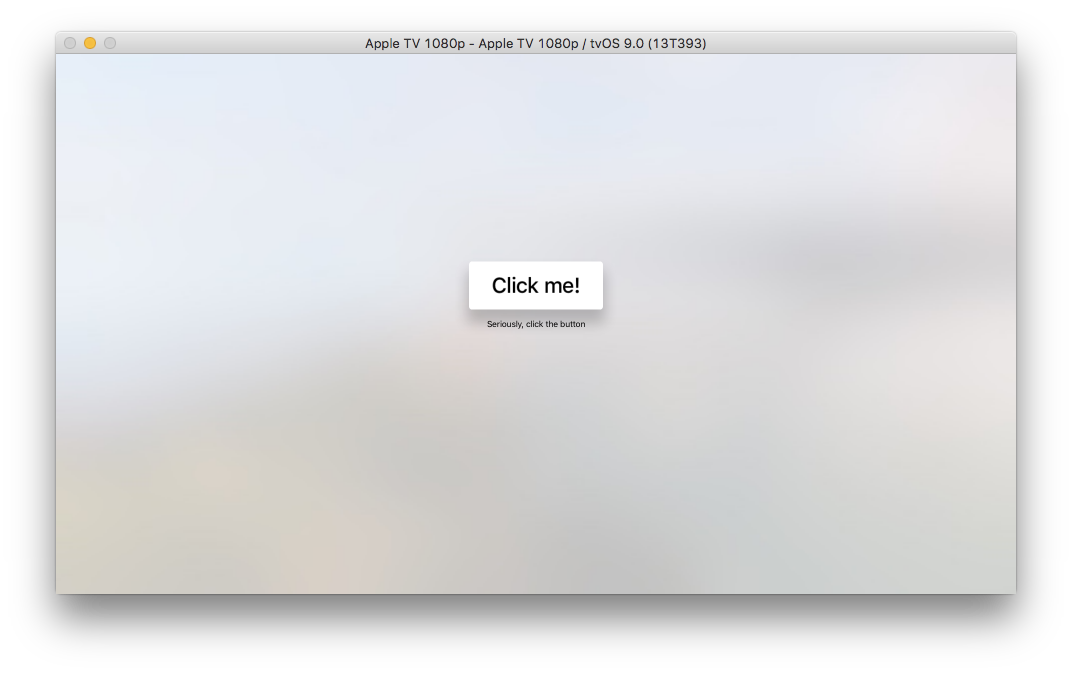

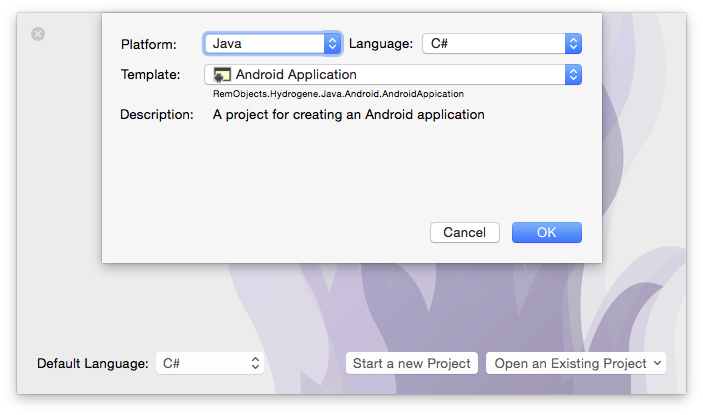

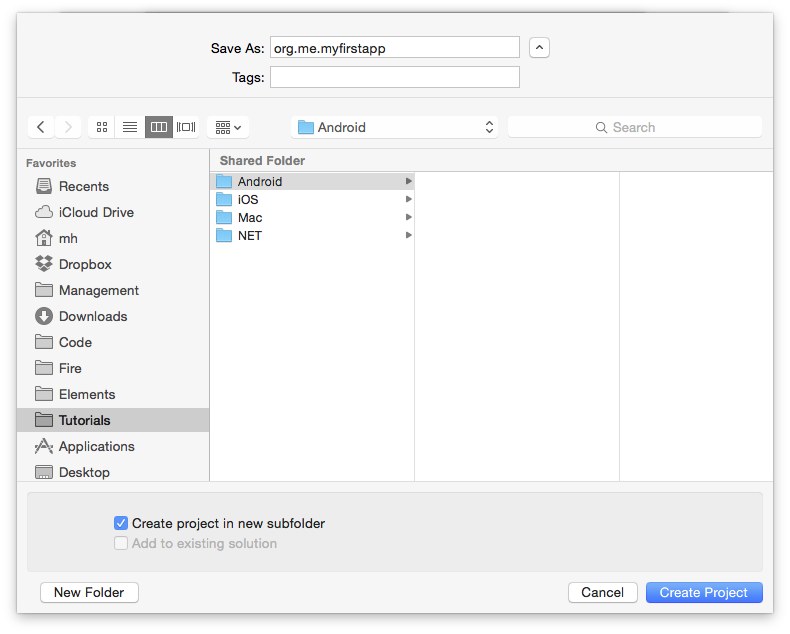

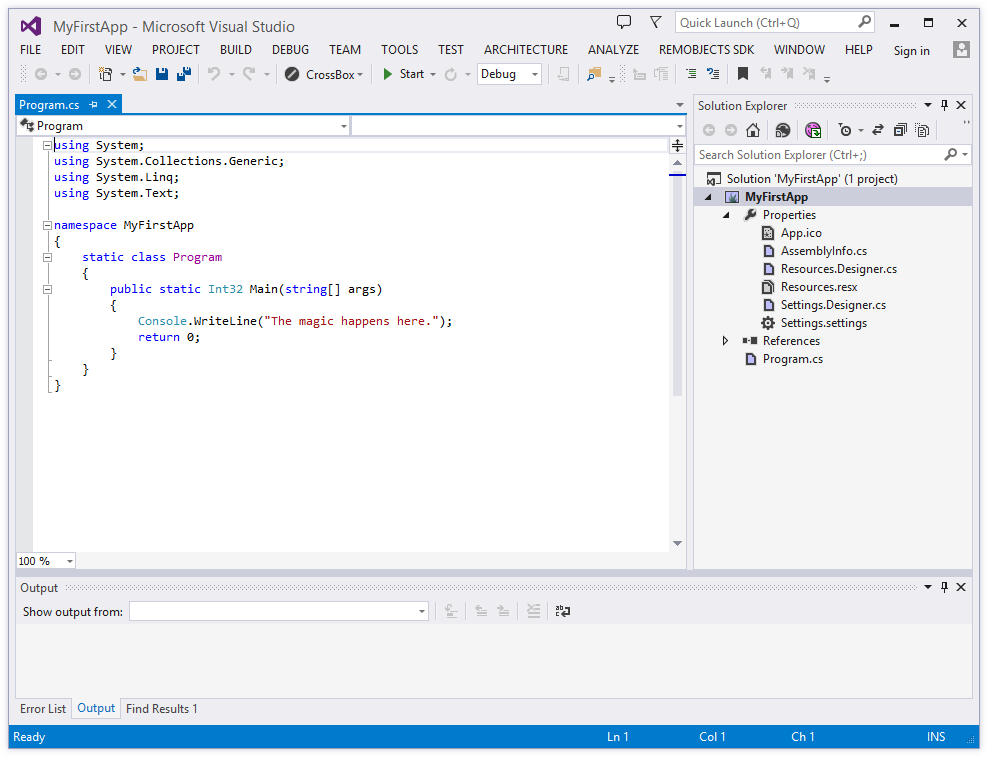

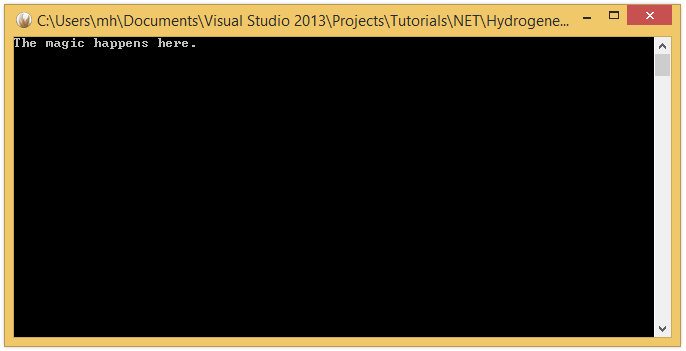

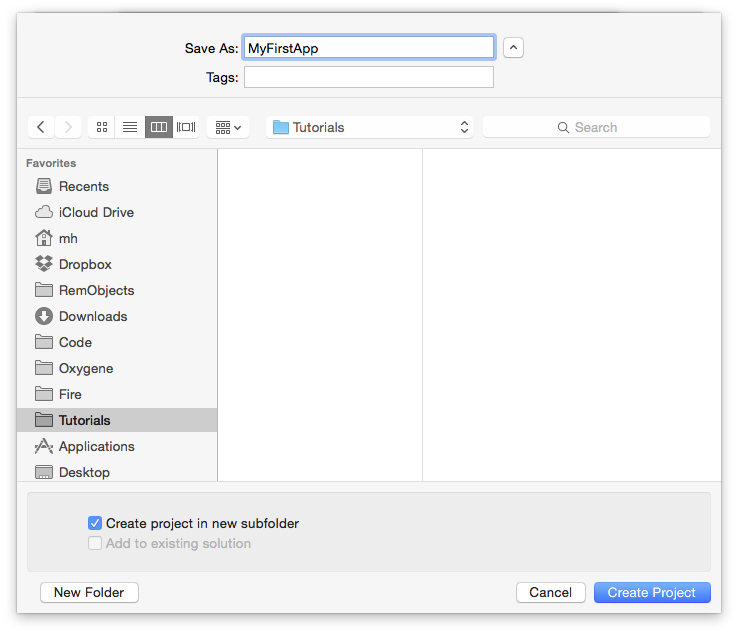

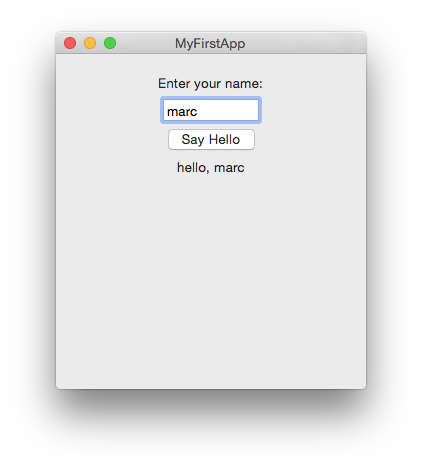

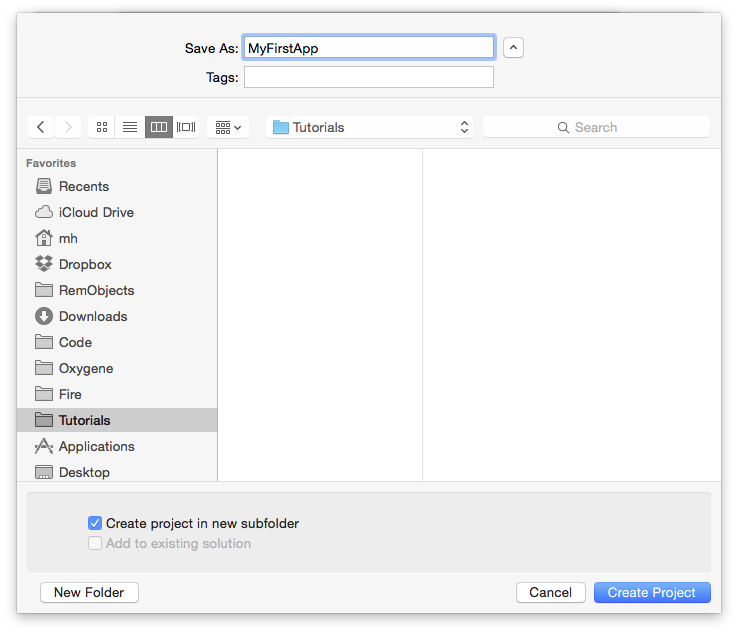

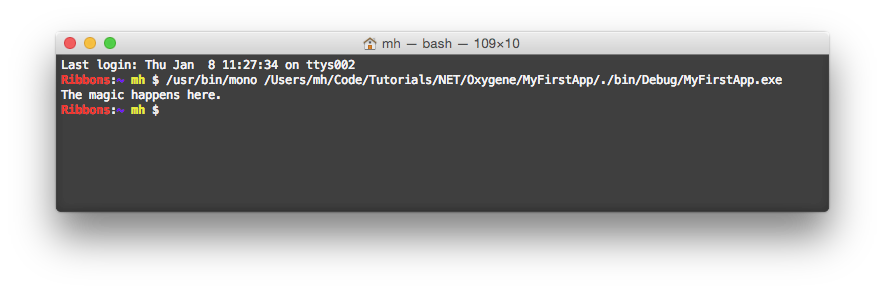

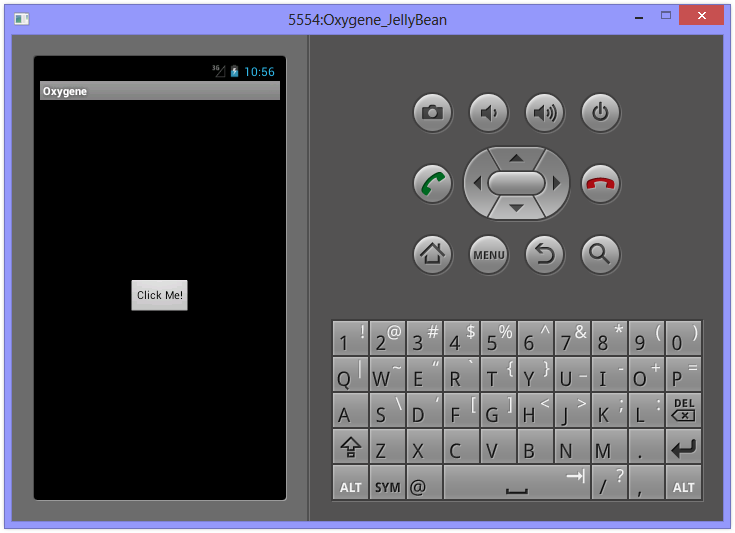

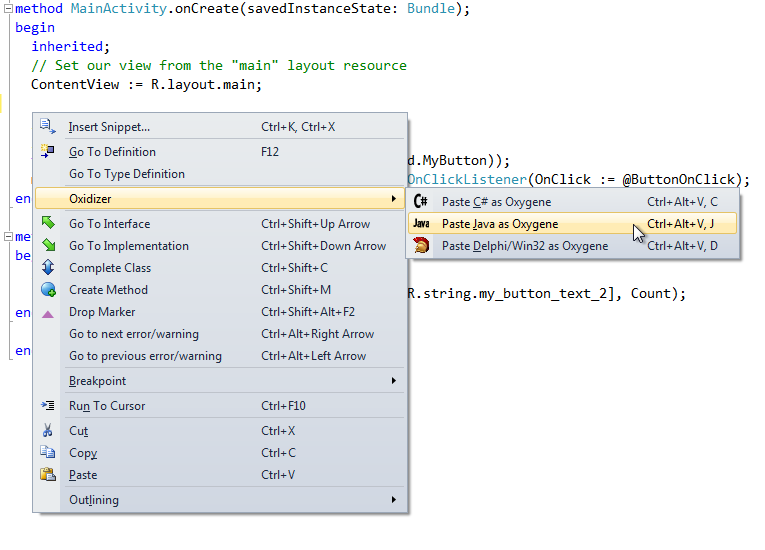

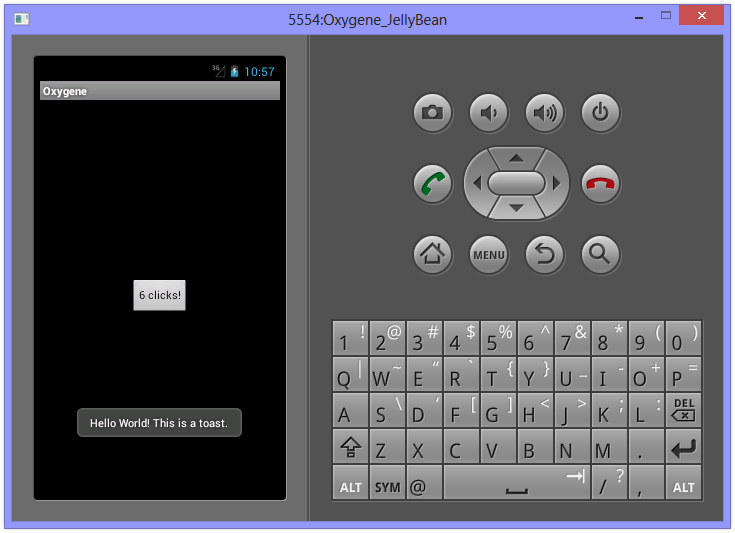

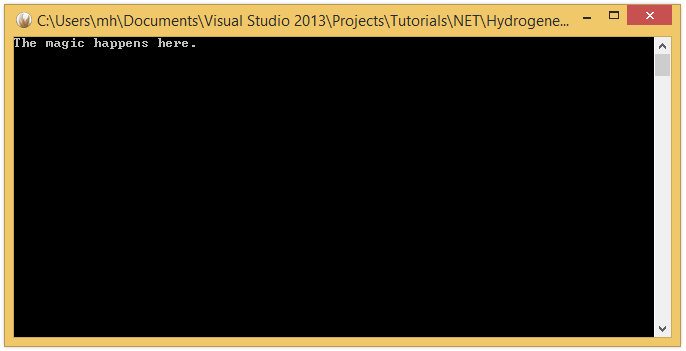

There are a range of Tutorials on various topics, from getting to learn the language(s) to diving into creating your first app for a given platform. We'll be adding more of these over time.

-

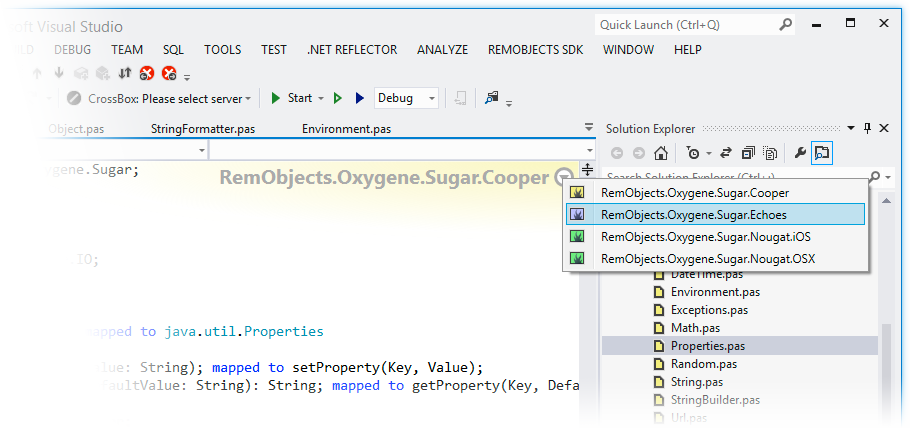

Finally, there's the API Reference, where we document the handful of optional libraries that ship with Elements, such as Sugar, as well as the System Functions, and Standard Types.

More Resources

Outside of this documentation site, we think you will find these links useful:

Support & Discussion Forums

Other Useful Links

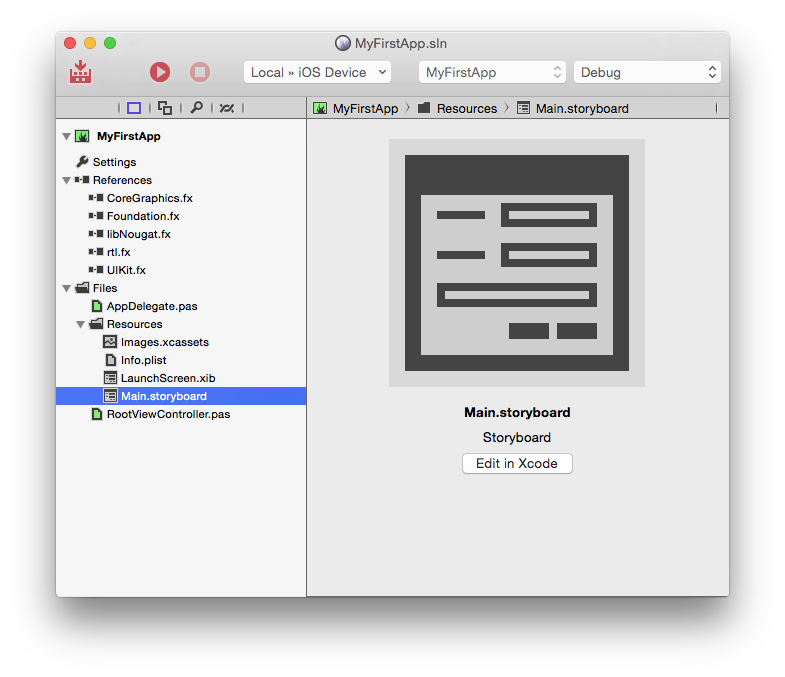

RemObjects Oxygene

One of six languages in the Elements family, Oxygene is based on the foundation of Object Pascal but – in contrast to our C# and Swift implementations – we have been aggressively driving Object Pascal forward over the past ten years, significantly improving the language year after year after year.

As a result, Oxygene is a language that is decidedly Object Pascal, and will make you feel immediately at home if you come from a Delphi or Object Pascal background, but at the same time is a modern language for the 21st century, with many, many advanced language features that take your productivity to the next level.

The Oxygene compiler will continue to evolve rapidly, with new features coming to the language with almost every release.

Learn More

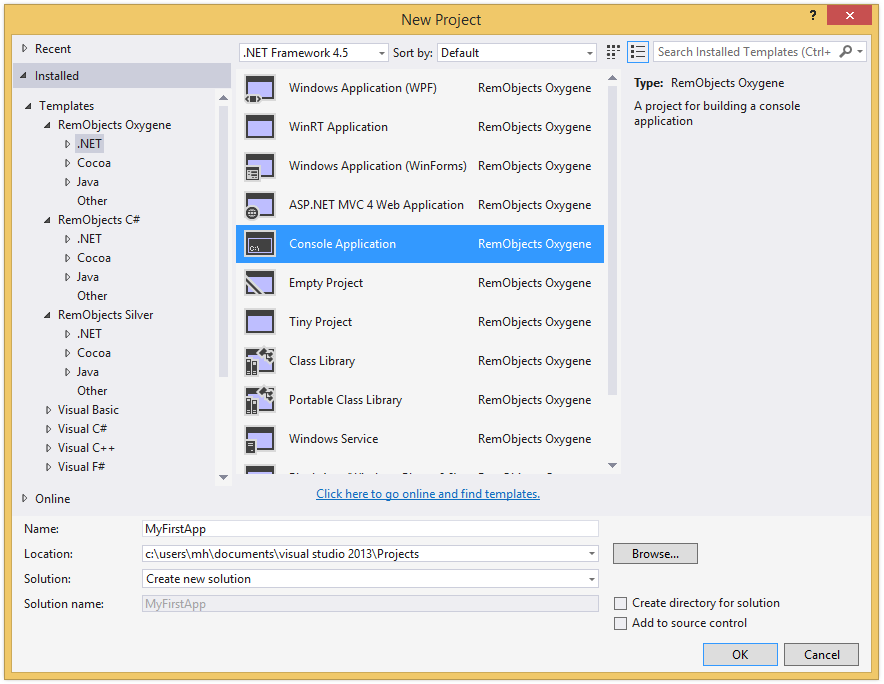

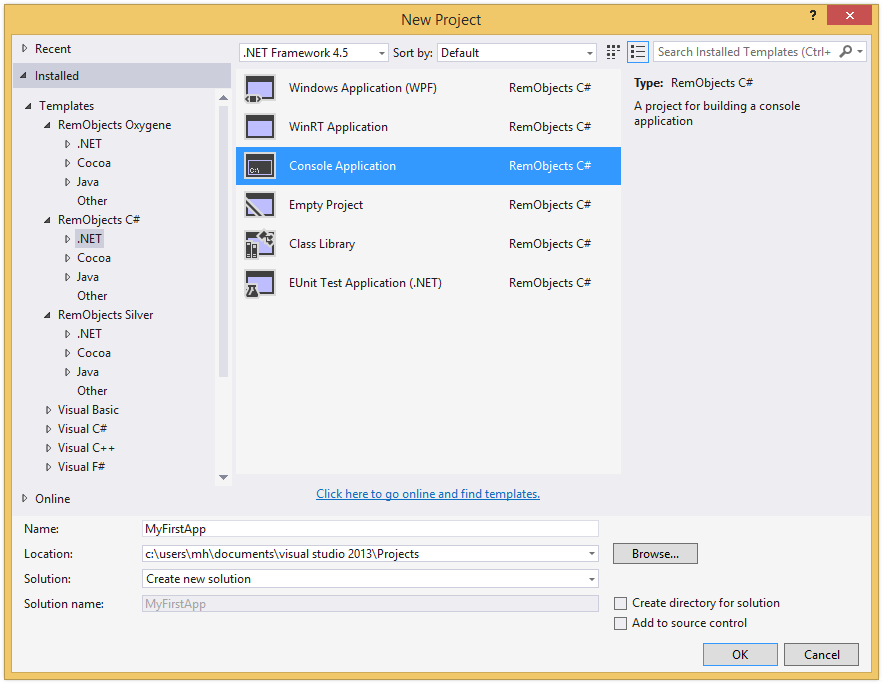

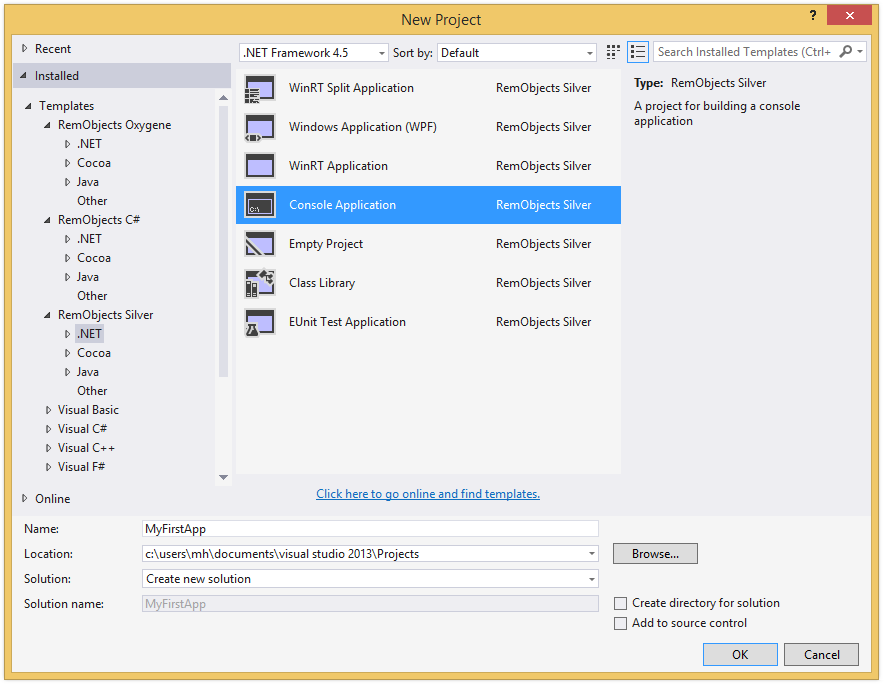

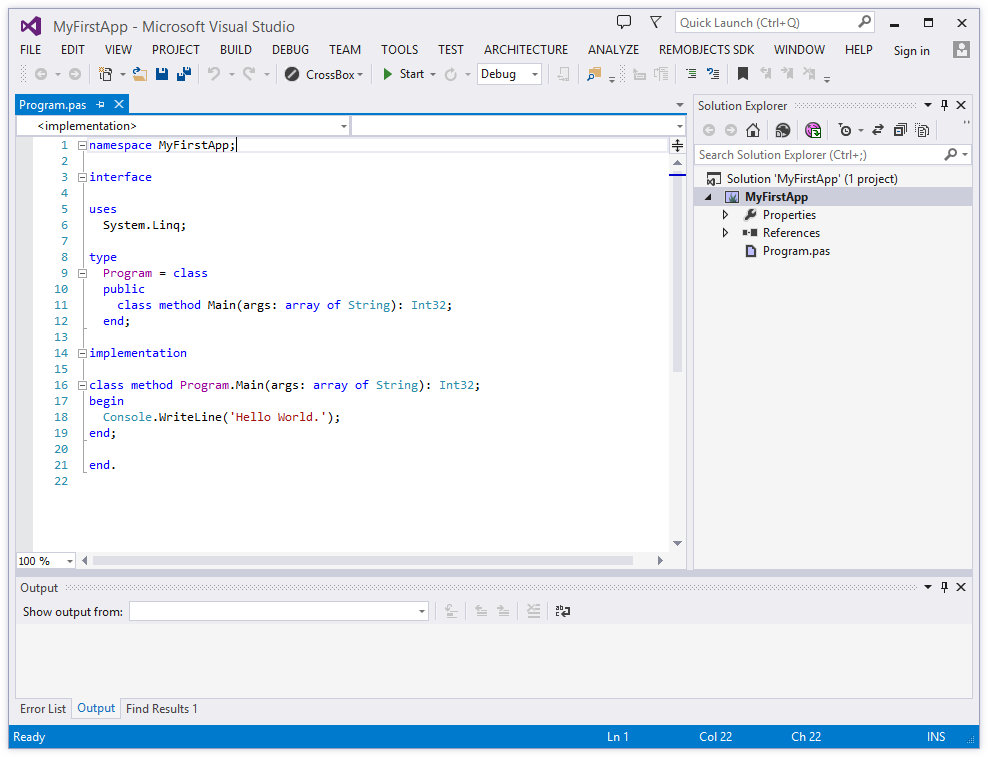

Getting Started

Support

The Language

Oxygene is a powerful general purpose programming language, designed to let developers create all imaginable kinds of projects on a wide variety of platforms.

To achieve this, it provides a combination of language features that ease the development processes — from basic Object Oriented language concepts found in most modern languages (such as the concept of classes with methods, properties and events) to sophisticated specialized language features that enable and ease specific development tasks (such as creating safe, multi-threaded applications) — many of them unique to Oxygene.

All of the provided features are based on the foundation of Object Pascal and stay true to the language design paradigms that make Pascal great, readable and discoverable.

Like all the Elements languages, Oxygene comes with support for four distinct Platforms: .NET, Cocoa (the platform behind macOS and iOS), Android, Java, Windows, Linux and WebAssembly.

Object-Oriented Background

At heart, Oxygene is a purely object oriented language, meaning that all code is contained in Classes and other Types, which can expose Members such as Methods, Properties and Events.

On the Cocoa and Island platforms, additional allowances are made for accessing non-object-oriented platform APIs, like the C runtime functions.

Keywords

Object Pascal, just like Pascal before it, is easily readable by both humans and computers. This is by design, and is accomplished through the use of English words instead of symbols to define most of the language. For example, blocks of code are delimited with the begin and end keywords in sharp contrast to the C family of languages (which includes Elements' three other languages, C#, Swift and Java), which use the curly braces { & } to accomplish the same task.

This extends through all aspects of the language, aiming for a "read as plain english" approach for features, where feasible. For example, where C#, Swift and Java use a cryptic ? to mark nullability, Oxygene uses the nullable keyword, and where C-style languages use & and |, Oxygene relies on readable operator names such as and and or.

Case & Naming Conventions

The language itself, is not case sensitive, but it is case preserving, and Oxygene – unlike older Pascal dialects – is case-preserving, and will emit warnings (and suggest automatic fixes) if identifier case is used inconsistently. However two identifiers that only differ in case are not permissable.

By convention, reserved words (Keywords) in Pascal are written in lowercase, but the compiler will permit and recognize uppercase and mixed case keywords, as well.

In older Pascal dialects, like Turbo Pascal and Delphi, it was convention to prefix class names with a T; this is no longer the case with Oxygene (although Oxygene does preserve the convention of prefixing interface types with I to distinguish them from regular classes).

Aside from that, there are no formal naming or case conventions in Oxygene. Typically, the naming and case follows the conventions of the underlying framework – which is a mixture of "PascalCase" and "camelCase", depending on the platform. For cross-platform or non-platform-specific code, "PascalCase" is recommended.

Structured Programming

One of the things that differentiated Pascal when it was introduced is that it was designed to be a structured programming language. This translates into two things: The first is that it was designed to support complex data structures like lists and records. The second is that it supports various control structures like for, case, while, repeat, etc., as well as procedures & functions, without the need to rely on goto or jump like unstructured languages (in fact, Oxygene drops the goto keyword that is available in legacy Pascal altogether).

Classes

Classes defined in Oxygene belong to a class hierarchy rooted in a base

object called Object (which maps to System.Object in .NET, java.Object in Java and Foundation.NSObject in Cocoa). Each class declaration either explicitly specifies an ancestor class, or will implicitly descend from Object.

Classes inherit all members from their ancestor classes and are thus said to extend that class – commonly by adding additional members or providing overridden implementations of select members defined in the ancestors.

Classes can be marked as abstract to indicate that they need to be extended in order to be instantiated and used. They can also be marked as sealed to indicate that they cannot be extended further.

Methods and Other Class Members

Methods define the execution flow of applications written in Oxygene. Each method consists of a list of Statements that get executed in turn when a method is called. Statement types include loops, conditional statements, logical and mathematical expressions or calculations, or calls to other members in the same or different classes.

Pascal originally differentiated between procedures and functions (the latter being a procedure that returned a result) by using different identifiers, and early Object Pascal implementations carried this legacy over when introducing object-orientation, although this terminology no longer seemed accurate. The Oxygene language no longer differentiates the two by keyword and consistently uses the method keyword for, well, methods (although the procedure and function keywords remain available as compatibility option).

Properties provide controlled access to data contained within a class instance, and can be read/write, read-only or (in rare cases) write-only. Events allow objects to retain references to call-back methods on other objects that they can call, when necessary.

While methods and properties (next to private fields or instance variables) are the two core kinds of members that make up classes, other more advanced member kinds are supported as well, such as Events, Invariants or Nested Types.

Class members can be declared at different visibility levels to control how they can be accessed from outside the class they are in. The three most important levels include private visibility, which restricts access to the class itself; protected visibility, which allows access only from descendants of the class; and public, which allows any code in the project to access the member (but several more fine-grained visibility levels are available as well).

With Oxygene being a truly object oriented language, all code you write will generally be contained in classes (or encoded in other types), with no "global" functions or other elements.

Other Types

Classes are the core of any object oriented program, but they are complemented by a range of other kinds of types that enrich the language:

- Interfaces are abstract types that define a set of shared methods, events or properties that can be implemented by one or more (otherwise unrelated) classes. Classes that implement the same interface can be accessed in identical ways by code that might otherwise be unaware of the concrete class types. This makes it easy to write code that can act on "similar" classes, without those classes having to share a common ancestor. Many developers consider Interfaces to be a cleaner replacement for multiple inheritance, which Oxygene intentionally does not support.

- Records behave similar to classes, but are stack-based rather than heap-based. They can also contain fields, methods and properties, like classes do.

- Enums are simple types that provide a collection of named values.

- Standard types include Simple Types such as integers, floats and strings, as well as more complex types such as arrays, sets and Modified Types.

Advanced Concepts

The Oxygene language contains numerous advanced language concepts, many of which are common to most modern languages, some of which are inspired by other less mainstream languages and yet others which are entirely unique to Oxygene.

-

Class Contracts allow optional code to

be included with class definitions and method implementations to

enforce class consistency. These contracts can be enforced at

runtime, leading to more precise and timely error reporting when

constraints are not met as expected.

-

Sequences and Query

Expressions are deeply integrated

into the language to work with various types of lists of objects, iterate them, and

perform advanced queries (such as filtering, sorting or combining)

on them.

-

Several language constructs for

Parallelism are integrated into the

language to make it easy to write multi-threaded code that scales

well from single-CPU to multi-core computers. These include

Parallel Loops, Future

Types, Asynchronous Expressions and more.

-

Duck Typing and the

Dynamic type provide more weakly typed

language constructs akin to more dynamic languages such as

Objective-C or JavaScript, where needed.

-

Generics provide for classes to be

customizable to work with different types without having to write

separate implementations. For example, a generic list class can be

implemented (or indeed provided by the underlying frameworks) and

then be instantiated to be a list of a very specific concrete type.

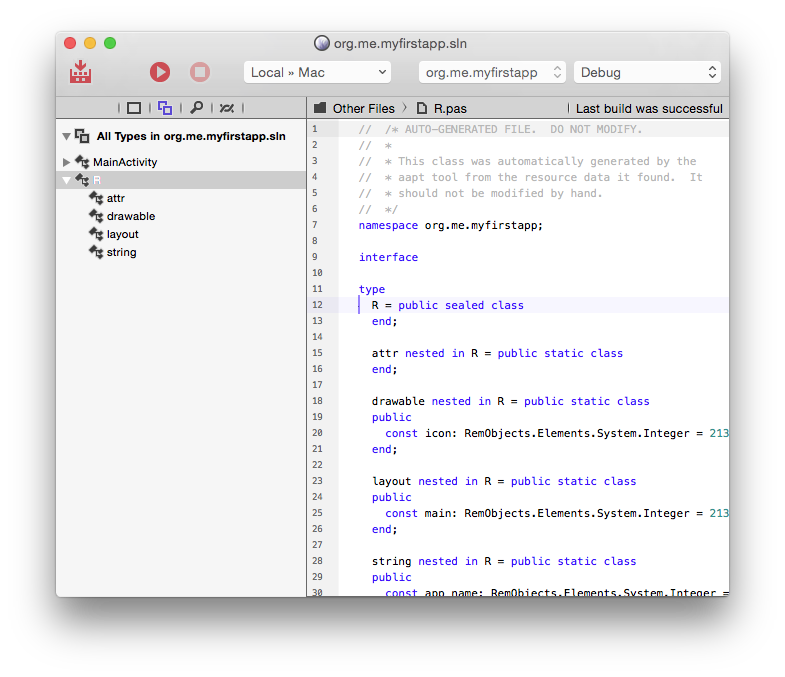

File Structure

The Code File Structure topic explains how an Oxygene .pas file is structured and how the types and code are laid out, within.

The language aims at being ~99% identical and compatible between the platforms, but there are a few subtle differences owed to the underlying platforms, covered in the Platform Differences Overview.

With very few minor exceptions dictated by the underlying runtimes, the Oxygene language is designed to be virtually the same across all three supported development environments: .NET, Cocoa and Java.

This means that the language syntax is 99% identical on all three platforms, and that a lot of the code you write can be shared between the platforms (as long as it does not use platform-specific APIs, of course), and that all the language knowledge and experience you build up using Oxygene can be applied to all platforms. Using the same language also makes it easier and more intuitive to work on apps for the different platforms, without having to "switch gears" between – say – C#, Java and Objective-C all the time.

And the open source Elements RTL base library makes sharing

code between projects across all three runtimes even easier.

Code File Structure

All the code in an Oxygene project is divided into code files. A code file can contain a single class (often recommended) or more than one class or auxiliary type. By default, all types defined in a code file are members of the same Namespace; that namespace is specified at the top of the file via the namespace keyword.

Multiple files, or even multiple projects, can of course contribute to the same namespace – in fact, for small to medium projects, it will be common for all types to be situated in the project's one and only namespace. You can read more about this in the Namespace and Uses topic.

Classic Interface/Implementation Split

After the namespace declaration, traditionally each code file is divided into two parts, the interface and the implementation section.

The interface section is similar to the separate header file found in many languages from the C family. It defines the public interface of the code found in that code file, including Types and their Members' signatures.

The implementation section contains the actual, well, implementation. This includes code that implements the classes defined in the interface section. The implementation section provides a level of encapsulation of the complexity of the implemented code.

The advantage of this separation is that it provides a convenient human- and computer-readable summary of the APIs in the code file. This speeds up human navigation and comprehension of the types when consuming the classes elsewhere.

Types can be defined in the implementation section as well, but that makes them private to the file and inaccessible from rest of the project (similar to unit level Visibility).

Both the interface and the implementation sections may include a uses clause that can bring additional namespaces "into scope". uses clauses are covered in more detail in the Namespace and Uses topic

namespace LutherCorp.WorldDomination;

interface

uses

LutherCorp.DominationTools;

type

WorldDominator = class

public

method AchieveWorldDomination;

end;

implementation

method WorldDominator.AchieveWorldDomination;

begin

// Do something

end;

end.

The end of every code file is indicated with the end keyword followed by a period. Everything beyond that point will be ignored.

Unified Class Syntax

Oxygene also supports declaring and implementing types in a more unified syntax, where the body of a Method can be directly attached to its declaration. This provides a code layout that is more similar to modern C derivatives such C# or Swift.

There are advantages and drawbacks to both code-styles, and Oxygene does not enforce a struct choice; the two styles can me mixed, with some methods having their implementation at the top, and others being deferred in classic style to the implementation section.

If the implementation section of a file contains no code, both the interface and the implementation keywords can be omitted:

namespace LutherCorp.WorldDomination;

uses

LutherCorp.DominationTools;

type

WorldDominator = class

public

method AchieveWorldDomination;

begin

// Do something end;

end;

end;

end.

Again the end of the code file is indicated with the end keyword followed by a period, and everything beyond that point will be ignored.

See Also

Code Style Guide

This document aims at providing a style guide for how to structure and format code written in the Oxygene language.

The guidelines laid out here are by no means mandatory, and an Oxygene developer may choose to adopt all, some, or none of the guidelines provided. However, our IDEs are designed to work best when code follows the guidelines, and we try to adhere to these guidelines for all code in Elements and its surrounding libraries, ourselves. Code submissions and pull requests for the open-source Elements libraries must meet these guidelines, as well.

Keywords

- All keywords shall be used in their lowercase form.

- Use the

method keyword instead of procedure or function.

- Use the

block keyword instead of delegate, method, procedure or function, for Block declarations.

- Use the

namespace keyword instead of unit, at the beginning of a file.

Casing & Naming Conventions

While Oxygene is case insensitive, it by default preserves case and warns when identifiers differ in case between their declaration and use. These warnings should be avoided, and care should be taken to use the proper case. We recommend enabling Auto-Fix for case issues.

- Again, all keywords shoul;d be lower case

- Type names should use a PascalCase form, without the

T prefix common in other Pascal dialects. Upper-case abbreviation prefixes should also be avoided where posisble. Use Namespaces instead.

- Interface types should use an

I prefix, followed by a PascalCased name.

- Public type Members (that should exclude fields) should use PascalCase names unless the platform conventions for a single-platform project suggest otherwise.

-

Variables usually use a single-letter lower-case prefix, followed by a PascalCase name:

- Fields should use an

f prefix: fName

- Local Variables should use an

l prefix: lName

- Parameters should use an

a prefix: aName

- Only loop Variables or very short-lived helpers variables may use short, lowercase names:

i, x.

Code Block Structure

If an if uses a begin/end block, any connected else clause must also use begin/end, and vice versa. In other words, an if clause with a block should not be mixed with a single-statement else clause, or vice versa.

Bad:

if Something then

DoThis

else begin

DoThat;

AndThisOtherThing;

end;

Better:

if Something then begin

DoThis

end

else begin

DoThat;

AndThisOtherThing;

end;

The else should always start on a new line, never follow the end or the first statement.

If an if, else, for, while or similar statement does not uses a begin/end block, the then or do keyword should always be followed by a linebreak and the following statement should be indented in a new line.

Better:

if Something then

exit;

Statements with a begin/end block, should never be nested inside statements where the begin/end block, was omitted.

Bad:

if Something then

if SomethingElse then

for a in list do begin

...

end;

Better:

if Something then begin

if SomethingElse then begin

for a in list do begin

...

end;

end;

end;

Spacing

Consistent spacing should be used throughout all code in a file.

Types spanning more than a single line should be preceded and succeeded by a single empty line, to separate them from their siblings. However, there should be no empty line between the type keyword and the first type that follows it.

Single-line types (e.g., aliases, short enums, etc) should be in a single block without spacing, and the types may be grouped logically together (e.g., several related enums) with an empty line in between them.

Inside a Class (or Record) declaration, members should be spaced consistently. Single-line members (such as Properties, Fields, Events, or Methods w/o inline implementation) may be grouped logically, with individual groups separated by a single line.

Methods, constructors, or Properties that span multiple lines (and/or include an inline implementation) should be separated from each other by a single empty line; even if they are the first, last, or only member in the current type or visibility block.

Groups of members (e.g., several methods relating to a single feature) may be visually separated with a single comment line "//" or three comment lines.

Namespace and Uses

In Oxygene, all Types (and Globals) are contained in a namespace. You can think of a namespace as a longer version of a name, that helps group related types together, and to avoid name ambiguity between types with the same name (but in different namespaces).

A type"s full name is its namespace, followed by a dot, followed by the type's short name. The namespace itself may contain additional dots, to indicated a nested hierarchy of namespaces:

MyCompany.AHelperClass

MyCompany.MyProject.SomeClass

Here, both "MyCompany" and "MyCompany.MyProject" are (separate and distinguished) namespaces. And "AHelperClass" and "SomeClass" are the short names of two classes defined therein.

It is common to use a company or individual's name as the root portion of namespaces for custom code, to (virtually) eliminate the chance of overlap with code written by other people. For example, all RemObjects code uses namespaces starting with "RemObjects."; that is followed by the name of the product, e.g. "RemObjects.Elements.", "RemObjects.DataAbstract.", and so forth.

Declaring A Namespace

In Oxygene every source file starts with the namespace keyword, followed by the name of the namespace that everything else contained in the file be put into (optionally, this name can be omitted, and type will be generated namespace-less. But this is very rarely done, and discouraged).

namespace MyCompany.MyProject;

...

end.

This declaration affects two things:

- By default, all types declared in the file will become part of this namespace.

- All types from this namespace — no matter where they are defined — will be "in scope" for the file.

What does this mean, exactly? Let's have a closer look.

1. By default, all types declared in the file will become part of this namespace. This means that if we define a type as follows:

namespace MyCompany.MyProject

type

MyClass = class

...

end;

then the MyClass class will automatically be considered part of the MyCompany.MyProject namespace. Its so-called *full name will this be "MyCompany.MyProject.MyClass". And this full name is how the class can be referred to everywhere.

2. All types from this namespace will be "in scope" for the file. This means that if we add a second (or more) files to our project and also begin it with namespace MyCompany.MyProject, these files will be part of the same namespace, and all types from that namespace will be in scope in all the files (no matter which file they were declared in). As such, we can refer to the above class simply as MyClass — because it is in the same namespace.

One can add as many files to a project as needed and they can all share the same namespace. In most (smallish) projects that only have a single namespace, there is no need to worry about adding namespaces to the uses clause (more on that below), just to access classes from within the project's code base. All types are automatically in scope in all source files (of the same namespace).

Of course, while it is common for small to medium projects to just use a single namespace, it is also common to declare different namespaces across a project in order to better partition parts of the project — for example one could have a MyCompany.MyProject namespace for the bulk of a project, but a MyCompany.MyProject.MySubSystem namespace for a certain sub-system of the project.

Regardless of namespace, all types declared in a project will be available all throughout the project (unless they are marked for unit level visibility only) by their full name, and all types marked as public will also be available outside of the project (i.e. when you are creating a library that will be referenced from other projects).

Code in the MyCompany.MyProject namespace could refer to a type from the sub-system simply by using it's full name:"

var x := new SomeClassFromTheMainNamespace(); // no need to specify the full name

var y := new MyCompany.MyProject.MySubSystem.SubSytemClass();

Uses Clauses

Of course, it would become cumbersome to always refer to types from other namespaces using their full name – especially if the same type, or many types from the sanem namespace(s) are used frequently through-out a source file.

By providing a uses clause with a list of namespaces at the top of the file, these additional namespaces can be brought "into scope" as well, so that anything declared in them can now also be accessed using the short name.

namespace MyCompany.MyProject;

uses

MyCompany.MyProject.MySubSystem;

...

var y := new SubSytemClass(); // MyCompany.MyProject.MySubSystem is now in scope!

A uses clause can list one or more namespaces (separated by comma). Alternatively where convenient, multiple uses clauses can be provided, each terminated by a semicolon. This is especially helpful when using {$IFDEF}s:

namespace MyCompany.MyProject;

uses

CoreFoundation,

Foundation;

{$IF MACOS}

uses

AppKit;

{$ENDIF}

...

Resolving Ambiguities

If more than one namespace in scope declare a type of the same (short) name, using that name will refer the one in the namespace that is most towards the end of the uses clause. Of course you can still use the full type name, to be sure, or to refer to one of the other types of the same name:

namespace MyCompany.MyProject;

uses

MyCompany.MyProject.MySubSystemA,

MyCompany.MyProject.MySubSystemB,

...

var y := new SubSytemClass(); // comes from MyCompany.MyProject.MySubSystemB

var z := new MyCompany.MyProject.MySubSystemA.SubSytemClass(); // explicitly use the type from MySubSystemB

WildCards

In addition to listing individual namespaces, the uses clause also allows the asterisk character as a wildcard, to include a namespace and all its sub-namespaces. For example, uses MyCompany.MyProject.* would add MyCompany.MyProject.SubSystemA and all the rest to the scope, including recursive sub-namespaces.

namespace MyCompany.MyProject;

uses

MyCompany.MyProject.*;

Standard Namespaces

Certain System namespaces will be in scope by default and do not manually need to be listed in the uses clause for their types to be accessible by their short name.

-

The RemObjects.Elements.System namespace contains compiler-intrinsic types, such as Integer, System Functions and other elements, and is always first in scope.

-

On .NET, the System namespace contains many core classes, such as String and Object, and is always second in scope.

-

On Java, the java.lang namespaces contain many core classes, such as String and Object, and are always second in scope.

-

On Cocoa, the rtl.* namespace contains the C runtime library, core types and many core C-based APIs and is always second in scope.

(On Island, all standard types are in the above-mentioned RemObjects.Elements.System)

Project-Wide Default Uses Clauses

In addition, you can specify a list of namespaces be in scope for all files via the "Default Uses Clause" Project Setting. Any namespaces in that list will be in scope before those listed in an individual source file's uses clause (in alphabetical order, with RemObjects.Elements.RTL, of present, last).

The unit Keyword

For backwards compatibility with Delphi, Oxygene allows the unit keyword to be used instead of namespace. Note that even when using that keyword, the following identifier still specifies the namespace for that file, and all the above discussion still applies.

Just as with namespace, the identifier does not have to match or correspond to the file name, and multiple (or even all) files in the project can (and typically will) use the same namespace.

See Also

Types

Types are the fundamental building blocks of the Oxygene language.

There are three broad categories of types:

-

Predefined Simple Types are small and atomic types that are built into the language to represent the simplest of data: numbers, booleans, strings, and the like.

-

Custom Types are types not defined by the language, but by yourself or provided by base libraries or frameworks. Where standard types are universal in application, custom types usually serve a specific purpose.

-

Modified Types are defined by the language itself, and extend or modify the behavior of a regular type, or form complex combinations, such as arrays, sequences, tuples or pointers of a given other type.

-

Anonymous Types are custom types (Classes or Records) that are instantiated on the fly without being explicitly declared or given a name.

Oxygene also has support for declaring types in special ways:

-

Generic Types are classes (or records and interfaces) where one or more of the other types that the class interacts with (for example to receive or return as a parameter from methods) is not well-defined, but kept generic. This allows for the class to be implemented in a fashion that it can work with or contain different type parameters. Only when a generic type is used, a concrete type is specified.

-

Partial Types are regular types that are declared and implemented across multiple source files – commonly to keep a large class easier to maintain, or because one part of the class is generated by a tool.

-

Mapped Types allow you to provide the definition of a type that will not exist at runtime, but merely map to a different, already existing type.

-

Type Extensions can expand an existing type with new methods or properties (but not new data), even if that type was originally declared in an external library.

-

Type Aliases can give a new name to an existing type.

Type Declarations

Custom Types and Aliases to existing types can be declared in the interface or implementation section of any source file, after a type keyword.

Each type declaration starts with the type's name, followed by an equal sign (=) and followed by optional Type Modifiers, which can also include a Visibility Lebel, followed by the details of the type declaration as specified in the individual custom type topics referenced above.

type

MyClass = sealed class

end;

MyInteger = public type Integer;

In the above example, MyClass ands MyInteger are the type names. They are followed by the equal sign, and the sealed and public type modifiers, respectively. Finally class ... end and Integer are the type declaration itself (a Class declaration and an Alias, in this case).

Type References

While Type Declarations, covered above, introduce a new type name, the most common interaction with types is to reference existing ones.

Most types, including Simple Types and Custom Types, are referenced using simply their name – either their short name, or their fully qualified name including the Namespace.

var x: SomeClass; // Variable x is declared referencing the SomeClass type by name.

By contrast, Modified Types are referenced using a particular syntax specific to the type, such as the array of, sequence of or nullable keywords, often combined with a type name.

var x: array of SomeRecord; // Variable x is as an *array* of the type referred to by name.

var y: nullable Integer; // Variable x is as a *nullable* version of the name's simple type.

On the Cocoa platform, type references can be prefixed with Storage Modifiers to determine how they interact with ARC.

On the Island-based platform, type references can be prefixed with a Life-Time Strategy Modifier to determine how their lifetime is managed (although doing so explicitly is rarely needed). Storage Modifiers, as discussed above, are also supported when working with Cocoa or Swift objects Cocoa project.

More on Type Names

Every named type (Simple Types and Custom Types) in Oxygene can be referred to either by its short name (e.g. MyClass), or what is called a fully qualified name that includes a full namespace (e.g. MyCompany.MyProject.MyClass).

When declaring types with a simple name, the type will automatically be placed within the namespace that is declared at the top of the file alongside the namespace keyword. Alternatively, a fully qualified name can be provided in the declaration to override the namespace.

namespace MyCompany.MyProject

interface

type

MyClass = class // full name will be MyCompany.MyProject.MyClass

end;

MyCompany.OtherProject.OtherClass = class // full name will be MyCompany.OtherProject.OtherClass

end;

You can read more about this in the Namespaces topic.

See Also

Predefined Simple Types

Like most programming languages, Oxygene defines a set of basic types that make up the core language, cover simple and atomic data types (such as numbers and characters) and form the base for more advanced types you define yourself or link in from libraries.

Integers

Oxygene provides integer types in 4 sizes, from 8-bit to 64-bit, both signed and unsigned. Refer to the Integers topic in the API Reference for more details.

Floating Points

Two floating point types provide storage of fractional values, with single and double precision. The Floats section in the API Reference covers these in more depth.

Boolean

A boolean is a simple value type that can hold one of two values – true or false. See Boolean for details.

Strings and Characters

Individual characters can be stored in the Char type, while the String class provides support for strings of characters. Both hold 16-bit UTF-16 entities.

Object

Object is the implied root class of all Class Types defined in Oxygene and the other Elements languages. On .NET and Java (and in a limited degree on Cocoa and Island), it is also the root of all other types, through a mechanism called Boxing). Visit the Object topic for more details.

Dynamic

The dynamic type can be used to work with objects whose API is not known at compile time. It can be used as a placeholder type for a field, variable or parameter that can hold any type, at runtime. Different than an Object reference, which needs to be cast to a more concrete type for useful work, a dynamic reference allows any imaginable calls to be made on it, without enforcing any compile times checks to see whether it is valid. The compiler will generate code that tries to dispatch the call dynamically, failing at runtime if a call is invalid.

Custom Types

Custom Types are types not defined by the language, but by yourself or provided by base libraries or frameworks. Where standard types are universal in application, custom types usually serve a specific purpose.

Classes

A class is a data structure that may contain data members (Constants, Fields and Properties, as well as actions that work with that data (Methods).

Classes take part in a hierarchy of types and can descend from each other to form that hierarchy (more on that below). An instance of a class is commonly referred to as an "object".

A class type is declared using the class keyword, followed by zero or more member declarations, and closed off with the end keyword. Optionally, a base class and/or a list of one or more interfaces implemented by the class can be provided in parenthesis behind the class keyword:

type

MyClass = public class(Object, IMyInterface)

private

fName: String;

fValue: Integer;

public

property Name: String read fName;

property Value: Integer read fValue;

end;

Like all custom types, classes can be nested in other types with the nested in syntax.

Members

Classes can contain Type Members, including Fields, Properties and even Methods. Also like in classes, the members can be grouped in Visibility Sections.

Invariants

Classes can define Invariants to help ensure a consistent state. Invariants are boolean expressions that will automatically be enforced by the compiler every time a method or property accessor finishes.

Nested Types

Classes can also define Nested Types. Nested types are like regular custom types, except they are considered part of the class and their visibility can be scoped as granular as all class Members.

Nested types are declared using the nested in syntax, and (outside of the containing class) are referred to in the same way as static class members would – prefixed with the name of the class, and dot.

Refer to the Nested Types topic for more details.

Polymorphism

As hinted above, classes are part of a class hierarchy, where each class (except the root) has an ancestor class that it descends from and extends. You can think of this hierarchy as a tree, with a common root (Object).

Classes can be treated polymorphically. That means an object (a concrete instance of a class) can be treated the same as any any of its base classes. This allows code to be written that can work with a base class (or even Object itself), and it can be applied to any descendant of the same class, as well.

Individual members of a class can be virtual, which means that descendant classes can override their implementation to provide more specific behavior. When code working with a common base class accesses virtual members, at runtime execution is automatically passed to the implementation for the concrete instance.

For example, code could be written for a list of Person classes, which, at runtime, includes various concrete subclasses or persons, such as Employee, Manager, FamilyMember or the like. The code has access to all (visible) members declared on Person, but might end up transparently calling more specific implementations of these members provided by Employee, Manager or FamilyMember.

Please refer to the Polymorphism topic in the Concepts section, for details.

Abstract Classes

A class can be marked with the abstract Modifier, to indicate that it is an abstract base class. Abstract classes cannot be instantiated, and they may (but don't have to) contain abstract Members – that is, members that have been defined on the class, but not implemented.

Descendants from an abstract classes class can become non-abstract, if they provide overriden implementations for all abstract members.

Consider a class hierarchy of vehicles, where the base class Vehicle can represent any kind of vehicle, but not a concrete type. It might provide an abstract Drive method, but no implementation (since there is no one way to drive "any vehicle"). It makes no sense to create an instance of a Vehicle. Concrete subclasses such as Car, Train and Bike could provide implementations for the Drive method.

Yet, even though Vehicle is abstract, code can be written that knows how to call Drive on any vehicle.

Again, please refer to the Polymorphism topic in the Concepts section, for details.

Sealed Classes

A class can be marked with the sealed Modifier, to prevent further subclassing.

Extension Classes

Extension Classes can add properties, methods, operators and events to an existing type (but not add anything that requires storage, like fields, events or stored properties). These become available to callers if this type is in scope for the caller. The first type in the ancestor defines which type gets extended; optional interfaces can be used to add/implement as interfaces allowing the type to be compatible with that interface.

Read more about Extension Classes here.

Type Visibility

The visibility of a class type can be controlled by applying a Visibility Modifier on the declaration. The default visibility is assembly.

Other Modifiers

A number of other Type Modifiers can be applied to classes:

abstract Forces the class to be abstract; see above.extension Makes this an extension class; see above and Extensions.mapped Makes this a mapped class; see Mapped Types.partial Partial can be used to spread a type over several files in the same project. All parts must have this modifier then.readonly Makes this class readonly. All fields in it will be readonly and can only be set by a constructor and not modified afterwards.static Static classes are classes with only static members. The class modifier is implied on all members.

See Also

Records

Records (also called "Structures" or "Structs", in many other programming languages) are a lot like Classes except for two crucial differences:

- Records are value types, and stored on the stack, while classes are reference types, and stored in global memory.

- While they can have an ancestry hierarchy (i.e. a record type can decent from and extend another record), they do not support polymorphism, e.g., overriding virtual methods.

When using a record type, the value is stored on the stack (or when defined inside a different type, it is stored inline within the memory space of that type). Assigning a record from one variable to another creates a copy of the record, and making changes to on copy does not affect the other. For this reason, records are usually used to hold a small number of related values.

See Value Types vs. Reference Types) for more on stack- vs heap-baswed types.

A record type is declared using the record keyword, followed by zero or more member declaratins, and closed off with the end keyword. An optional ancestor and/or a list of one of more interfaces to implement can be provided in parenthesis behind the record keyword:

type

Color = public record(IColor)

public

R, G, B, A: Byte;

end;

Like all custom types, records can be nested in other types with nested in syntax.

Note: Oxygene records are not to be confused with the record types introduced in C# 9 and Mercury, which add special logic to class (or struct). Oxygene records are the equivalent of a simple struct in C# or Swift, or a Structure in Mercury.

Members

Like Classes, records can contain Type Members, including Fields, Properties and even Methods. Also like in classes, the members can be grouped in Visibility Sections

Packed Records

By default in-memory layout of a record's individual fields is optimized for fast access first, and memory efficiency second. This means that additional padding might be added to make sure Integers and pointers align at 4 or 8-byte boundaries, and the in-memory order of fields might be rearranged, as well.

When a record is marked as packed with the packed directive (or the cross-language Packed Aspect), this will not happen, and the records memory layout will be exactly as specified.

Use packed records when the memory layout matters – for example when reading binary data from disk or the network and accessing it as a record, or when sharing records in-memory with code compiled from non-Elements languages, such as C ort Delphi.

Invariants

Records can define Invariants to help ensure a consistent state. Invariants are boolean expressions that will automatically be enforced by the compiler.

Note that invariants can only be effective for non-public fields, as access to public fields would bypass them. This makes invariants less useful for most typical records rthan they are for Classes.

Nested Types

Records can also define Nested Types. Nested types are like regular custom types, except they are considered part of the record and their visibility can be scoped as granular as all class Members.

Refer to the Nested Types topic for more details.

Extension Records

Like Extension Classes, Extension Records can add properties, methods, operators and events to an existing type (but not add anything that requires storage, like fields, events or stored properties). These become available to callers if this type is in scope for the caller. The first type in the ancestor defines which type gets extended; optional interfaces can be used to add/implement as interfaces allowing the type to be compatible with that interface.

Read more about Extension Records here.

Type Visibility

The visibility of a record type can be controlled by applying a Visibility Modifier on the declaration. The default visibility is assembly.

Other Modifiers

A number of other Type Modifiers can be applied to records:

extension Makes this an extension record; see Extensions.mapped Makes this a mapped record; see Mapped Types.partial Partial can be used to spread a type over several files in the same project. All parts must have this modifier then.readonly Makes this record readonly. All fields in it will be readonly and can only be set by a constructor and not modified afterwards.static Static records are records with only static members. packed Packed records do not align their members by round offsets. Ignored on .NET and Java

See Also

Interfaces

Interfaces provide an abstract definition of one or more type members (Methods, Properties or Events) that other types may opt to implement. You can think of them as type contract that a type promises to provide functionality for.

Many types can implement the same interface, regardless of whether they share a common ancestry in their inheritance hierarchy, and all types implementing the interface can then be interacted with by the same code, without the code having to be aware of the actual concrete types.

Any type opting to implement an interface must provide an implementation for all members of the interface (except for those declared optional, a feature available only on Cocoa objects). All interface members are implied to be public, and no visibility sections are allowed (with the exception of Private Interface Members, discussed below).

By convention, and to provide distinction from concrete types, interface names start with an uppercase I followed by a PascalCased name. But this is not a rule that is compiler-enforced. On Java, system-provided interfaces do not follow this convention.

An interface type is declared using the interface keyword, followed by zero or more member declarations, and closed off with the end keyword. Optionally, one or more base interfaces can be provided in parenthesis behind the interface keyword:

type

IMyInterface = public interface

method DoSomething;

end;

MyClass = public class(IMyInterface)

public

method DoSomething; ///

end;

var x: IMyInterface;

x.DoSomething(; // we don't know the actual type of x, only that it implements IMyInterface

Interfaces Members are limited to

Like all custom types, interfaces can be nested in other types with nested in syntax.

Default Implementations

Interfaces can optionally choose to provide a default implementation for some of the methods they define. If a default implementation is provided, types implementing the interface may choose not to provide an implementation themselves, and will in that case "inherit" the default implementation.

This is often helpful for interface methods that would be similar for most implementations. Consider the ILogger interface example below. Most concrete implementations would only need to implement the first method to emit the log string to various mediums. The second method is handy to have for callers of the interface, but it would be cumbersome having to re-implement it for each logger.

type

ILogger = public soft interface

method Log(aInfo: String);

method Log(aFormat: String; params aParameters: array of Object);

begin

Log(String.Format(aFormat, aParameters));

end;

end;

Private Interface Members

As part of default implementations, interfaces can also define private helper members. These members must provide an implementation; they do not become part of the official interface contract, and are only available from other methods implemented in the same interface. Consider:

type

ILogger = public soft interface

public

method Log(aInfo: String);

method Log(aFormat: String; params aParameters: array of Object);

begin

Log(CustomFormat(aFormat, aParameters));

end;

private

method CustomFormat(aFormat: String; params aParameters: array of Object): String;

begin

...

end;

end;

Optional Members (Cocoa)

On the Cocoa platform, interface members can be marked as optional with the optional keyword directive. Optional members do not have to (but may) be implemented by classes conforming to the interface. Of course, code calling into such optional members must take care to ensure they are implemented by the concrete instance, at runtime – usually by calling the respondsToSelector() method on Cocoa's base Object.

type

IFoo = public interface

method One;

method Two; optional;

end;

Soft Interfaces

Interfaces can be marked as soft and suitable for automatic Duck Typing, using the soft modifier keyword. You can read more about Soft Interfaces, in all Elements languages, here.

type

ISoftDuck = public soft interface

method Quack;

end;

Combined Interfaces

A Type Alias can combine two or more interfaces into a new interface type that combines both:

type

ICodable = IEncodable and IDecodable;

See the Combined Interfaces topic for more details

Type Visibility

The visibility of an interface type can be controlled by applying a Visibility Modifier on the declaration. The default visibility is assembly.

Other Modifiers

Only one Type Modifier can be applied to interfaces:

soft marks the interface as soft (see above)

See Also

Enums

An enum type, short for enumeration, is a type that represents a number of distinct named values, each of which can optionally be represented by a specific numerical value. An instance of an enum represents a single of the enum's values.

Flags are a special sub-type of enums where each value is represented by a separate bit in the underlying storage, allowing for a flag instance to represent a single value or a combination of two or more values, using bitwise or.

An enum type is declared with the enum or flag keyword, followed by one or more value names in parentheses:

type

Color = public enum(

Red, // implied 0

Green, // implied 1

Blue // implied 2

);

Number = public enum

(

One = 1,

Two = 2,

Three = 3

) of Word;

State = public flags

(

IsVisible, // implied 1

IsSelected, // implied 2

IsHovered // implied 4

);

Each enum value can be assigned to a specific numerical representation. If no numerical representation is provided, the compiler will automatically assign consecutive numbers, starting at 0 and counting up in increments of 1 for enum types, and starting at 1 and shifting the bit to the left for flags types.

If a numerical representation is provided for some values but not all, the compiler will use the fixed values where provided, and increment (enums) or look for the next free higher bit (flags from there for subsequent values without explicit number).

By default, enums are stored at 32-bit numbers compatible with a UInt32 type. Optionally, a different integer storage type can be provided at the end of the declaration, using the of keyword. This can be used to size the enum smaller (Byte or Word) or larger (Int64).

Type Visibility

The visibility of an enum type can be controlled by applying a Visibility Modifier on the declaration. The default visibility is assembly.

Other Modifiers

Other modifiers do not apply to enums.

Blocks

A block type, also referred to as a delegate type on .NET, defines a method pointer that can be used to hold a reference to a Method or an Anonymous Method. Blocks are a safe way to dynamically invoke methods with a specific signature on unrelated classes.

Different from regular Function Pointers on native platforms (more on those below), block instance captures not only the implementation of the method in question, but also the Self pointer of the object instance that contains the method. This allows calls to blocks to execute in the context of a specific object instance, and access that object's members.

A block type is declared using the block keyword, followed by a regular Method signature. This signature can include parameters and a result type.

type

MyBlock1 = public block(aParameter: Integer);

MyBlock2 = public block: String;

MyBlock3 = public block;

In addition to block, the delegate keyword can also be used to declare a block.

The two keywords are interchangeable, and delegate is mainly supported for backwards compatibility. We discourage the use of delegate, because, while commonly used on .NET, the term can be confusing with what the terminology "delegate" refers to many other platforms, especially Cocoa. We recommend to use the block keyword, exclusively.

Creating Block References

A block reference can be created by simply assigning a Method, Anonymous Method or Lambda Expression to a block variable.

To avoid ambiguity (e.g. as to whether to call the method and use its result, or assign the method itself), the method reference can be prefixed with the Address Of (@) Operator. But note that this is seldomly required, except in cases of ambiguity (e.g. if the method itself returns a compatible block), ot to levarage type inference.

var x: MyBlock1 := @MyClass.MyMethod; // use a method as block

var y: MyBlock2 := () -> "Hello"; // use a lambda as block

var z := method begin // use an anonymous method

DoSomething;

end;

Invoking a Block

Invoking a block reference is as easy as calling into the block as if it were a regular local method:

x(15);

var s: String := y();

z();

Note that different than in regular Method Calls, parenthesis are required to call the block. This is to avoid ambiguity between calling or referencing the block, e.g.:

var a := y; // assigns the same block as `y` to `a`

var b := y(); // calls `y` and assigns the result to `a`.

Function Pointers

Function Pointers are a type similar to blocks, but more limited. They can be declared using the same syntax, but with the method keyword instead of block.

Different than blocks, function pointers do not capture a Self. As such, they can only refer to simple (global) functions, not to class methods. Anonymous methods or lambdas can be used as function pointers, as long as they do not access the surrounding scope or self.

Inline Block Types

Inline block types are blocks defined in a type reference, they use the same syntax as regular blocks, but without a name. On .NET and Java, these blocks must map to system-predefined generic delegates (System.Action*, and System.Func* on .NET and types in the Cooper Base Library for Java). Cocoa and Island support arbitrary inline blocks natively.

method DoSomething(aCallback: block(aStatus: String));

begin

//...

aCallBack("Done.");

end;

Type Visibility

The visibility of a block type can be controlled by applying a Visibility Modifier on the declaration. The default visibility is assembly.

Other Modifiers

Other modifiers do not apply to blocks.

Modified Types

Modified Types extend or modify the behavior of a regular type, to form more complex combinations, such as arrays, sequences, tuples or pointers of a given other type.

For example, value types can normally not be nil, but the nullable version of that same type can. And an array can broaden what usually is a single copy of a given type into a group of several items of the same type that can be worked on in bulk.

Modified Types are defined by the Oxygene language itself, and are usually not referred to by name (although one can of course define an Alias to a specific modified type), but with a special language syntax.

Array Types

An array is a constant sized list of elements. An array type is expressed with the ``array of` keyword followed by the name of any valid Type; an optional size can be provided in square brackets. The lower bound of an array does not have to be 0.

var x: array of Integer; // an array of undetermined (as of yet) size

var y: array [0..9] of Integer; // an array fixed to 10 elements

var z: array [5..10] of Integer; // an array fixed to 6 elements, with a lower bound of 5.

Arrays can be made multidimensional, by providing more than one set of bounds.

var x: array[0..9, 0..9] of Integer; // 10x10 = 100 integers

var y: array[0.., 0..] of Integer; // an undetermined (but rectangular) number of integers

var z: array of array of Integer; // an undetermined (loose) number of integers

Static Arrays

It is worth noting that arrays with fixed specified bounds are automatically allocated by the compiler, on the stack as value types, and can be immediately used. These are referred to as Static Arrays.

var x: array[0..9] of Integer; // these 10 integers now exist as space on the stack

x[3] := 42; // so we can just set the one at index 3 to "42"

The same holds true for multi-dimensional arrays with fixed bounds. These are allocated as a monolithic block of memory, so essentially array [0..99] of Integer and array [0..9, 0..9] of Integer have the same memory representation. Merely the semantics of how the 100 individual items get accessed differs.

var y: array[0..9, 0..9] of Integer; // these 100 integers too exist as space on the stack

x[3,8] := 42; // so we can just set the one at index 3 to "42"

Dynamic Arrays

Arrays without bounds (or with open bounds) are un-initialized reference types, and set to nil by default (because, after all, their actual size is not known from the declaration). These are referred to as Dynamic Arrays.

To use, fill these arrays with values, a copy needs to be instantiated using the new operator:

var x: array of Integers; // unknown number of Integers, for now

x := new Integer[20]; // so we need to allocate 20 of them in memory (indexed 0..19)

x[3] := 42; // *now* we can set the one at index 3 to "42"

var x: array [0.., 0..] of Integers; // unknown number of Integers, for now

x := new Integer[20,20]; // so we need to allocate 400 of them in memory (indexed 0..19/0..19)

x[3,8] := 42; // *now* we can set the one at index 3 to "42"

For loose multi-dimensional dynamic arrays, each level would need to be instantiated manually (since each level can, in theory, contain a different-sized sub-array):

var x: array [0.., 0..] of Integers; // unknown number of Integers, for now

x := new array of Integer[50]; // we allocate 50 arrays for dimension one (indexed 0..49)

for i: Integer := 0 to 49 do

x[i] = new Integer[20]; // and for each, we allocate 20 integers — for a total of 50*20 = 1000

x[3,8] := 15; // now we can set values

Dynamic arrays are always reference types.

Inline Arrays

Inline arrays are concept specific to arrays on the .NET platform. The inline Type Modifier ensures that memory for the arrays is allocated with the stack space or the memory space of the containing Class or Record.

type

MyRecord = public record

public

Chars: inline array[0..255] of Byte;

end;

The above record's size would be 256 bytes, unlike a record with a regular array, which would be stored outside of the record. Inline arrays are specially useful when working with P/Invoke to call native code.

Inline arrays are considered "unsafe". In order to use them, the "Allow Unsafe Code" Compiler Option must be enabled, and any Methods that deal with them must be marked with the unsafe Member Modifier.

See Also

Sequence Types

Sequences are a special type in the language and can be thought of as a collection of elements, similar to an array.

In contrast to arrays, sequences do not imply a specific form of data storage, but can represent any collection of elements that is accessible in a specific order. This could be an array (and as a matter of fact, all arrays can be treated as a sequence) or a different data store, such as a linked list, a binary tree or a custom collection implementation.

Sequences can also represent non-static data that is retrieved or generated on the fly when the sequence is enumerated. For example, you could implement a sequence that calculates all digits of Pi, or retrieves RSS headlines downloaded from a server.

As a result, sequences are not a concrete type that can be instantiated – one cannot "new up a new sequence", because it would be undefined where its data would come from. Instead, sequences are used as base types to consume data where the exact storage is unknown.

For example, a method could be written that prints out a sequence of Integer to the console. That method could then be invoked with any number of different types that adhere to the sequence protocol, no matter where those Integers come from. By contrast, if the method were declared to take an Array or a generic List<Integer>, it would be much more restrictive.

Using Sequences

Sequence types are expressed with the sequence of keyword followed by a type name.

var SomeIntegers: sequence of Integer;

The most common operation on a sequence is to iterate it using a for each Loop loop, a variant of for loop that executes a statement (or block of statements) once for each item in the sequence:

for each i in SomeIntegers do

writeLn(i);

It is also common to apply LINQ operations in form of a from Expression to perform operations on a sequence, such as filtering or sorting.

var FewerSortedIntegers := from i in SomeIntegers where i ≥ 20 order by i;

for each i in FewerSortedIntegers do

writeLn(i);

In the above example, the sequence of Integers will be filtered to include only those values larger or equal to 20, and then sorted numerically.

Compatible Types

In addition to Array, most collection types on the various platforms, including the List<Integer> type in Elements RTL are compatible with sequences. Sequences can easily be implemented using Iterators or for Loop Expressions.

Parallel Sequences

Available only on .NET, parallel sequences are a special type of sequence that can be iterated in parallel in a multi-threaded environment with .NET's parallelism APIs. It maps to the ParallelQuery<T> system type.

var data: parallel sequence of Integer;

data.ForEach(a -> DoSomethingParallelWith(a));

Queryable Sequences

Available only on .NET only, queryable sequences are a special type of sequence where LINQ expressions applied to the sequence will be compiled to executable code, but converted into meta data that can be used to perform operations on the sequence at runtime.

For example, using LINQ to SQL or DA LINQ, a from expression on a sequence of database objects can be translated into SQL code that could perform the operations on the back-end database. Rather than fetching an entire data table and then filtering it locally, the filtering can be done by the database engine.

var AllUsers := rda.GetDataTable<Users>;

var NewUsers := from u in AllUsers where u.DateSignedUp > DateTime.Now.Add(-1);

for each u in NewUsers do

writeLn(u.Name);

In this case, the "where" clause would get translated to SQL to only fetch the most recent users from the database instead of all of them – potentially saving a lot of network bandwidth and memory.

A queryable sequence maps to the IQueryable<T> system type.

Asynchronous Sequences

Also available only on .NET only, asynchronous sequences (called asynchronous streams in C# parlance) are sequences that can be iterated asynchronously using the a await for each Loop Statement.

var lItems: async sequence of String := ...;

await for each i in lItems do

writeLn(el);

writeLn("Done");

Asynchronous Sequences are expressed by the async sequence keyword, and map to the IAsyncSequence<T> platform type. They can easily be created by implementing an Iterator with an async sequence of X result type.

See Also

Set Types

A set is a collection of ordinal values of the same Integer or Enum type. The set type defines the range of potential values; for each instance of the set, any number of individual items (from none to all of them) can be contained in the set. Each value can only be contained once.

A set type is expressed using the set of keywords, followed by a range of values.

type

DayOfTheWeek = public enum (Mon, Tue, Wed, Thu, Fri, Sat, Sun);

var Weekend: set of DayOfTheWeek := [DayOfTheWeek.Sat, DayOfTheWeek.Subn];

if TodaysDay in Weekend then

PartyTime();

Sets can be comprised of Enum values (as shown above), or Integer values:

type

DaysOfTheMonth: set of 1..31;

var FirstFewPrimeNumbers: set of Integer := [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31];

Sets are limited to 64 individual possible values, ensuring they can be stored in an UInt64 value. Sets containing 32 or less items are stored in an UInt32.

Operators

The following Operators are supported on sets:

| Operator |

Meaning |

+ |

Union of two sets: [a,b,e] + [c,e,f] = [a,b,c,e,f] |

- |

Difference of two sets: [a,b,c,d] - [a,c] = [b,d] |

* |

Intersection: [a,b,e] * [c,e,f] = [e] |

= |

Exact equal; only true if all elements are the same in both |

≠ |

Not equal |

< |

Subset, true if the right side has all elements the left set has, and more |

> |

Superset, true if the left side has all elements the right set has, and more |

≤ |

Subset, true if equal or if the right side has all elements the left set has |

≥ |

Superset, true if equal or if the left side has all elements the right set has |

in |

Check if an enum or integer is in a set: a in [a, b, c] |

not in |

returns not (a in b). |

Note that Oxygene's language level sets are not to be confused with Swift's higher-level Set<T> struct.

See Also

Tuple Types

A tuple is a well-defined group of values of specific types that can be handled together as a single grouped value, and also be taken apart into their individual values easily. Tuples provide a more lightweight way to group related values without the need of declaring, for example, an explicit Record type.

A tuple type is expressed with the tuple of keywords, followed by a list of two or more types (since a tuple of just one value makes very little sense).

method ExtractValues(s: String): tuple of (String, Integer);

The method declared above would return a tuple consisting of a String and an Integer.

A tuple value can be constructed simply by providing a matching set of values surrounded by parentheses. The following result assignment would work for the above method.

result := ("A String", 5);

Tuple values can be assigned in whole or as their individual parts, both when assigning from a tuple or to one:

var t: tuple of (String, Int);

var s: String := "Hello"

var i: Integer := 5;

t := (s, i); // assigning individual values to a tuple

var u := t; // assigning one tuple to another

(s, i) := ExtractValues("Test"); // assigning a tuple back to individual elements

Extracting a tuple back to individual items can even be combined with a var Statement, to declare new variables for the items:

var t := ExtractValues("Test");

var (a, b) := ExtractValues("Test"); // assigning a tuple back to individual elements

Here, three new variables are declared. For the first call, t is declared as new tuple variable, so far so unusual. For the second call though, two new variables a and b are declared, and the tuple is automatically taken apart, so that a would hold the String value and b the Integer.

Tuples and Discardable

Tuple extraction can also be combined with a [Discardable] Expression(../Expressions/Discardable). If only some of the values of a tuple are of interest, the nil keyword can be provided in place of the items that are not of interest, and the will be discarded.

var (FirstName, nil, Age) := GetFirstNameLastNameAndAge();

Here, assuming that GetFirstNameLastNameAndAge returns a tuple of three values of information about a person, but only two variables are declared, for the FirstName and Age, the middle value of the tuple is simply discarded.

Accessing Individual Tuple Items

Instead of extracting the whole tuple, individual values inside a tuple can also be accessed directly, with the Indexer Expression:

var Info := GetFirstNameLastNameAndAge();

writeLn($"{Info[0]} is {Info[2]" years old".)

While in syntax this access looks like an array access, the access to to each item of the tuple is strongly typed, so Info[0] is treated as a String, and Info[2] as an Integer, for this example. For this reason, a tuple can only be indexed with a constant index.

Named Tuples

Tuples can optionally be defined to provide names for their values. Either all or none of the values need to have a name, a tuple cannot be "partially named". A named tuple can be initialized with a tuple literal with or without names.

var Person: tuple of (Name: String, Age: Integer);

Person := (Name := "Peter", Age := 25);

Person := ("Paul", 37);

In a named tuple, individual items can be accessed both via index as outlined above, and via name:

writeLn($"{Person.Name} is {Person[1]" years old".)

Named and unnamed tuples (and tuples with mismatched names) are assignment compatible, as long as the types of the tuple items matches.

var Person: tuple of (Name: String, Age: Integer);

var Person2: tuple of (String, Integer);

Person := Person2;

Person2 := Person;

See Also

Future Types

A future is a strongly typed variable that represents a value that might or might not have been calculated yet, but is promised to be (made) available when needed.

A future is expressed by the future keyword, followed by a type:

var count: future Integer;

Future values can be used interchangeably with their underlying type, including as parameters to Method Calls or even in arithmetic or logical Expressions. The first time the value of a future is accessed, execution will wait for the future's value to become available.

The futureAssigned() System Function can be used to check if a future itself is assigned or not (i.e. is nil). Note that a future can be assigned, but still have a determined value of nil, of course.

Synchronous and Asynchronous Futures

Future types can be synchronous or asynchronous. By default, futures are synchronous, and will be evaluated the first time they are used.

When futures are used in combination with an Async Expression, they become asynchronous, and will execute to determine their value on a background thread. When an asynchronous future is first accessed, its value might or might not have been determined yet. If it has not, one of two things can happen:

- If execution of the future has already started, access will block the current thread and wait for that execution to finish and the value to become available.

- If execution of the future has not started yet, it will be executed inline within the current thread.

Both of these scenarios happen transparently to the calling code.

Futures and Exceptions

Any exception that occurs while calculating the future will be caught, cached, and re-thrown whenever the future's value is accessed.

Example

Consider the following snippet of pseudo code that calculates the Sum of values in a binary tree:

method ThreeNode.Sum: Integer;

begin

var l := Left.Sum;

var r := Right.Sum;

result := l+r;

end;

This code first calculates the Sum of the left part of the subtree, then that of the right one. Finally, the two values are combined using the + operator. Calculating the value of both l and r might take a relatively long time, yet the value of l is not actually needed until the very last line of the method. This is an ideal candidate for a future:

method ThreeNode.Sum: Integer;

begin

var l: future Integer := async Left.Sum;

var r: Integer := Right.Sum;

result := l+r;

end;

Instead of calculating the value of l in place as before, l is now defined as a future Integer, declaring that the variable does not actually hold the value of Left.Sum, but just the promise that, at some point in the future, it will. This first line of code will execute in virtually no time, and the method can move on to calculating r, which is unchanged and will happen inline, as before.

Note how the value assigned to l has been changed to include the async keyword, making it an async expression that will be spawned in the background. In fact, it's this use of the async keyword that makes the future useful in this case.

The result of an async expression is always a future, so the code would behave the same without explicit type declarations:

method ThreeNode.Sum: Integer;

begin

var l := async Left.Sum; // l will become a future Integer

var r := Right.Sum; // r is still a regular Integer

result := l+r;

end;

The actual value of the future, l in this example, will not be accessed until it is used in code. In the code above, this happens on the last line of the method, when l is used with the + operator to combine with with r.

When the value is accessed, one of three things can happen:

- If the future is already done executing in the background, its value will be available immediately, just as if it were a plain non-future type that is being accessed.

- If the future is not finished executing at that point, execution will hold and block the current thread until the future is done.

- If any exception occurred while executing the future in the background, that exception will be re-thrown as the future value is accessed.

Note how in the example above, the code does not need to worry about whether the value of the future l has already been determined or not when execution reaches the last line and the value is required. The code can simply treat l as if it were a regular Integer.

Type-Less Futures

Futures can also be type-less, which is also referred to as Void Futures. Such a type-less future does not represent a value, but merely a certain action that will be run in the background.

A type-less future can be called, like a statement, in order to wait for its action to be finished. But because a type-less future has no value, it cannot be used as an expression, only as a statement.

var f := async begin // goes off and does a couple of things in the background

DoSomething();

DoSomethingElse();

end;

DoSomeMore(); // meanwhile, do more work on the current thread

f(); // wait for the type-less future to finish, if it hasn't already.

var x := f; // Compiler error: f has no value.

As with typed futures, if any exception occurred in the background while executing the future, that exception will be re-thrown if and when the future is being called into.

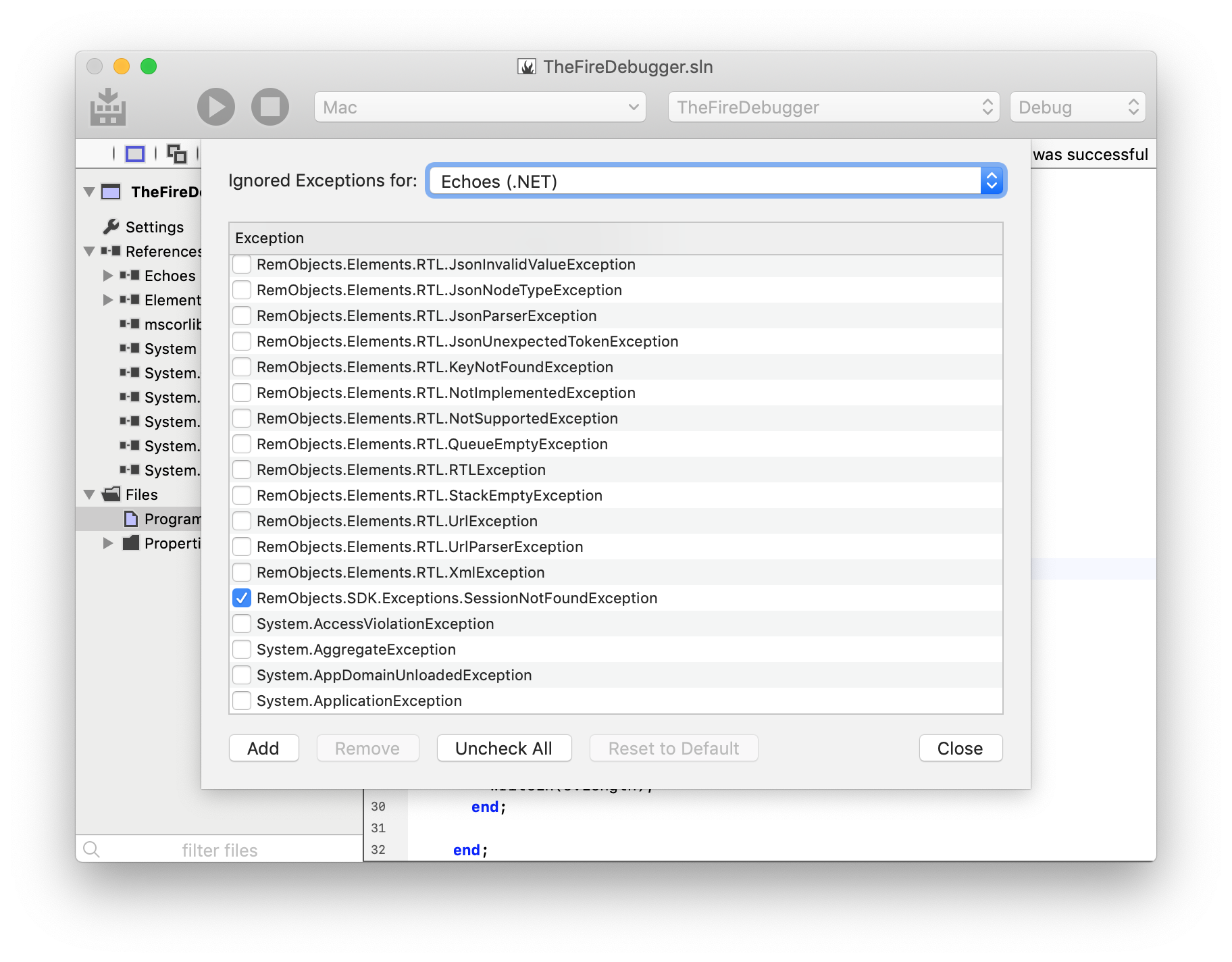

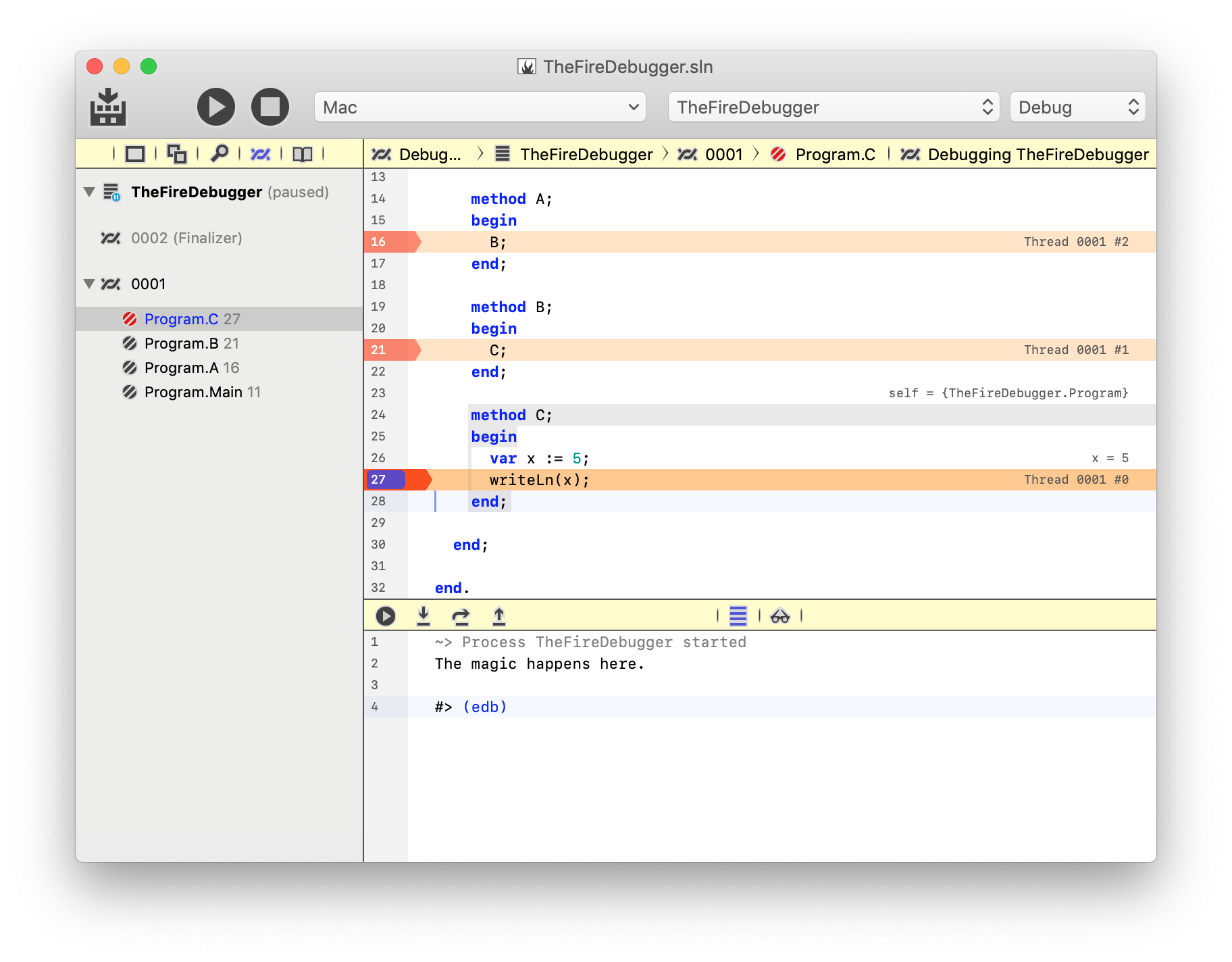

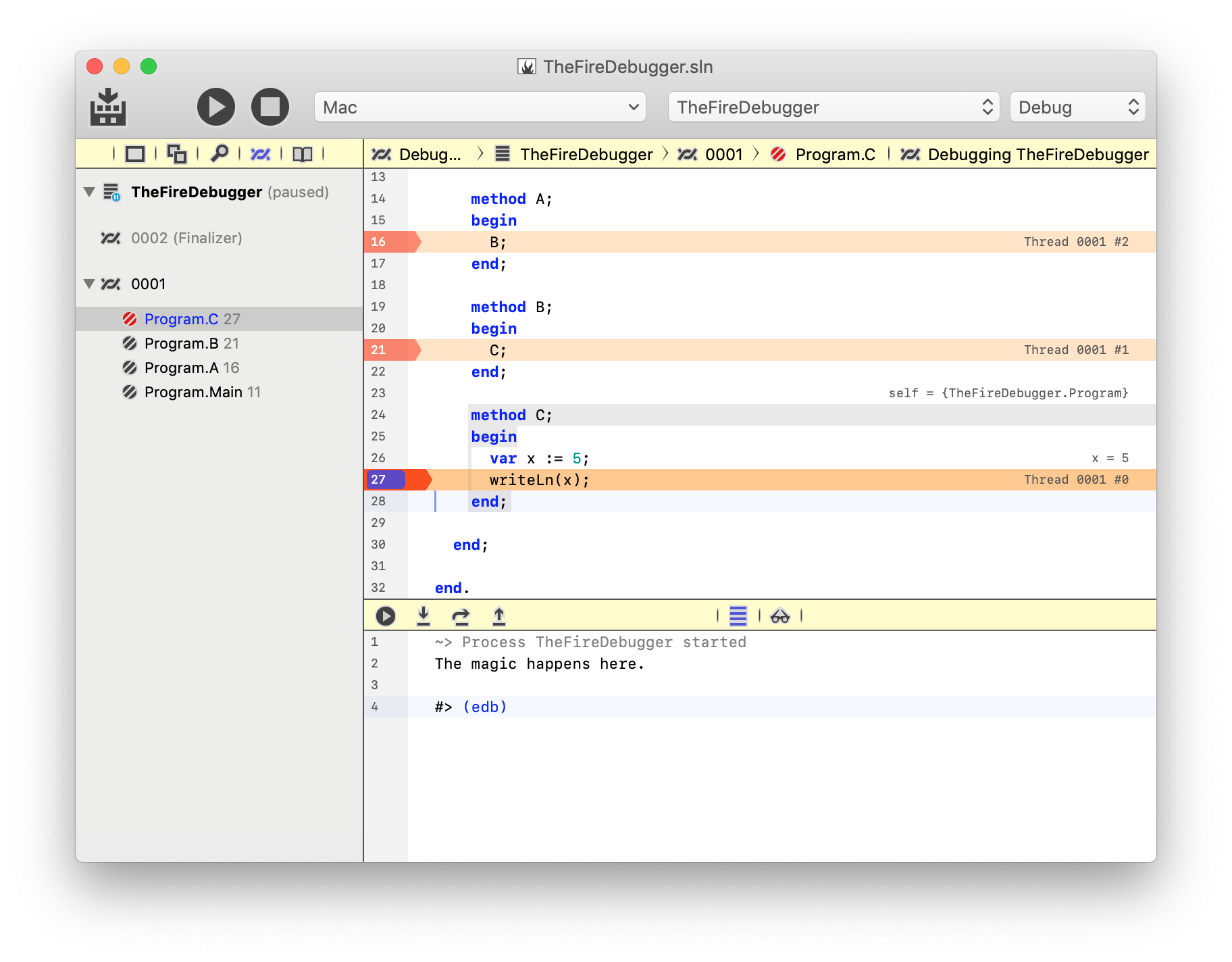

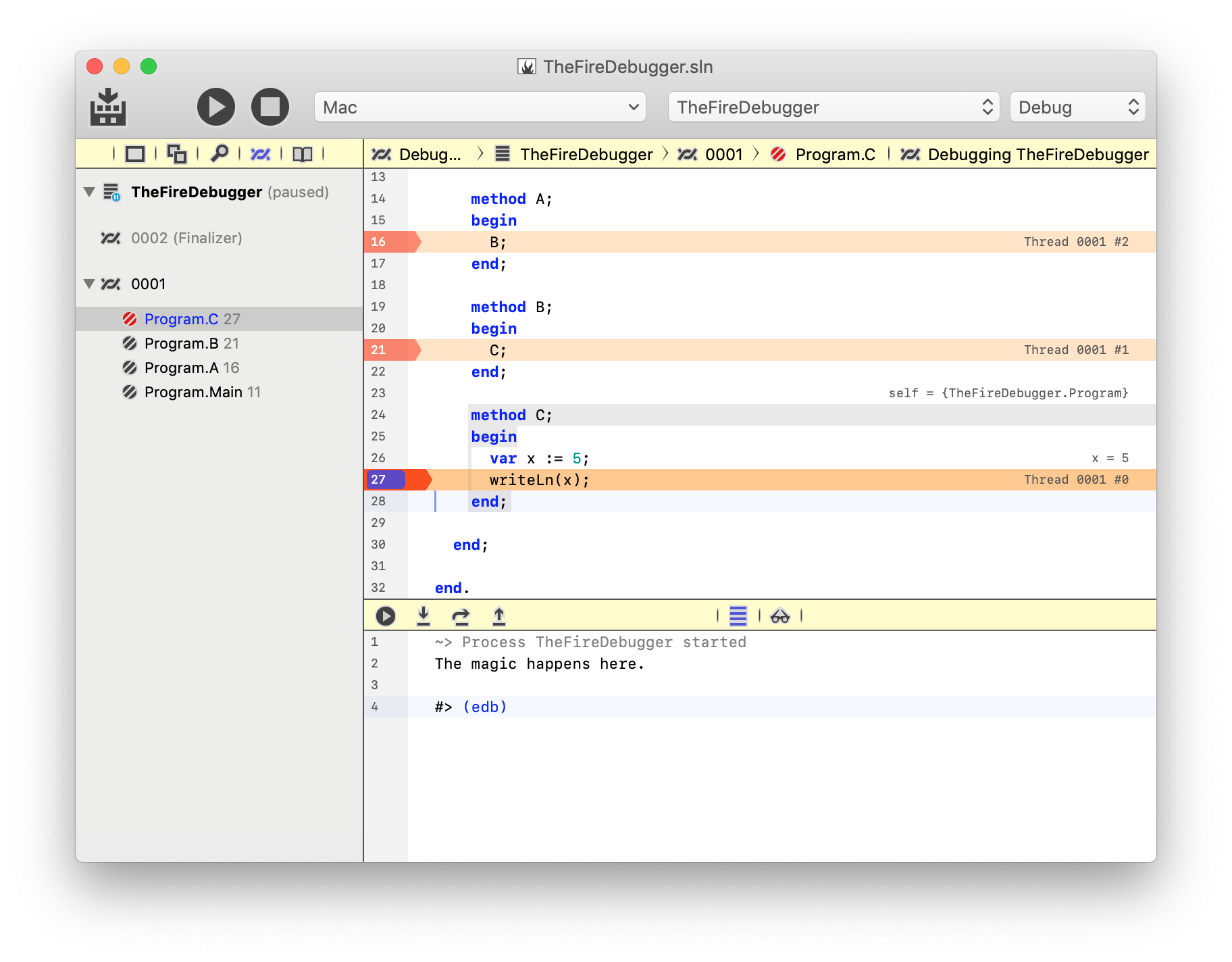

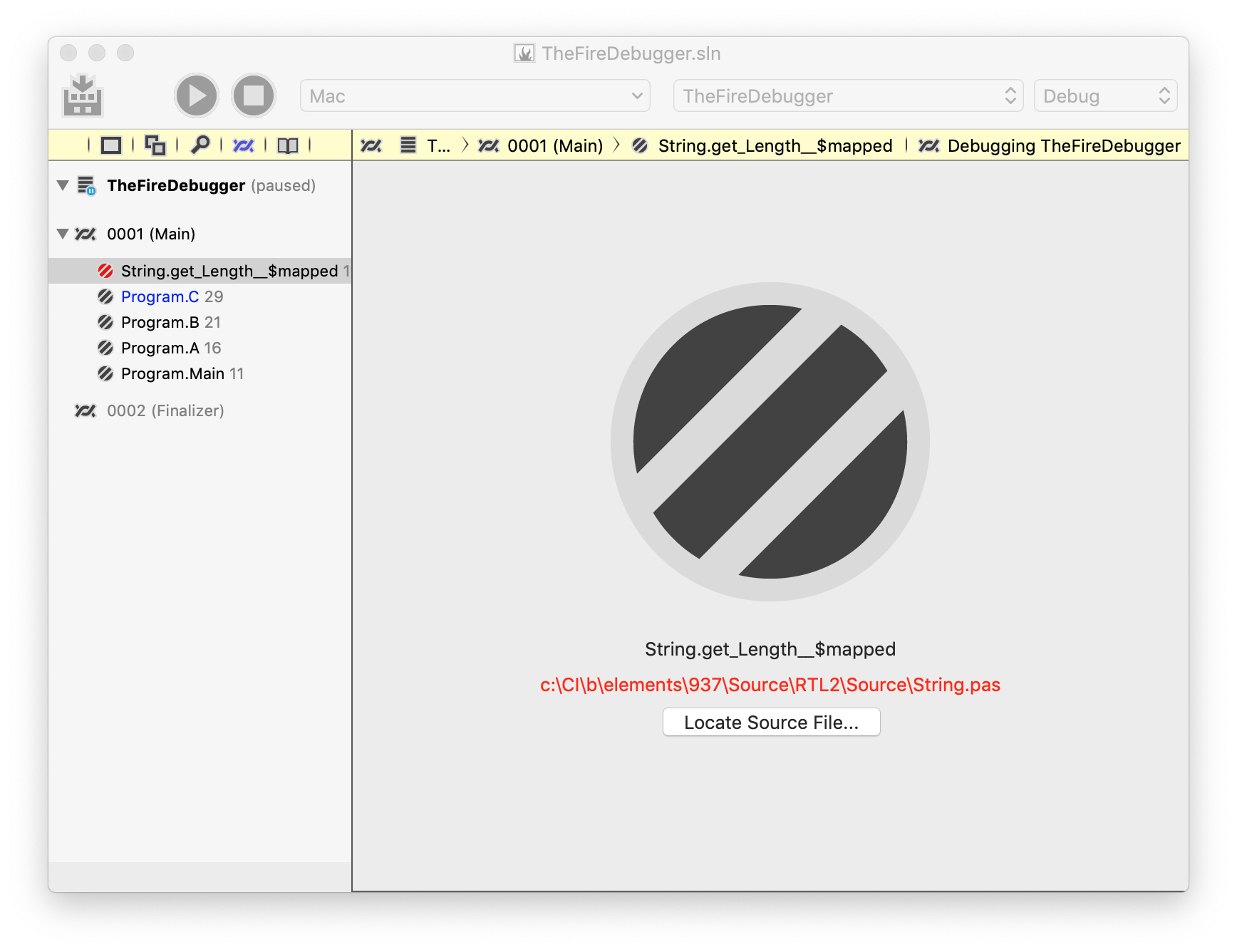

Non-Asynchronous Futures